We are approaching vacation season here in Sweden, but the tech news keeps on coming! Top news from last week includes a new AI model from researchers at Radboud University that reconstructs what someone is looking at from recordings of their brain activity. French startup Kyutai has also introduced what it calls the world’s first “real-time voice AI”, developed in less than 6 months by 8 people. And to wrap it up, Meta has launched Meta 3D Gen, a new state-of-the-art text-to-3D asset generation model that looks very promising for anyone working with digital assets!

This week’s news:

- Mind-Reading AI Recreates What You’re Looking at With Amazing Accuracy

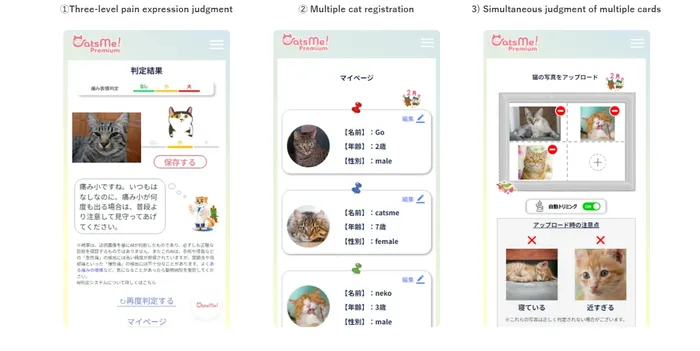

- New AI App Detects Pain in Cats Through Facial Analysis

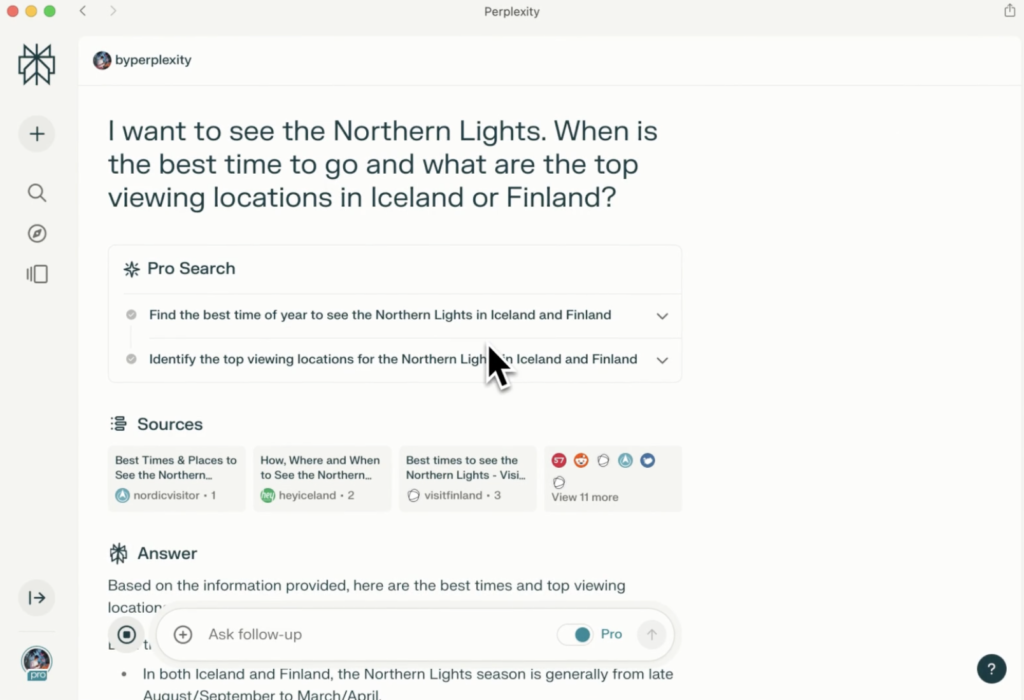

- Perplexity Announces Next Evolution of Pro Search

- French Startup ‘Kyutai’ Introduces Moshi: “First Real-Time Voice AI”

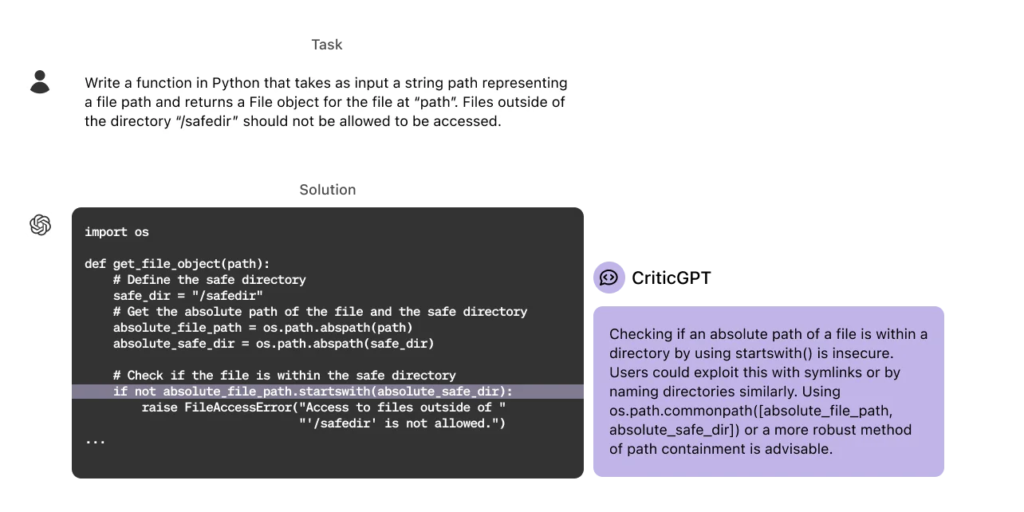

- OpenAI Announces CriticGPT – A Specialized GPT-4 Model for Code Review

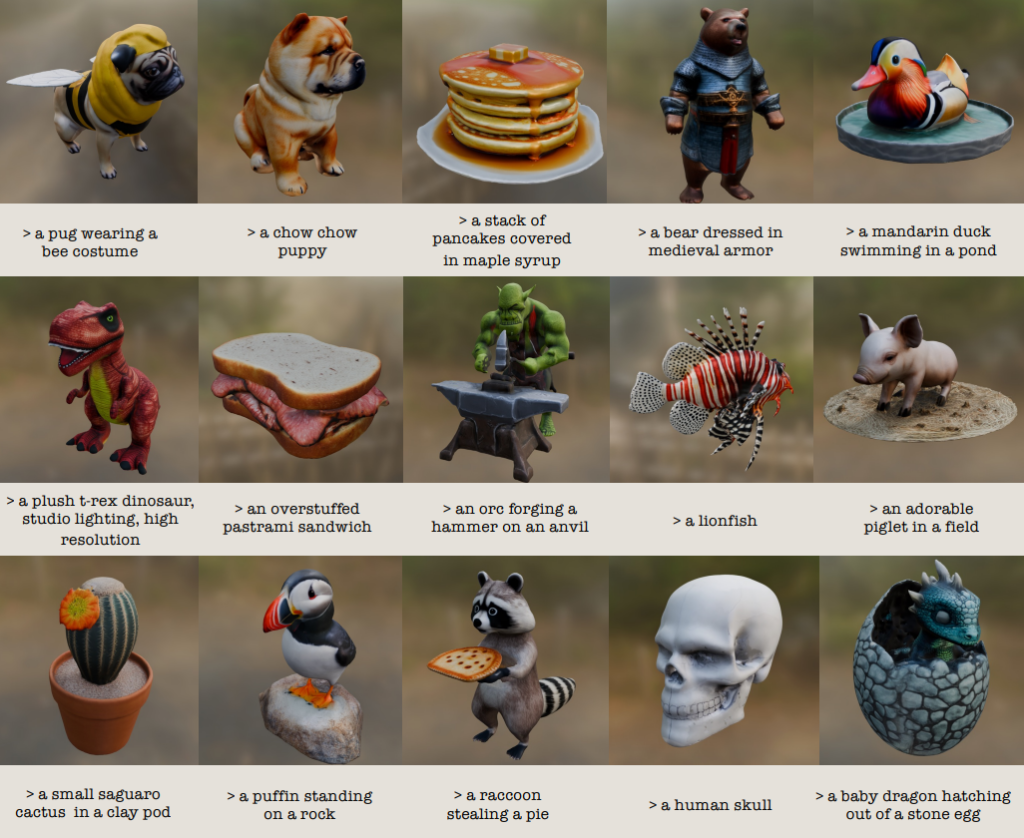

- Meta Launches Meta 3D Gen: State-of-the-art Text-to-3D Asset Generation

- OpenAI Signs Licensing Deal with TIME

- Runway Gen-3 Now Available to Everyone

Mind-Reading AI Recreates What You’re Looking at With Amazing Accuracy

The News:

- Researchers at Radboud University have developed an AI system that can create remarkably accurate reconstructions of what someone is looking at based on recordings of their brain activity.

- The team used both fMRI scans of humans and direct electrode recordings from a macaque monkey to capture brain activity while viewing images.

- In the image above, the top row is what the monkey saw, and the bottom row is the images the AI system reconstructed based on brain activity.

My take: There are a few limitations in the study – the main one being that the AI reconstructions were performed using images already present in the dataset. This means that the AI system was not truly “reading” new images from brain activity, but rather matching patterns to known stimuli. The researchers also noted that it is easier to accurately reconstruct AI-generated images than real ones, which could affect the practical applications of the technology in real-life scenarios. Nevertheless, the study represents a significant advancement in the field of neural decoding and brain-computer interfaces, with many potential future use cases.

New AI App Detects Pain in Cats Through Facial Analysis

https://aibusiness.com/ml/ai-app-detects-subtle-signs-of-pain-in-cats-through-facial-analysis

The News:

- A new app, CatsMe!, developed by Carelogy and researchers from Nihon University in Japan, uses an AI model trained on a vast dataset of expert-labeled cat facial images to recognize telltale signs of feline discomfort.

- The app has an accuracy rating of more than 95% and has been downloaded by more than 200,000 users in 50 countries.

- Why is this needed? According to the Drake Center for Veterinary Care, cats often conceal signs of pain as part of their instincts to avoid attracting attention from predators. Detecting pain in cats is difficult even for experts.

My take: How do you know that your pet cat is in pain? Most times you don’t, really. I own and have owned several cats, and typically the times you know your cat is in pain are the times it comes home with severe wounds from a fight with another cat. This app will be very welcome to cat owners all over the world, and I hope it will be released in Europe soon.

Perplexity Announces Next Evolution of Pro Search

https://www.perplexity.ai/hub/blog/pro-search-upgraded-for-more-advanced-problem-solving

The News:

- Before searching the web for answers to your prompt, Perplexity now first analyzes the prompt and breaks it down into separate tasks. It then spawns a separate search for each task, analyzes the search results, and takes actions based on its findings. Perplexity can even initiate follow-up searches that build on previous results.

- Perplexity Pro has also integrated the Wolfram|Alpha engine, meaning it can solve complex mathematical questions.
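The decompose-then-search flow described above can be sketched in a few lines of Python. This is only an illustration of the general pattern, not Perplexity's actual implementation: `decompose`, `pro_search`, `search` and `follow_up` are hypothetical names, and the planner is a trivial stand-in where a real system would presumably use a language model.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class SearchResult:
    query: str
    snippets: list[str]


def decompose(prompt: str) -> list[str]:
    """Hypothetical planner: split a prompt into separate tasks.

    Stand-in logic only: it splits on the word 'and'.
    """
    parts = [part.strip(" ?.") for part in prompt.split(" and ")]
    return [part for part in parts if part]


def pro_search(
    prompt: str,
    search: Callable[[str], list[str]],
    follow_up: Callable[[SearchResult], Optional[str]],
    max_rounds: int = 2,
) -> list[SearchResult]:
    """Run one search per task, then follow-up searches built on the results."""
    results: list[SearchResult] = []
    pending = decompose(prompt)
    for _ in range(max_rounds):
        next_round: list[str] = []
        for query in pending:
            result = SearchResult(query, search(query))
            results.append(result)
            # Inspect the result and decide whether another search is needed.
            next_query = follow_up(result)
            if next_query:
                next_round.append(next_query)
        if not next_round:
            break
        pending = next_round
    return results


if __name__ == "__main__":
    # Stub search backend and follow-up rule, for demonstration only.
    found = pro_search(
        "What is Moshi and who funded Kyutai?",
        search=lambda q: [f"stub result for {q}"],
        follow_up=lambda r: None if r.query.startswith("more on") else f"more on {r.query}",
    )
    for item in found:
        print(item.query, "->", item.snippets)
```

The `max_rounds` cap is a design choice in this sketch: without it, a follow-up rule that always asks for more would loop forever.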

My take: Perplexity lives in a gray zone between web scraping and information retrieval based on specific user queries. Yes, it’s a bot that collects information using headless browsers. But this is also the case for page preloading in Chrome, when it loads web pages it thinks you might want to read. Perplexity is not an LLM, and you can use it with models such as Claude or ChatGPT, so Perplexity does not “train” anything from web sites. But they do index information, similar to Google. I have tried the new Pro Search, and it’s actually remarkably good for quickly getting information about specific topics. This is how the AI-powered search by Google should have worked when they launched it in May, instead of encouraging people to eat glue and rocks.

French Startup ‘Kyutai’ Introduces Moshi: “First Real-Time Voice AI”

https://kyutai.org/cp_moshi.pdf

The News:

- French startup Kyutai (the name is Japanese for “sphere” or “orb”) has created a software platform called “Moshi”. Moshi got a lot of attention this week as the “First Real-Time Voice AI”, and also because it was developed by only 8 people in 6 months.

- Kyutai was founded in 2023 and has received €300 million in funding from Iliad (French telecommunications company founded by billionaire Xavier Niel), CMA CGM SA (French container transportation and shipping company) and Eric Schmidt.

- Kyutai is registered as a privately-funded non-profit organization. Kyutai plans to release Moshi as an open-source project, which will include the model, code, and associated research papers.

- Behind the scenes, “Moshi” uses an internally built model called “Helium-7B”, a 7-billion-parameter multimodal language model. Helium-7B runs locally on both MacBooks and consumer-grade GPUs, and much like what OpenAI demonstrated a few weeks ago, Kyutai has implemented quite a few tricks to get the latency as low as 200 milliseconds. One such trick is “Mimi”, their audio compression system, which compresses audio 300 times more than MP3 and captures both acoustic and semantic information.

My take: The quality of their language model Helium-7B, in particular its responses to the questions they asked, was quite bad even for a 7B model. When asked “What kind of gear do you need to bring for mountain climbing?” the model responded with “You might want to take your time getting your climbing shoes on, because you don’t want to be using a egg.” These weird types of answers just kept showing up, even in this controlled demo. I also thought the way the AI constantly interrupted the speakers in the keynote was annoying, but I can see why they did it for demonstration purposes. Their presentation is well worth checking out if you have time for it; it was the first time I have heard an English text-to-speech engine speak with a clear French accent.

OpenAI Announces CriticGPT – A Specialized GPT-4 Model for Code Review

https://openai.com/index/finding-gpt4s-mistakes-with-gpt-4

The News:

- OpenAI has announced CriticGPT – a specialized version of GPT-4 for reviewing source code.

- CriticGPT is meant to be used with RLHF (Reinforcement Learning from Human Feedback). As models such as GPT-4o become better at generating complex code, humans will get progressively worse at finding difficult bugs in the source code. CriticGPT helps identify possible issues in the reinforcement process.

- In tests, users who got help from CriticGPT outperformed those without help 60% of the time.

My take: One of the main drawbacks with all generative AI models today is that the models cannot “see” the things they generated, such as source code, and fix things that look wrong. Having two models working together is an interesting approach, and maybe this is the way we will approach this task in the near future for things such as user interfaces and shaders.

Meta Launches Meta 3D Gen: State-of-the-art Text-to-3D Asset Generation

https://ai.meta.com/research/publications/meta-3d-gen

The News:

- Meta has introduced Meta 3D Gen (3DGen), a new state-of-the-art pipeline for text-to-3D asset generation.

- 3DGen combines two key components: Meta 3D AssetGen for text-to-3D generation and Meta 3D TextureGen for text-to-texture generation. Both of these are released as separate research papers.

- The system can generate high-quality 3D assets with textures and physically-based rendering (PBR) materials in less than one minute.

- 3DGen uses a multi-stage approach, generating the initial 3D asset first and then refining the texture. In user studies, 3DGen achieved a win rate of 68% compared to single-stage models.

My take: For researchers, students or indie developers, getting access to high-quality textured assets has always been costly, time-consuming and problematic. Being able to generate any asset using a text-to-3D prompt is amazing, especially for prototyping, and I can see this generative approach being used in a wide range of contexts, not only for game development but for any type of visualisation.

Read more:

- Meta 3D AssetGen: Text-to-Mesh Generation with High-Quality Geometry, Texture, and PBR Materials

- Meta 3D TextureGen: Fast and Consistent Texture Generation for 3D Objects | Research – AI at Meta

OpenAI Signs Licensing Deal with TIME

https://openai.com/index/strategic-content-partnership-with-time

The News:

- OpenAI gets full access to TIME’s current content and its extensive archives from the last 101 years for training their new models.

- When users ask for specific information, ChatGPT will link back to the original source on time.com.

- TIME will in turn get access to OpenAI’s technology to develop new products.

My take: It’s hard to know how much better this data will make ChatGPT in the future, since OpenAI has not disclosed whether its current models were already trained on content scraped from time.com. Having links directly to time.com, however, is a good thing, and having access to archives dating back 101 years is excellent.

Runway Gen-3 Now Available to Everyone

The News:

- Last month Runway announced “Gen-3”, the first model in its next-generation series, trained as a step towards ‘general world models’.

- Gen-3 features significant upgrades to key features like character and scene consistency, camera motion and techniques, and transitions between scenes.

- Access to Gen-3 requires a subscription that starts at $12 per month, which gives users 63 seconds of generations.
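To put that price in perspective, here is a quick back-of-the-envelope calculation. It is a rough sketch that assumes the entire $12 goes towards the 63 seconds of generation and ignores anything else the subscription may include; the 10-second clip length is just a hypothetical example, not a Runway setting.

```python
MONTHLY_PRICE_USD = 12.0   # entry-level subscription price quoted above
SECONDS_INCLUDED = 63      # seconds of generated video included per month


def cost_per_second(price: float = MONTHLY_PRICE_USD,
                    seconds: int = SECONDS_INCLUDED) -> float:
    """Effective price of one second of generated video."""
    return price / seconds


def clip_cost(clip_seconds: float) -> float:
    """Effective price of a clip of the given length."""
    return clip_seconds * cost_per_second()


def full_clips(clip_seconds: int) -> int:
    """How many whole clips of the given length fit in the monthly allowance."""
    return SECONDS_INCLUDED // clip_seconds


if __name__ == "__main__":
    print(f"Cost per second: ${cost_per_second():.2f}")       # about $0.19
    print(f"Cost of a 10-second clip: ${clip_cost(10):.2f}")   # about $1.90
    print(f"10-second clips per month: {full_clips(10)}")      # 6
```

So every failed generation costs real money, which matters when prompts rarely give a usable result on the first attempt.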

My take: I gave it my standard video-generating prompt “40 year old man jogging through Swedish forest on gravel roads in the sunset” and you can see the results below. While it performed better than Luma AI’s “Dream Machine”, released two weeks ago, the results are still quite bad. It seems that most of these modern video generators perform well when they can copy lots of data from the material they have been trained on, or mix it into something that looks like something else entirely, like animals made out of food for example. Still, the potential is there, and we will probably reach the point where most b-roll can be generated by an AI engine within two years. The main question is if we want to.

Read more:

- Javi Lopez ⛩️ on X: “⚡ The best GEN-3 prompts so far

- Proper on X: 11 short films showing off the new model