Last week Apple announced that it is delaying Apple Intelligence in the EU due to the Digital Markets Act. This week, the European Commission is threatening to fine Microsoft up to 10 percent of its annual global revenue for bundling Teams into Microsoft Office. The new European Digital Markets Act (DMA) came into effect in March 2024, and it will significantly affect which new platform features the seven “gatekeeper” companies introduce in Europe. It will probably take a few years before we can see whether the DMA was a good or terrible decision for European companies in the tech industry, but changes are coming.

This week’s news:

- European Commission Launches Investigation against Microsoft for Integrating Teams with Office

- Waymo One is Now Open to Everyone in San Francisco

- AI Music Generators “Suno” and “Udio” Sued by Major Record Labels

- LinkedIn Cuts Ticket Resolution Time by 28.6% using Retrieval-Augmented Generation (RAG)

- Google Launches Gemma 2 Open AI Models

- Google Opens up Gemini 1.5 Pro 2 Million Token Context Window for All Developers

- Anthropic Releases “Projects” for Claude AI Pro and Team Users

- Apple and EPFL Launch 4M-21 Multimodal AI Model with Permissive License

European Commission Launches Investigation against Microsoft for Integrating Teams with Office

https://ec.europa.eu/commission/presscorner/detail/en/ip_24_3446

The News:

- The European Commission has informed Microsoft of its preliminary view that the company has breached EU antitrust rules.

- The Commission is concerned that Microsoft may have granted Teams a distribution advantage by not giving customers the choice whether or not to acquire access to Teams when they subscribe to their SaaS productivity applications.

- Microsoft, one of seven designated “gatekeeper” companies under the DMA (which, by sheer coincidence, are all from the US or Asia), risks a fine of up to 10% of its annual worldwide turnover if the breach is confirmed.

My take: I believe that the European Commission expects these seven gatekeeper companies to capitulate and fully adapt their global strategy to EU law. In practice, I believe the entire European Union risks falling years behind the rest of the world in technical development. Companies like Apple, Google and Microsoft will continue to develop their platforms at a speed unmatched by European companies, but they will all delay their EU releases by several months, maybe years. We have already seen this with Apple Intelligence, and with Microsoft risking a fine of up to 10% of its annual worldwide turnover, it surely won’t release any new features in the EU without being absolutely certain that the European Commission will not object. And that will take a long time for every single new feature added to their platforms.

Waymo One is Now Open to Everyone in San Francisco

https://waymo.com/blog/2024/06/waymo-one-is-now-open-to-everyone-in-san-francisco

The News:

- On June 25, Waymo opened its driverless service “Waymo One” to the public in San Francisco.

- Through the end of March 2024, the Waymo Driver had accumulated over 3.8 million rider-only miles in San Francisco. Compared to human drivers, the Waymo Driver was involved in 17 fewer crashes with injuries, a 78% reduction.

- Waymo is currently providing more than 50,000 rides a week in three major urban areas.

My take: Here is another area where the EU is falling behind the US and China. In Sweden, home to Polestar and Volvo Cars, heavy regulation means we still have not been able to put a single autonomous car on public roads, even for testing. Meanwhile, Waymo provides over 50,000 rides per week, collecting huge amounts of valuable data to improve its algorithms and hardware at breakthrough speed, and it is already developing its next-generation autonomous driving system on next-generation Nvidia hardware.

AI Music Generators “Suno” and “Udio” Sued by Major Record Labels

The News:

- The Recording Industry Association of America (RIAA) is suing Suno and Udio, seeking up to $150,000 per infringed work with potentially billions in damages.

- The labels claim Suno and Udio trained their AI models on copyrighted recordings without permission or compensation.

- RIAA claims that Suno and Udio mimic specific artists and hit songs, and that both companies have admitted to training on copyrighted material.

What you might have missed: In an interview with Rolling Stone, Antonio Rodriguez, one of Suno’s earliest investors, said “Honestly, if we had deals with labels when this company got started, I probably wouldn’t have invested in it. I think that they needed to make this product without the constraints.”

My take: If Suno and Udio have done nothing wrong, then this lawsuit should be no problem for them. They should be able to show how they trained their models and what materials they used. On the other hand, if they did use copyrighted material to train their models, I am glad I am not one of the investors in these companies.

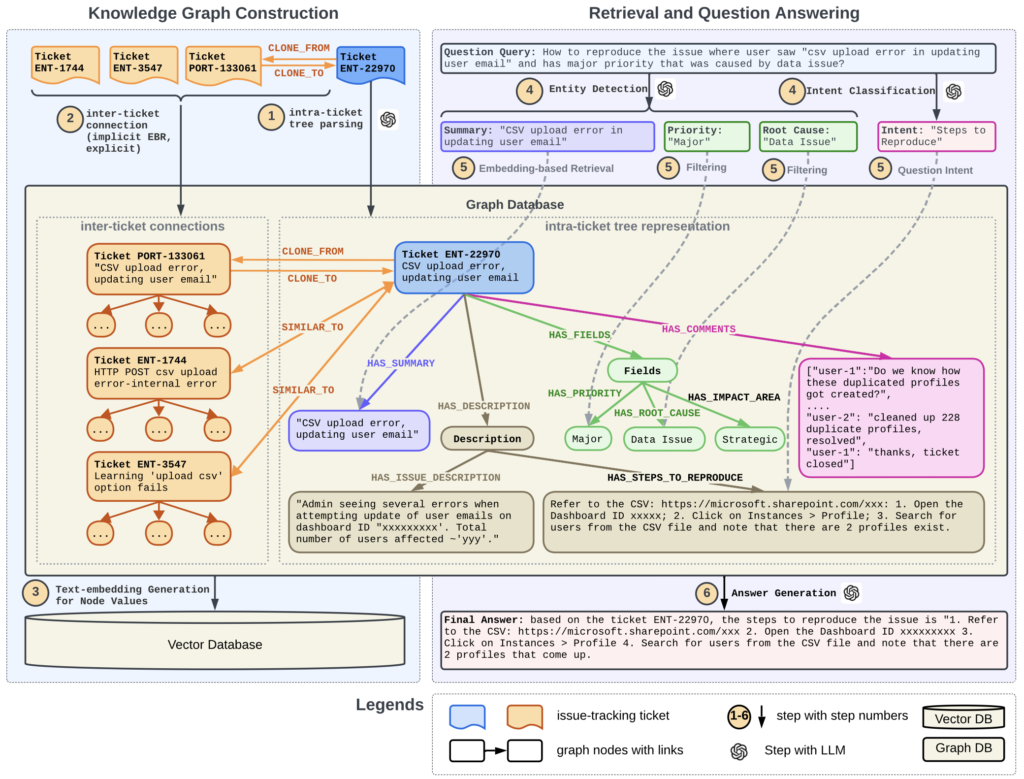

LinkedIn Cuts Ticket Resolution Time by 28.6% using Retrieval-Augmented Generation (RAG)

https://arxiv.org/pdf/2404.17723

The News:

- LinkedIn made a Knowledge Graph (KG) by parsing historical customer support tickets into structured tree representations, preserving their internal relationships.

- Tickets are linked based on contextual similarities, dependencies, and references — all of these make up a comprehensive graph.

- Each node in the KG is embedded to enable semantic search and retrieval. The RAG question-answering system then identifies relevant sub-graphs through graph traversal and semantic-similarity search.

- During question answering, the system parses consumer queries and retrieves related sub-graphs from the knowledge graph to generate answers.

- The solution is based on GPT-4, E5, and a combination of a vector database (Qdrant) and a graph database (Neo4j).
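
The retrieval flow described above can be illustrated with a minimal sketch. This is not LinkedIn's implementation: a bag-of-words vector stands in for E5 embeddings, plain dictionaries stand in for the vector and graph databases, and the ticket data as well as the names `embed`, `cosine` and `retrieve_subgraph` are hypothetical, made up purely for illustration.

```python
import math
from collections import Counter

# Hypothetical toy tickets; each node is one section of a parsed ticket.
NODES = {
    "T1:summary": "user cannot log in after password reset",
    "T1:solution": "clear cached credentials and log in again",
    "T2:summary": "profile photo upload fails with timeout",
    "T2:solution": "retry upload with a smaller image file",
}

# Graph edges: intra-ticket structure only in this toy example.
# Links between related tickets would be added in the same way.
EDGES = {
    "T1:summary": ["T1:solution"],
    "T1:solution": ["T1:summary"],
    "T2:summary": ["T2:solution"],
    "T2:solution": ["T2:summary"],
}


def embed(text):
    """Toy embedding: word counts (a stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_subgraph(query, hops=1):
    """Pick the most similar node, then expand along graph edges."""
    q = embed(query)
    best = max(NODES, key=lambda n: cosine(q, embed(NODES[n])))
    selected = {best}
    frontier = {best}
    for _ in range(hops):
        frontier = {nb for n in frontier for nb in EDGES.get(n, [])} - selected
        selected |= frontier
    return best, sorted(selected)


best, subgraph = retrieve_subgraph("I cannot log in after a password reset")
# The retrieved sub-graph text is what would be passed to the LLM as context.
context = "\n".join(NODES[n] for n in subgraph)
print(best)
print(context)
```

The point of the graph step is that the query matches the ticket's problem description, while the edge brings along the linked resolution, which a pure similarity search over isolated text chunks could miss.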

My take: This paper is interesting since it shows how knowledge graphs can be used to improve RAG solutions. LinkedIn also reports real-life metrics from A/B tests, and since the time to resolution is measurable, the cost reduction can also be quantified. If your organisation has any kind of support system and has not yet explored knowledge graphs and Retrieval-Augmented Generation (RAG), now would be a good time to start investing in those technologies.

Google Launches Gemma 2 Open AI Models

https://blog.google/technology/developers/google-gemma-2

The News:

- Google has launched Gemma 2, the next generation of its open AI model series. Gemma 2 comes in two sizes: a 9B parameter model and a larger 27B parameter model.

- The 27B model offers competitive performance to models more than twice its size, while the 9B outperforms similar-class models like Llama 3 8B.

- The 27B version of Gemma 2 is designed to run inference efficiently at full precision on a single Google Cloud TPU host, NVIDIA A100 80GB Tensor Core GPU, or NVIDIA H100 Tensor Core GPU.
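
As a rough sanity check on the single-accelerator claim, the weight memory can be estimated with simple arithmetic. The sketch below rests on assumptions that are not stated in the announcement: that "full precision" means 16-bit weights (2 bytes per parameter), that the accelerator has 80 GB of memory, and that the KV cache and activations are ignored. The function name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(params_billions, bytes_per_param):
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return params_billions * 1e9 * bytes_per_param / 1e9


ACCELERATOR_GB = 80  # assumption: an 80 GB accelerator

for name, size in [("Gemma 2 9B", 9), ("Gemma 2 27B", 27)]:
    needed = weight_memory_gb(size, 2)  # assumption: 16-bit weights
    print(f"{name}: ~{needed:.0f} GB of weights, fits: {needed < ACCELERATOR_GB}")
```

Under these assumptions the 27B weights take roughly 54 GB, which leaves some headroom on an 80 GB device, whereas 32-bit weights (about 108 GB) would not fit.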

My take: Today, tech giants like Apple, Google and Meta have all released superb open language models with reasonable licensing agreements. The company that still does not release open models is, ironically, OpenAI. Being able to run the 27B model on a single GPU is great news, and I can see many companies choosing Gemma 2 for their next project.

Google Opens up Gemini 1.5 Pro 2 Million Token Context Window for All Developers

https://developers.googleblog.com/en/new-features-for-the-gemini-api-and-google-ai-studio

The News:

- At Google I/O, Google announced the “longest ever context window” of 2 million tokens in Gemini 1.5 Pro behind a waitlist. Last week they opened up access to the 2 million token context window on Gemini 1.5 Pro for all developers.

- Gemini 1.5 Pro also gains Code Execution, which means that the model can generate and run Python code and learn iteratively from the results until it gets to a desired final output.
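
The generate, run and refine loop described above can be sketched in a few lines. This does not use the Gemini API: `fake_model` is a hypothetical stand-in for an LLM call that returns a buggy first attempt and a corrected second one, and `run_candidate` and `generate_and_refine` are likewise invented names for illustration.

```python
def fake_model(task, feedback):
    """Hypothetical stand-in for an LLM call: returns Python source code.

    The first attempt contains an off-by-one bug; once the model
    receives feedback, it returns a corrected version.
    """
    if feedback is None:
        return "def solve(n):\n    return sum(range(n))"
    return "def solve(n):\n    return sum(range(n + 1))"


def run_candidate(source, test_input):
    """Execute generated code in a fresh namespace and call solve()."""
    namespace = {}
    exec(source, namespace)  # unsafe outside a sandbox; acceptable for a toy demo
    return namespace["solve"](test_input)


def generate_and_refine(task, test_input, expected, max_rounds=3):
    """Ask for code, run it, and feed the result back until it matches."""
    feedback = None
    for attempt in range(1, max_rounds + 1):
        source = fake_model(task, feedback)
        try:
            result = run_candidate(source, test_input)
        except Exception as exc:
            feedback = f"raised {exc!r}"
            continue
        if result == expected:
            return attempt, result
        feedback = f"got {result}, expected {expected}"
    return None, None


attempts, answer = generate_and_refine("sum the integers 1..n", 10, 55)
print(attempts, answer)
```

In a real system the generated code would run in an isolated sandbox, and the feedback string would be appended to the model's prompt for the next round.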

My take: Lots of interesting possibilities open up with a 2M context window, and it will be interesting to see how organizations take advantage of it in the coming months. The new Code Execution is the first step in an evolution where the LLM analyzes its own results and can adjust the code if the results differ from the expected outcome. Next steps include multimodal analysis of user interfaces, shaders, 3D renders and 3D models. With the current speed of improvement, we should hopefully see that within the next year.

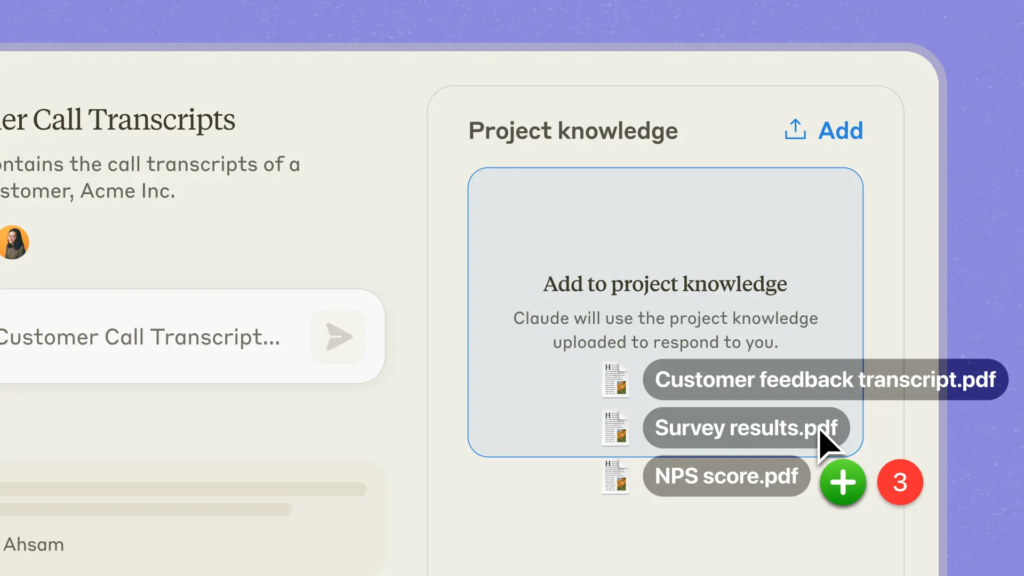

Anthropic Releases “Projects” for Claude AI Pro and Team Users

https://www.anthropic.com/news/projects

The News:

- Claude.ai Pro and Team users can now organize their chats into Projects, bringing together curated sets of knowledge and chat activity in one place—with the ability to make their best chats with Claude viewable by teammates.

- Each project includes a 200K-token context window, the equivalent of a 500-page book.

- By dragging in codebases, interview transcripts, or past work into the project, you provide context for Claude so you don’t have to start from zero with new chats. You can also write custom instructions.
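
To put the "500-page book" comparison in perspective, the figures above imply a certain number of tokens per page. The short calculation below uses the two numbers from the announcement. The words-per-token ratio is an assumption (a common rule of thumb for English text), not something Anthropic states.

```python
CONTEXT_TOKENS = 200_000  # from the announcement
BOOK_PAGES = 500          # from the announcement
WORDS_PER_TOKEN = 0.75    # assumption: rough rule of thumb for English text

tokens_per_page = CONTEXT_TOKENS / BOOK_PAGES
words_per_page = tokens_per_page * WORDS_PER_TOKEN
print(f"{tokens_per_page:.0f} tokens per page, about {words_per_page:.0f} words per page")
```

Roughly 300 words per page is in line with a typical printed book, so the comparison is plausible under this assumption.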

My take: Anthropic has really stepped up its game recently, with Claude 3.5 Sonnet, Artifacts and now Projects. The coding skills of Claude 3.5 are excellent, and I have switched to Claude myself from ChatGPT since it gives me results that better match what I am looking for.

Apple and EPFL Launch 4M-21 Multimodal AI Model with Permissive License

https://arxiv.org/abs/2406.09406

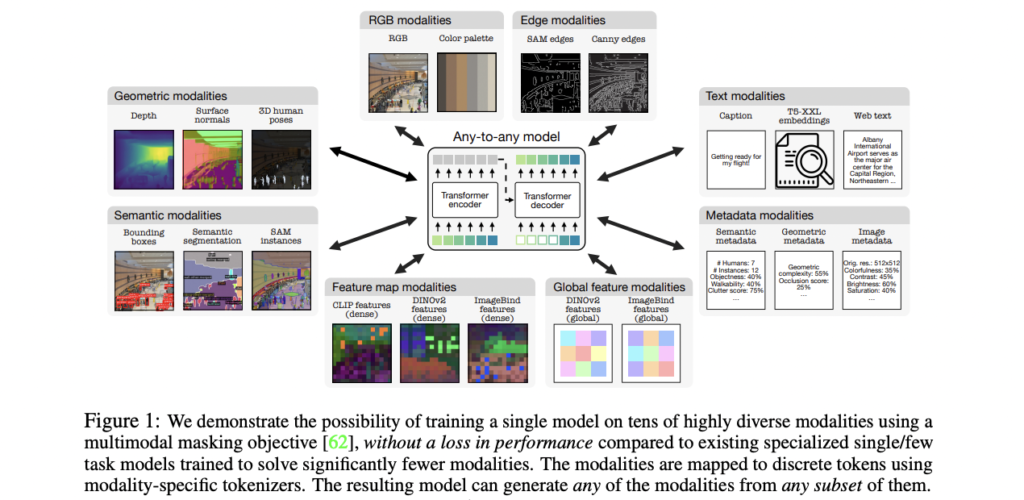

About 4M (Massively Multimodal Masked Modeling):

- 4M is a framework for training “any-to-any” foundation models, using tokenization and masking to scale to many diverse modalities.

- Models trained using 4M can perform a wide range of vision tasks, transfer well to unseen tasks and modalities, and are flexible and steerable multimodal generative models.

The News:

- Apple and EPFL (École Polytechnique Fédérale de Lausanne, a public research university in Lausanne, Switzerland) have released 4M-21, an effective multimodal model that solves tens of tasks and modalities.

- The release comprises demo code, sample results, and tokenizers of diverse modalities.

- Out-of-the-box capabilities include bounding boxes, semantic segmentation, depth, surface normals, human poses, and much more.

My take: Apple has not disclosed if and where 4M-21 is used in their upcoming Apple Intelligence launch, but I am willing to bet it is included in several places. It’s small (3B, fits in a phone) with performance matching much larger and specialised models, so it’s a natural fit for Apple’s on-device AI strategy. It’s also great to see Apple is releasing their model with a permissive license.

Read more:

https://github.com/apple/ml-4m