In 2019 Elon Musk said “I think we will have fully self-driving cars this year”. In 2020 it was still “level five autonomy this year”. In 2021 it was “this year” and in 2022 it was “we’ll achieve full self-driving this year”. And last year in 2023 Elon Musk said “I think we’ll do [FSD] this year”.

At this year’s event “We, Robot” it was no longer “this year”, it was “next year”, and in two regions: California and Texas. Maybe if the event had been held in January 2025 it would still have been “this year”. I was one of those who believed Tesla would actually launch something new last week, that we had finally reached the point where it was no longer “this year”, but “now”. But it was just the same story as the previous five years.

Maybe it’s simply impossible to balance the price of fully autonomous and safe vehicles with something people can afford to buy? The Waymo robotaxis still employ 13 cameras, 4 lidars, 6 radars and a large array of audio receivers. The price of such a vehicle if mass-produced would be astronomical. It’s up to Tesla to show that it’s possible to do safe FSD on a large scale with just cameras, and so far they have not been convincing.

As a bonus, here’s a slideshow showing all the times Elon Musk has promised Full Self-Driving (FSD) cars “this year”: Here’s To 10 Years Of Elon Promising Us Self-Driving Cars

WHEN DO YOU WANT TO RECEIVE THIS NEWSLETTER?

I made a poll on LinkedIn asking when you want to receive the newsletter, to avoid it being pushed to the bottom of your inbox, and I already got a few requests to send it out early at 6:30 CET. Does that work for you? Either vote in the poll or send me a message; I would love to hear your comments.

THIS WEEK’S NEWS:

- Tesla Concept Day: Robotaxis, Robovans and Humanoid Robots

- Zoom Getting Closer to AI Avatars Going to Your Meetings

- Researchers at Penn State Create AI-Powered Electronic Tongue

- OpenAI and Hearst Form Strategic Partnership

- Amazon Launches AI Shopping Guide

- Nobel Prize in Physics to Hopfield and Hinton for AI Research

- Nobel Prize in Chemistry to Baker, Hassabis and Jumper for AlphaFold

Tesla Concept Day: Robotaxis, Robovans and Humanoid Robots

https://twitter.com/Tesla/status/1844573295510728857

The News:

- On October 10, Tesla announced their upcoming Robotaxi. It is planned for production in 2026 and will be priced below $30,000.

- According to Elon Musk “autonomous cars are expected to be 10–20 times safer than human-driven vehicles and could cost as little as 20 cents per mile, compared to the $1 per mile for city buses”.

- Next year Tesla plans to start fully autonomous unsupervised FSD (Full Self-Driving) with the Model 3 and Model Y in Texas and California.

- Tesla do not use LIDARs for their autonomous systems, but instead rely on AI, cameras and extensive training data.

- In addition to the Robotaxi, a larger self-driving Robovan was also introduced, carrying up to 20 people.

- Tesla also demonstrated the latest Optimus humanoid robots, with Elon Musk predicting a sale price between $20,000 and $30,000 and claiming that they will be “the biggest product ever of any kind”.

What you might have missed: Robert Scoble visited the event and asked one of the Optimus robots how much of it was AI and how much was remote assist. It turns out that most things about the Optimus, except the actual walk cycle, were remotely assisted. According to Robert Scoble “a human was wearing VR somewhere else and was embodied inside the robot”.

My take: I don’t really agree with The Verge saying “Elon Musk’s robotaxi is finally here”. There is a special type of company that has to rely on concept demonstrations to stay relevant, and Tesla just got added to my list. At the event Tesla didn’t really show anything new – they had remotely controlled “cybertaxi” cars-on-rails in a fully sealed-off area, they had walking humanoid robots that were remotely controlled by people using VR headsets, and again they promised to solve FSD in the robotaxi without using lidars, without explaining how. It’s no wonder Tesla’s stock dropped 8.8% right after the event.

When it comes to robotaxis, however, I do believe they are the future of transportation. Instead of reserving precious city areas for parking, these areas could be turned into parks, and cars could drive away and park outside the city by themselves. Instead of “wasting” two hours every day commuting to work, this time can be spent working, meaning you get 40 hours of spare time back every month. Instead of leaving work early to pick up the kids from daycare, you can let the robotaxi pick up the kids and then meet you at work when it’s your turn to go home. The possibilities are nearly endless, and if robotaxis can be sold at the same or lower price than regular electric cars they will probably take over all car sales once people trust the FSD algorithms. So I understand why Tesla want to go in this direction, but showing concept demonstrations like this doesn’t really work anymore, especially when Waymo is already performing over 100,000 paid fully autonomous robotaxi rides per week.

Read more:

- Tesla Cybercab announced: Elon Musk’s robotaxi is finally here – The Verge

- Tesla: We, Robot – YouTube

Zoom Getting Closer to AI Avatars Going to Your Meetings

https://news.zoom.us/zoomtopia-2024-unveiling-ai-first-work-platform-innovations/

The News:

- Earlier this year Zoom’s CEO Eric Yuan said that one day an AI Avatar will be able to do your job on your behalf. According to Yuan, the avatar will speak in your Zoom meetings for you, answer emails and take phone calls, supposedly freeing you up for the rest of your life.

- Last week, Zoom got a bit closer to that vision. At the event called Zoomtopia 2024, Zoom launched:

- Companion 2.0 – an AI Assistant that knows what you are looking at, and can provide “intelligent suggestions and responses”. It can also search the web for you and take actions as an agent.

- Zoom Tasks – which uses the AI Companion to help “detect, recommend, and complete tasks for a user based on conversations from across Zoom Workplace”.

- Custom AI Avatars – initially available only in Zoom Clips in 2025, with the ability to create video content from text scripts.

My take: Is this really where we want to be going with digital meetings? I think digital meetings are problematic as they are – high audio latency makes for awkward conversations if both parties use Bluetooth connections, most participants have poor video quality and lighting, and every simple human interaction you want to have has to be scheduled into a meeting. I don’t really see how adding AI agents into meetings that act on behalf of certain participants would make this experience better. What’s your opinion?

Researchers at Penn State Create AI-Powered Electronic Tongue

https://www.psu.edu/news/research/story/matter-taste-electronic-tongue-reveals-ai-inner-thoughts

The News:

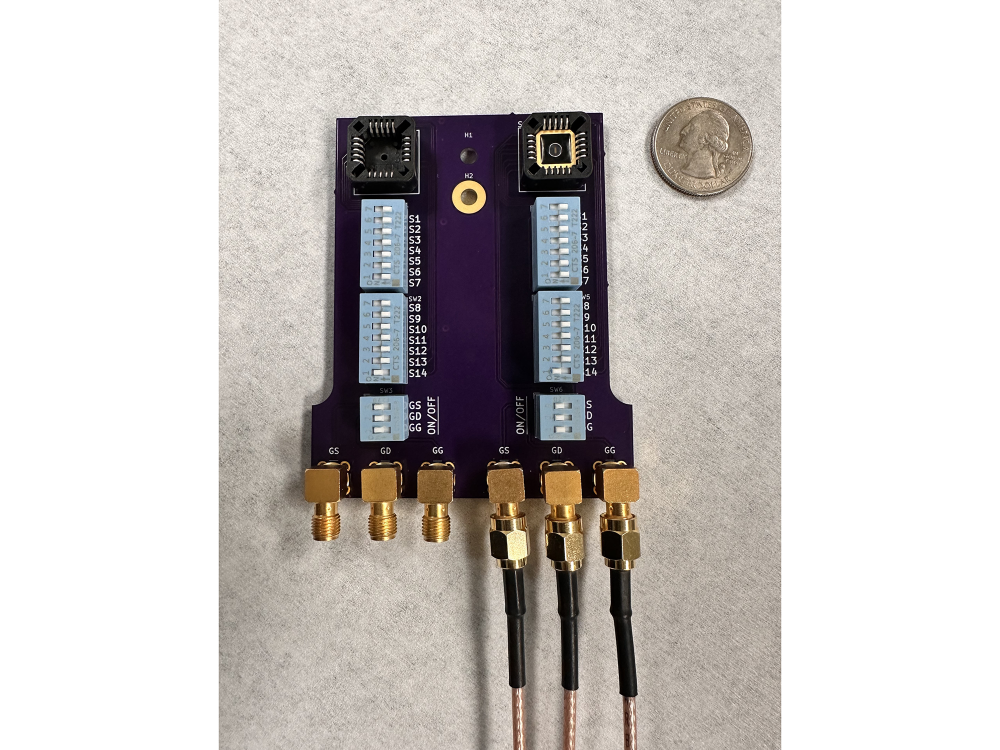

- Researchers at Penn State have created an AI-powered electronic “tongue” that can identify subtle differences in liquids and detect food spoilage.

- The electronic tongue combines a special sensor with an AI modeled after the human brain’s taste center, enabling it to ‘taste’ liquids.

- The tongue can ID differences in similar liquids like watered-down milk, sodas, coffee, and spoiled fruit juices with over 80% accuracy in about a minute.

My take: This was unexpected but fascinating news! There have been many innovations this year when it comes to the vision and hearing capabilities of digital systems, and taste feels like the natural next step. If you saw the video of the factory worker in China who had to taste 10,000 vapes a day to verify that the liquid formula was kept the same, you probably also started thinking about how many other workers there are out there in different factories sniffing and tasting thousands of products every day. Hopefully innovations such as this electronic tongue will make life much easier for many factory workers in the near future!

OpenAI and Hearst Form Strategic Partnership

https://openai.com/index/hearst

The News:

- Last week OpenAI announced a strategic partnership with Hearst, publisher of brands like Houston Chronicle, San Francisco Chronicle, Esquire, Cosmopolitan, ELLE, Runner’s World and Women’s Health.

- The collaboration spans over 20 magazine brands and 40+ newspapers.

- Hearst joins a long list of publishers that have recently partnered with OpenAI, such as Condé Nast (Vogue, The New Yorker, Vanity Fair and Wired) and TIME.

My take: As a runner, having access to a personal assistant that has all the knowledge from every issue of Runner’s World ever published would be truly amazing. This is all coming to the next version of ChatGPT, and I believe it will be a true game-changer to work with since it will truly be an “expert” in everyday topics, human needs and human interaction.

Amazon Launches AI Shopping Guide

https://www.aboutamazon.com/news/retail/amazon-ai-shopping-guides-product-research-recommendations

The News:

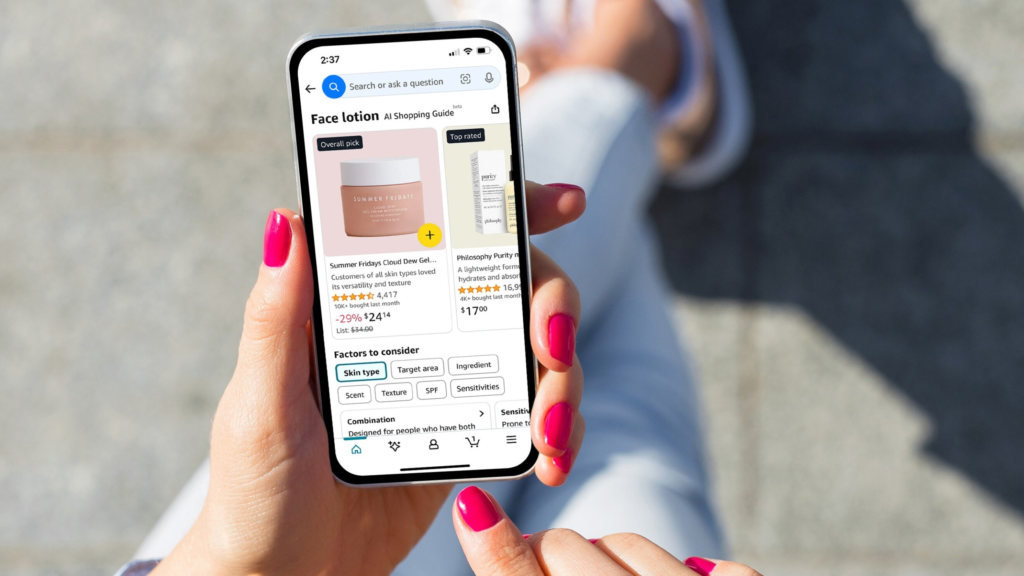

- Amazon is rolling out a new AI-powered Shopping Guide to help customers find products based on the specific features they need.

- The new AI-powered “Shopping Guides” are now rolling out in the US on Amazon’s mobile website and apps for iOS and Android, “presenting users with more tailored product information and recommendations when they’re browsing.”

My take: We might soon see an end to “filter settings” with dozens of parameters on various e-commerce websites. Instead you tell the guide what is most important to you and it filters automatically based on item descriptions and technical features. If you have a website where customers browse items today, you should probably check out how Amazon is developing their Shopping Guides, because as soon as customers get used to this experience they will probably want to have it on other sites as well.

Nobel Prize in Physics to Hopfield and Hinton for AI Research

https://www.nobelprize.org/prizes/physics/2024/press-release

The News:

- This year’s Nobel Prize in Physics went to John Hopfield and Geoffrey Hinton “for foundational discoveries and inventions that enable machine learning with artificial neural networks”.

- Along with David Rumelhart and Ronald Williams, Hinton was instrumental in popularizing the backpropagation algorithm, which is essential for training multi-layered neural networks. Hinton’s work laid the groundwork for the modern resurgence of neural networks, particularly in deep learning, leading to breakthroughs in areas like image recognition and natural language processing.

- Hopfield introduced the idea of a recurrent neural network that can serve as an associative memory. Hopfield’s work was crucial in showing how neural networks could exhibit emergent behavior, such as pattern recognition.
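
For the curious, here is a minimal sketch of what “associative memory” means in practice. It is a toy illustration under my own assumptions (a textbook Hebbian learning rule, two hand-picked 8-bit patterns, and hypothetical function names `train_hopfield` and `recall`), not code from Hopfield’s papers. The network stores the patterns and then restores one of them from a corrupted copy:

```python
def train_hopfield(patterns):
    """Build the weight matrix with the Hebbian rule (no self-connections)."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j] / n
    return weights


def recall(weights, state, max_sweeps=10):
    """Update one neuron at a time until the state stops changing."""
    state = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            # Each neuron takes the sign of its weighted input.
            field = sum(w * s for w, s in zip(weights[i], state))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Two illustrative patterns with values +1 / -1 (chosen for this example).
stored = [
    [1, -1, 1, -1, 1, -1, 1, -1],
    [1, 1, 1, 1, -1, -1, -1, -1],
]
weights = train_hopfield(stored)

# Corrupt the first pattern by flipping one value, then let the network repair it.
noisy = list(stored[0])
noisy[0] = -noisy[0]
recovered = recall(weights, noisy)
print(recovered == stored[0])  # True
```

The stored patterns act as stable states of the network, so a nearby noisy input settles into the closest one. That is the “memory” part: you retrieve the whole pattern from a partial or damaged cue.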

My take: Hopfield and Hinton approached neural networks taking inspiration not only from the brain but also from central notions in physics such as energy, temperature, system dynamics, energy barriers, and the role of randomness and noise, connecting the local properties of, e.g., atoms or neurons to global ones like entropy and attractors. With an entire world about to change in its foundations thanks to AI, it was about time to give Hopfield and Hinton the recognition they deserve!

Nobel Prize in Chemistry to Baker, Hassabis and Jumper for AlphaFold

https://www.nobelprize.org/prizes/chemistry/2024/press-release

The News:

- This year’s Nobel Prize in Chemistry went to David Baker for computational protein design, and to Demis Hassabis and John Jumper for protein structure prediction with AlphaFold.

- I reported on AlphaFold 3 back in May this year where I wrote that “By accurately predicting protein structures, this AI model could drastically reduce the time and resources required for drug discovery, enabling more efficient and targeted research. This innovation has the potential to transform the pharmaceutical industry, facilitating the development of new therapies and advancing our understanding of complex biological processes.”

My take: AlphaFold is the result of truly amazing work by Hassabis and Jumper, and together with Baker’s work on protein design it has the potential to change the entire medical industry forever. Being able to design protein structures for a perfect match is a groundbreaking approach, and we should soon begin to see major innovations as a result.