Last week OpenAI secured over $10 billion, projecting over $100 billion in revenue by 2029, which is roughly what Nestlé and Target each make per year. To reach those figures in five years, OpenAI needs to provide something people value as much as food and clothes.

AGI might be such a thing, and maybe this is what they will deliver in five years. If this is the case then our society will change much faster than anyone could ever have anticipated, and the current investment round of $6.6 Billion will seem small in comparison.

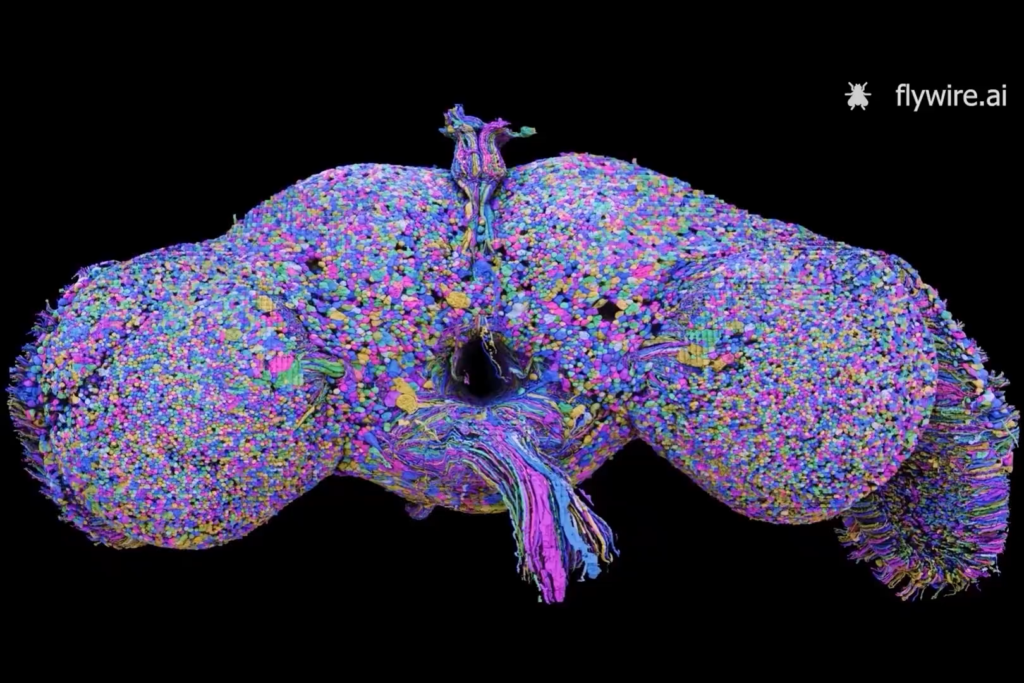

In other news, scientists have now mapped all 140,000 neurons in the fruit fly brain. Just five years ago, scientists mapped the nervous system of a tiny worm with just 385 neurons. Assuming that exponential growth continues, researchers should theoretically be able to map the entire human brain in about 13 years. Amazing!

THIS WEEK’S NEWS:

- Scientists have Now Mapped All 140,000 Neurons in the Fruit Fly Brain

- Sony and Raspberry Pi Launch the Raspberry Pi AI Camera

- OpenAI Launches Canvas – a New Way to Write and Code

- OpenAI Secures $6.6B + $4B = $10.6 Billion in Funding

- OpenAI DevDay 2024: Four Major Announcements

- Anthropic Introduces Contextual Retrieval to Enhance RAG Systems

- Microsoft Upgrades Copilot with Voice and Vision

- Black Forest Labs Launches Flux 1.1: Faster & Better

- Pika Labs Releases 1.5 with Cinematic Shots and Pikaffects

- Meta Announces Meta Movie Gen – Not Coming Anytime Soon

- Elon Musk’s xAI Promises to Open Source All Models

Scientists have Now Mapped All 140,000 Neurons in the Fruit Fly Brain

https://www.nytimes.com/2024/10/02/science/fruit-fly-brain-mapped.html

The News:

- A fruit fly’s brain is smaller than 1 millimeter, but it packs tremendous complexity. Over 140,000 neurons are joined together by more than 150 meters of wiring.

- Hundreds of scientists mapped out those connections in stunning detail in a series of papers published last Wednesday in the journal Nature. Researchers have studied the nervous system of the fruit fly for generations.

- Previously, a tiny worm was the only adult animal to have had its brain entirely reconstructed, with just 385 neurons in its entire nervous system. The new fly map is “the first time we’ve had a complete map of any complex brain,” said Mala Murthy, a neurobiologist at Princeton who helped lead the effort.

My take: The previous animal to be fully reconstructed, a tiny worm with just 385 neurons in its entire nervous system, was mapped five years ago. If progress continues at that exponential pace, we should theoretically be able to map the entire human brain, with its 86 billion neurons, in about 13 years. So sometime around the year 2040 I wouldn’t be surprised if we have mapped the entire human brain. Maybe faster if we manage to get AGI in that time frame.
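For readers who want to check the extrapolation, here is a minimal sketch of the arithmetic. It assumes a constant exponential growth rate fitted to only the two data points mentioned above (385 neurons five years ago, 140,000 today), which is a very rough assumption and not a forecast method used by the researchers.

```python
import math

# Figures quoted in this section
worm_neurons = 385
fly_neurons = 140_000
human_neurons = 86e9
years_between = 5  # worm map to fly map

# Assumption: the number of mappable neurons grows by a constant factor per year
growth_per_year = (fly_neurons / worm_neurons) ** (1 / years_between)

# Years needed to go from fly scale to human scale at that same rate
years_to_human = math.log(human_neurons / fly_neurons) / math.log(growth_per_year)

print(f"Growth factor per year: {growth_per_year:.2f}x")
print(f"Years from fly to human brain: {years_to_human:.1f}")
```

With these inputs the naive fit lands at a little over 11 years, in the same range as the roughly 13 years estimated above. The exact number shifts with the assumed start date and neuron counts.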

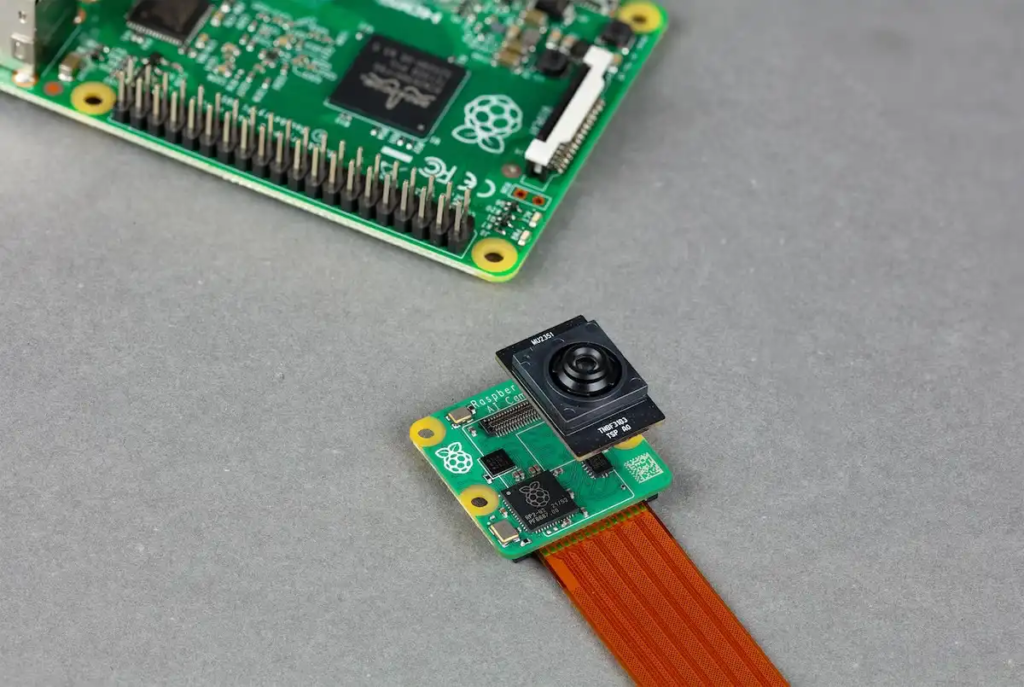

Sony and Raspberry Pi Launch the Raspberry Pi AI Camera

https://www.raspberrypi.com/products/ai-camera

The News:

- Sony Semiconductor Solutions Corporation (SSS) and Raspberry Pi last week announced that they are launching a jointly developed AI camera.

- The AI Camera is powered by SSS’s IMX500 intelligent vision sensor, which is capable of on-chip AI image processing, and enables Raspberry Pi users to easily develop edge AI solutions that process visual data.

- The camera is pre-loaded with the popular MobileNetSSD object detection framework.

- The Raspberry Pi AI Camera is available for purchase immediately for a suggested retail price of $70.00.

What you might have missed: If you try to run an object detection framework like YOLO v8 on the latest Raspberry Pi 5 8GB, you will be able to process at most 1-2 frames per second. If you add an AI accelerator like the Raspberry Pi AI Kit using Hailo PCIe Gen 3, you will get up to 80 frames per second. However, that setup is costly, large and draws a lot of power, which is why this small AI Camera was launched.

My take: The main benefit of the Raspberry Pi AI Camera is that it works with ALL Raspberry Pi models using the standard camera connector cable. However, if you want the best object detection performance on a Raspberry Pi, you should use YOLO v8, Raspberry Pi 5 and the Raspberry Pi AI Kit.

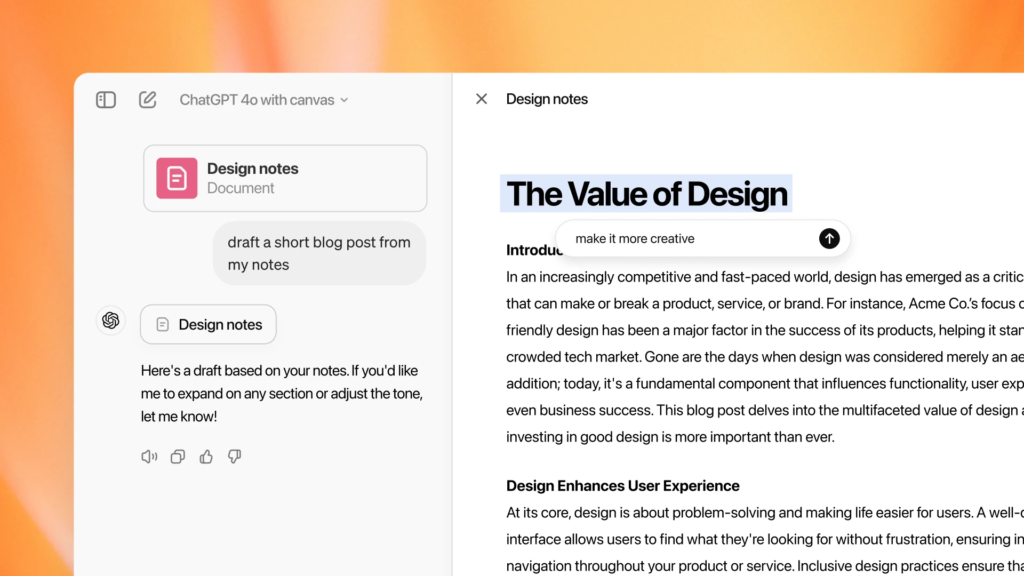

OpenAI Launches Canvas – a New Way to Write and Code

https://openai.com/index/introducing-canvas

The News:

- OpenAI just launched Canvas, a collaborative interface where you can interact with sections of the generated content, adjust length and complexity, debug code, and much more.

- Canvas opens in a separate window next to the chat, similar to the Artifacts feature in Claude.

- The way you can interact with sections of the generated text or code is very similar to how you work in the Cursor editor.

- Canvas is initially rolling out to Plus and Team users.

What you might have missed: When fine-tuning GPT for Canvas, OpenAI used o1-preview to post-train the model for its core behaviors. “We measured progress with over 20 automated internal evaluations. We used novel synthetic data generation techniques, such as distilling outputs from OpenAI o1-preview, to post-train the model for its core behaviors.”

My take: If you are used to the process of (1) generating a text with ChatGPT, (2) asking it to change something, (3) waiting for it to rewrite it all, and then repeating, you are in for a treat. Create the text once, then pinpoint exactly what you want to change and how you want it to be changed. This is very much how the development environment Cursor works, and I think it will be a game changer for how people work with the results from large language models going forward.

OpenAI Secures $6.6B + $4B = $10.6 Billion in Funding

https://openai.com/index/scale-the-benefits-of-ai

The News:

- OpenAI just closed their latest funding round at $6.6 billion, valuing the company at $157 billion. This is the largest VC round of all time.

- In addition to securing $6.6 billion, OpenAI also established a new $4 billion credit facility with JPMorgan Chase, Citi, Goldman Sachs, Morgan Stanley, Santander, Wells Fargo, SMBC, UBS, and HSBC.

- OpenAI currently generates $3.6 billion in revenue, but projected losses of more than $5 billion are set to outpace revenue according to Reuters.

- OpenAI expects revenues to increase to $25 billion by 2026, and $100 billion by 2029 according to investor documents.

What you might have missed: Apparently OpenAI asked investors to not back rival start-ups such as Anthropic and Elon Musk’s xAI, resulting in Elon Musk posting “OpenAI is evil” on X.

My take: One can only hope that their losses do not scale at the same pace as the projected revenue growth, which would mean a loss of over $100 billion in 2029. While OpenAI aims to “make advanced intelligence a widely accessible resource”, it’s very clear that this resource will not come for free. The only way I can see OpenAI making $100 billion in revenue is if they have invented AGI and it can truly replace human workers. You could then charge virtually anything for it. Continuing to sell monthly Chat licenses and API access tokens will never generate that amount of money.
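To put the projection in perspective, here is a quick back-of-the-envelope sketch using only the figures quoted above. It assumes 2024 as the base year for the $3.6 billion revenue figure and rounds the loss down to $5 billion; both are simplifications for illustration.

```python
# Figures quoted in this section, in billions of USD
revenue_2024 = 3.6    # assumption: "currently" means full-year 2024
loss_2024 = 5.0       # "more than $5 billion", rounded down
revenue_2029 = 100.0
years = 2029 - 2024

# How many times larger revenue must become, and the implied yearly growth
growth_multiple = revenue_2029 / revenue_2024
annual_growth = growth_multiple ** (1 / years) - 1

# What the loss would be if it scaled at the same pace as revenue
loss_2029_if_scaled = loss_2024 * growth_multiple

print(f"Revenue multiple needed: {growth_multiple:.1f}x")
print(f"Implied annual growth: {annual_growth:.0%}")
print(f"Loss in 2029 if scaled equally: ${loss_2029_if_scaled:.0f}B")
```

In other words, revenue would need to nearly double every year for five years, and losses scaling at the same pace would end up well above $100 billion.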

OpenAI DevDay 2024: Four Major Announcements

The News:

- At the OpenAI DevDay 2024 the company presented four major announcements:

- Realtime API: OpenAI Realtime API can be used to build “low-latency, multimodal experiences. Similar to ChatGPT’s Advanced Voice Mode, the Realtime API supports natural speech-to-speech conversations using the six preset voices already supported in the API.”

- Vision Model Fine-Tuning: OpenAI Vision Fine-Tuning on GPT-4o “makes it possible to fine-tune with images, in addition to text. Developers can customize the model to have stronger image understanding capabilities which enables applications like enhanced visual search functionality, improved object detection for autonomous vehicles or smart cities, and more accurate medical image analysis.”

- Model Distillation: OpenAI Model Distillation “lets developers easily use the outputs of frontier models like o1-preview and GPT-4o to fine-tune and improve the performance of more cost-efficient models like GPT-4o mini.”

- Prompt Caching: OpenAI Prompt Caching “allows developers to reduce costs and latency. By reusing recently seen input tokens, developers can get a 50% discount and up to 80% faster prompt processing times.”

My take: Anthropic (Claude) launched prompt caching in August and it was a huge success. The difference with OpenAI prompt caching is that the implementation by OpenAI is applied automatically, making calls cheaper without any additional work by the developer. The Realtime API looks really good, and being able to fine-tune GPT-4o with your own images is fantastic news.
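To get a feel for what the 50% discount means in practice, here is a small illustrative calculation. The price per million tokens and the token counts are hypothetical placeholders, not OpenAI's actual pricing, and the function `prompt_cost` is made up for this sketch.

```python
def prompt_cost(prompt_tokens, cached_tokens, price_per_million, cached_discount=0.5):
    """Input cost of one request when part of the prompt is served from cache."""
    uncached_tokens = prompt_tokens - cached_tokens
    # Cached tokens are billed at a reduced rate (50% off per the announcement)
    billable_tokens = uncached_tokens + cached_tokens * (1 - cached_discount)
    return billable_tokens * price_per_million / 1_000_000

PRICE = 2.50  # hypothetical USD per million input tokens

# A 10,000-token prompt where 8,000 tokens (for example a long system prompt) repeat
without_cache = prompt_cost(10_000, 0, PRICE)
with_cache = prompt_cost(10_000, 8_000, PRICE)
saving = 1 - with_cache / without_cache

print(f"Without cache: ${without_cache:.4f}")
print(f"With cache:    ${with_cache:.4f}")
print(f"Saving:        {saving:.0%}")
```

The takeaway is that the overall saving depends on how much of each prompt repeats: the discount only applies to the cached part, so in this example the input cost drops by 40% rather than the full 50%.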

Anthropic Introduces Contextual Retrieval to Enhance RAG Systems

https://www.anthropic.com/news/contextual-retrieval

The News:

- Anthropic introduced a new method called “Contextual Retrieval” that significantly improves the performance of Retrieval-Augmented Generation (RAG) systems. This technique addresses a common limitation in traditional RAG implementations where context is often lost when splitting documents into smaller chunks.

- The new method can reduce failed retrievals by 49% compared to standard RAG systems, and when combined with reranking, failed retrievals can be reduced by up to 67%.

- If you want to explore this technique, Anthropic has released a cookbook for developers to easily implement Contextual Retrieval with Claude.

My take: Being able to maintain the context when retrieving information is critical for many applications, from customer support chatbots to legal research tools. Thanks to prompt caching, the cost of Contextual Retrieval is kept to a minimum, meaning that if you use RAG today you should definitely check it out!
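If you want to see the core idea in a few lines, here is a toy sketch. It is not Anthropic's implementation: the function `generate_context` is a hypothetical stub standing in for the LLM call that writes a short, chunk-specific context, and simple word overlap stands in for the embedding and BM25 search a real RAG system would use. The example documents are made up.

```python
import re

def tokenize(text):
    """Lowercase word tokens; a toy stand-in for BM25 or embedding features."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def generate_context(doc_title, chunk):
    """Hypothetical stub: in Contextual Retrieval an LLM reads the whole
    document plus the chunk and writes this situating sentence."""
    return f"This chunk is from {doc_title}."

def build_index(docs, contextual):
    """Index every chunk, optionally with its generated context prepended."""
    index = []
    for title, chunks in docs.items():
        for chunk in chunks:
            text = f"{generate_context(title, chunk)} {chunk}" if contextual else chunk
            index.append((chunk, tokenize(text)))
    return index

def scores(index, query):
    """Word-overlap score of the query against each indexed chunk."""
    q = tokenize(query)
    return [(chunk, len(q & tokens)) for chunk, tokens in index]

docs = {
    "the Globex Q2 2023 financial report": [
        "The company's revenue fell by 1% over the previous quarter."
    ],
    "the ACME Corp Q2 2023 financial report": [
        "The company's revenue grew by 3% over the previous quarter."
    ],
}
query = "How much did ACME revenue grow?"

plain_scores = scores(build_index(docs, contextual=False), query)
contextual_scores = scores(build_index(docs, contextual=True), query)
best_contextual = max(contextual_scores, key=lambda item: item[1])[0]

print("Plain:", plain_scores)
print("Contextual:", contextual_scores)
print("Best match with context:", best_contextual)
```

Without context, both chunks look identical to the query because neither mentions the company name. With the context prepended, the ACME chunk clearly wins, which is the problem Contextual Retrieval is designed to solve.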

Microsoft Upgrades Copilot with Voice and Vision

https://blogs.microsoft.com/blog/2024/10/01/an-ai-companion-for-everyone

The News:

- Microsoft just announced numerous upgrades to their Copilot Assistant that is built into Windows PCs:

- Copilot Voice: Allows users to interact with Copilot using natural speech.

- Copilot Vision for Edge browser: Allows the AI to understand what the user is viewing, and can “answer questions about its content, suggest next steps and help you without disrupting your workflow.”

- Think Deeper: Gives Copilot enhanced reasoning capabilities using the chain-of-thought reasoning introduced with the latest OpenAI o1 model.

My take: While Microsoft never mentions it, I think it’s clear that they are using the latest OpenAI APIs announced on the same day: Realtime Voice, Vision Fine-Tuning and Prompt Caching. Microsoft is really going all-in with their OpenAI collaboration, and it will be interesting to see how people react to this. Where Apple specifically asks if you want to send information to OpenAI if it cannot answer requests using their own privacy-protected models, Microsoft is basing their entire AI strategy around OpenAI. And as usual with these new AI releases, they are not available for European citizens.

Black Forest Labs Launches Flux 1.1: Faster & Better

https://blackforestlabs.ai/announcing-flux-1-1-pro-and-the-bfl-api/

The News:

- Flux 1 Pro is one of the best text-to-image AI models available, and version 1.1 is now up to six times faster, while also improving quality and prompt adherence.

- Flux 1.1 Pro is a paid model and is available from partners like FAL AI, Freepik, Replicate or Together AI. It is not available through xAI Grok.

My take: It’s definitely much better, but it still has problems counting fingers (as can be seen in the image above). I experienced very mixed results: most of the time the output looked like something out of a computer game, and not realistic at all. It would be very interesting to hear if you have better luck with it!

Pika Labs Releases 1.5 with Cinematic Shots and Pikaffects

The News:

- Pika Labs, one of the first startups to launch their own AI video generation model, launched their first text-to-video tool Pika 1.0 in December 2023.

- Last week Pika Labs announced Pika 1.5, a significantly updated version offering physics, special effects (or “Pikaffects”) and much more!

- These effects are really something else, like cake-ify, crush it, squish it, and more, so if you have a minute click on one of the links below to check them out.

My take: Now this was a new and creative approach to generative video! Upload an image – and in comes a hand or a knife and starts interacting with it. You should definitely check out the examples below, they are wild!

Read more:

- 10 wild examples created with Pika 1.5 – X

- PIKA 1.5 IS HERE! – YouTube

- Pika 1.5 Explodes, Kling Talks, & Minimax Sees! – YouTube

Meta Announces Meta Movie Gen – Not Coming Anytime Soon

https://ai.meta.com/research/movie-gen

The News:

- Meta just announced their upcoming video generator “Meta Movie Gen”. Features include:

- Up to 16 seconds of continuous video generation.

- Precise editing – compared to other text-to-video engines that only do style transfer.

- State-of-the-art video conditioned audio.

What you might have missed: A few hours after the release, Chris Cox, CPO at Meta, posted on Threads that “We aren’t ready to release this as a product anytime soon — it’s still expensive and generation time is too long — but we wanted to share where we are since the results are getting quite impressive.”

My take: Meta Movie Gen looks good, and so does Sora. However, as we have seen with ChatGPT evolving over the past year with memory, canvas, advanced voice mode and more, it’s clear that entirely new paradigms need to be created to interact and work with these models. It will most probably be the same with text-to-video engines; we have already seen examples of this with the Kling Motion Brush released two weeks ago. So once Meta Movie Gen and Sora are released, I expect at least a year before they are fully effective as daily tools and optimized for production work.

Elon Musk’s xAI Promises to Open Source All Models

https://twitter.com/elonmusk/status/1842248588149117013

The News:

- In a recent tweet by Elon Musk, he writes that xAI “has been and will open source its models, including weights and everything. As we create the next version, we open source the prior version, as we did with Grok 1 when Grok 2 was released.”

My take: It sounds good. However, Grok 1.0 (released in November 2023) was not open sourced when Grok 1.5 was released on March 28 this year; it was open sourced when Grok 2.0 was released in August. This means that if xAI manages to surpass the competition with one of their upcoming models, they can decide to delay open sourcing the previous model by just keeping the same major number and pushing version increments. Also, as can be seen with the pricing in recent months, the value of the “previous model” is very quickly approaching zero for all companies.