If you live outside the EU and UK, everything you have ever written and posted to LinkedIn has now been used by LinkedIn and their affiliate partners to train new AI models, and there is nothing you could have done to prevent this. Last week LinkedIn quietly announced that they have trained new AI models on the data of all their users. Through a complex two-step opt-out process you can prevent LinkedIn and its affiliates from using any new data or content to train their models going forward, but you cannot “affect any training that has already taken place”. This is a prime example of how NOT to roll out AI model training, and the criticism has been enormous. Hopefully other companies will learn from this and use opt-in going forward.

THIS WEEK’S NEWS:

- LinkedIn is Training AI Models on Your Data

- Kling Launches Text-to-Video 1.5 with Motion Brush

- TikTok Owner ByteDance is Developing Their Own AI GPUs

- Lionsgate Partners With Runway for GenAI

- YouTube Launches AI-Powered Creation Tools

- Google Uses AI to Understand Whales

- Google Launches Open Buildings – a Dataset for Social Good Applications

- Cruise Robotaxis Return to Bay Area

- Microsoft Announces “Microsoft 365 Copilot Wave 2”

LinkedIn is Training AI Models on Your Data

https://www.theverge.com/2024/9/18/24248471/linkedin-ai-training-user-accounts-data-opt-in

The News:

- Last week LinkedIn “quietly” introduced AI model training on all user data. To fully prevent future data use, LinkedIn users must opt out manually via account settings and also fill out a separate LinkedIn Data Processing Objection Form.

- LinkedIn writes on a help page that it uses generative AI for purposes like writing assistant features.

- According to LinkedIn: “Opting out means that LinkedIn and its affiliates won’t use your personal data or content on LinkedIn to train models going forward, but does not affect training that has already taken place.” This means that (1) LinkedIn is also giving your personal data and content to affiliate partners, and (2) that there was no chance for anyone to opt out before any AI models were trained on user content.

- According to the LinkedIn and generative AI (GAI) FAQ, LinkedIn does not currently train content-generating AI models on data from members located in the EU, EEA, UK, or Switzerland (note the word “currently”).

My take: This is a very good example of how NOT to roll out AI model training on user data. The PCWorld article linked below has the subtitle “Thought you couldn’t hate LinkedIn more? You were wrong.” As a LinkedIn content creator, my greatest concern is that people will abandon LinkedIn completely instead of opting out. What’s your reaction to this?

Read more:

- LinkedIn scraped user data for training before updating its terms of service | TechCrunch

- LinkedIn is training AI with your data. Here’s how to opt out ASAP | PCWorld

Kling Launches Text-to-Video 1.5 with Motion Brush

https://klingai.com/release-notes

The News:

- Kling just released version 1.5 of their very popular text-to-video AI model. New features include:

- 1080p HD Video Generation, you can now generate videos in full 1080p HD resolution.

- Motion Brush, allowing users to define the movement of individual elements within an image, providing detailed control over movements and performance in generated videos.

- High-Quality Mode, a new mode available at no additional cost that brings major quality improvements.

My take: Kling 1.0 was one of the first publicly available text-to-video generators, and the results you got from it were almost like playing the lottery: most of the time you were disappointed. Kling 1.5, however, is much better, both in quality and in the control you have over what happens in the video. These tools are evolving at a massive speed, and with tools like Motion Brush it’s very apparent that we are witnessing a whole new content creation toolchain slowly forming into a complete work package.


TikTok Owner ByteDance is Developing Their Own AI GPUs

The News:

- ByteDance, the owner of TikTok, has reportedly spent over $2 billion on more than 200,000 Nvidia H20 GPUs for use in their products.

- According to news outlet The Information, ByteDance is now developing two AI GPUs that will enter mass production by 2026, manufactured by TSMC.

- One GPU will be designed for training and the other for inference. According to the report, the chips are expected to be designed by Broadcom.

My take: As the need for AI GPUs continues to grow, I expect many more companies to launch their own GPUs for training and inference within the next 2-3 years. It remains to be seen how this will affect Nvidia. Judging by its current stock price, most people seem to believe it will continue growing without any real competition for the next 5-10 years.

Lionsgate Partners With Runway for GenAI

https://runwayml.com/news/runway-partners-with-lionsgate

The News:

- Lionsgate, the film company behind The Hunger Games, John Wick, and Saw, has teamed up with Runway to create a custom AI video generation model trained on Lionsgate’s film catalogue.

- “Runway is a visionary, best-in-class partner who will help us utilize AI to develop cutting edge, capital efficient content creation opportunities,” said Lionsgate Vice Chair Michael Burns. “Several of our filmmakers are already excited about its potential applications to their pre-production and post-production process. We view AI as a great tool for augmenting, enhancing and supplementing our current operations.”

- Runway is considering ways to offer similar custom-trained models as templates for individual creators, expanding access to AI-powered filmmaking tools beyond major studios.

My take: This is one of the first major collaborations between an AI startup and a major Hollywood company, and if successful it will change the way movies are produced forever.

YouTube Launches AI-Powered Creation Tools

https://blog.youtube/news-and-events/made-on-youtube-2024

The News:

- YouTube just announced several new AI tools designed to assist video creators, including a new text-to-video generation tool and automated audio dubbing.

- Veo is Google’s new video generation tool, allowing creators to generate 6-second video clips for YouTube Shorts from text prompts. The resulting video is watermarked using SynthID and labelled as AI-generated.

- A new tab in YouTube Studio called “Inspiration” now includes a “brainstorming buddy powered by generative AI”. It suggests video ideas, titles, thumbnails and outlines.

- Audio dubbing lets you generate translated audio tracks for your videos in different languages.

My take: These tools are great news for content creators, especially the audio dubbing feature. As an example, in France only 42.75% of the population understands English. Automatic audio dubbing means a much larger potential market for all content creators.

Google Uses AI to Understand Whales

The News:

- Google Research just announced a new “whale bioacoustics model” capable of identifying eight distinct species, including multiple calls for two of those species.

- Identifying whale sounds is extremely complex due to the broad acoustic range, which spans from 10 Hz for blue whales all the way up to 120 kHz for odontocetes (toothed whales). Recordings also vary dramatically by location and over time.

- The tool developed by Google is designed to track whale populations and movements through passive acoustic monitoring.

- The model is made available publicly for download at Kaggle.

My take: How do you track a whale? A GPS sensor would probably be the first choice, but we can now add passive acoustic monitoring as a second option. AI-based filtering, identification and analysis of sounds will only get better over time, and the potential use cases are enormous, especially for wildlife.

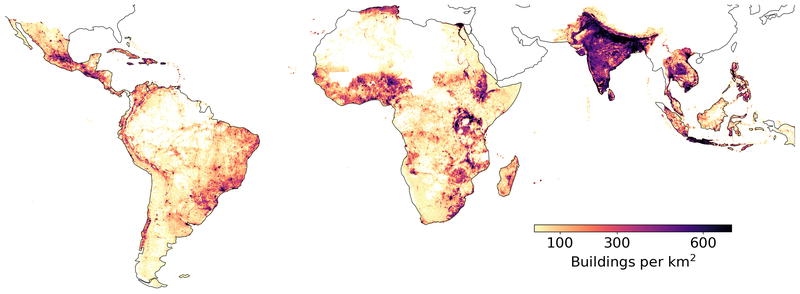

Google Launches Open Buildings – a Dataset for Social Good Applications

The News:

- Google just launched the Open Buildings 2.5D Temporal Dataset, a dataset that tracks building changes across the Global South from 2016 to 2023, including estimates of building presence, counts, and heights.

- Use cases include:

- Government agencies: Gain valuable insights into urban growth patterns to inform planning decisions and allocate resources effectively.

- Humanitarian organizations: Quickly assess the extent of built-up areas in disaster-stricken regions, enabling targeted aid delivery.

- Researchers: Track development trends, study the impact of urbanization on the environment, and model future scenarios with greater accuracy.

- The dataset can be freely downloaded at https://sites.research.google/gr/open-buildings/

My take: Google uses several innovations to achieve high-precision building tracking across all regions, and it’s worth spending a few minutes on their website going through the work they have done. By 2050 the world’s population is expected to increase by 2.5 billion, with nearly 90% of that growth occurring in cities across Asia and Africa. Tools like Open Buildings help plan for this growth, respond to crises, and understand the impact of urbanization.

Cruise Robotaxis Return to Bay Area

https://techcrunch.com/2024/09/19/cruise-avs-return-to-bay-area-year-after-pedestrian-crash

The News:

- Cruise is resuming operations in Sunnyvale and Mountain View, with human-driven vehicles for mapping and plans to progress to supervised AV testing later this fall.

- Cruise has issued software updates and signed a partnership with Uber for robotaxi services starting in 2025.

- The decision to bring Cruise’s autonomous Chevy Bolts back to the Bay Area comes just a few months after the company reached a settlement with California’s Public Utilities Commission, following a crash in October 2023.

My take: I believe 2025 will be the year of the autonomous robotaxi. I expect companies like Waymo and Cruise to expand their coverage month by month throughout 2025. By the end of next year they will probably cover such a large area that robotaxis will be one of the primary means of transportation in cities like San Francisco, Phoenix and Los Angeles.

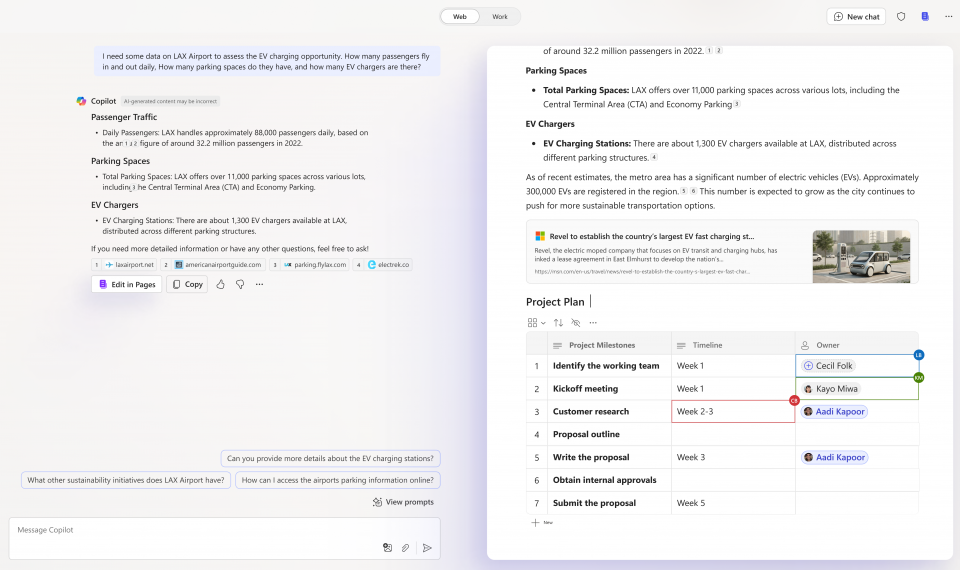

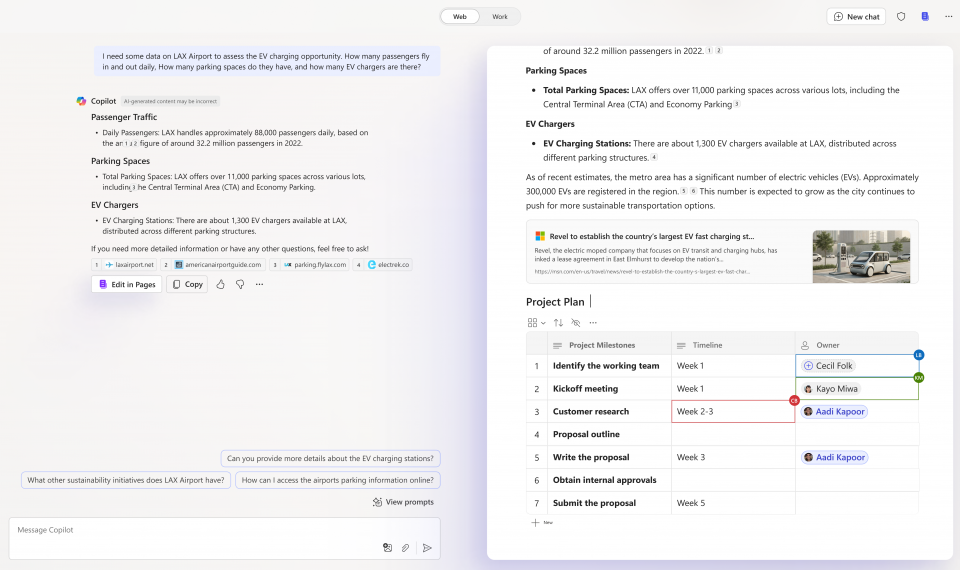

Microsoft Announces “Microsoft 365 Copilot Wave 2”

The News:

- Microsoft just launched “Microsoft 365 Copilot Wave 2” with improvements to Microsoft 365 apps and new features like Copilot Pages and Copilot agents.

- Copilot Pages is a dynamic, persistent canvas designed for multiplayer AI collaboration.

- Copilot Agents, a “no-code tool” for creating custom Copilot agents, makes it easy to automate and execute business processes.

- Copilot in Excel with Python, “combining the power of Python with Copilot in Excel”.

- Copilot in PowerPoint uses your prompt to build an outline with topics that you can edit and refine into a first draft of your presentation.

- Copilot in Teams can now reason over both the meeting transcript and the meeting chat to give you a complete picture of what was discussed.

- Copilot in Outlook analyzes your inbox based on the content of your email and the context of your role, such as who you report to and the email threads where you’ve been responsive.

My take: There are lots of great improvements across all Microsoft 365 apps, and it’s getting harder for anyone to avoid using AI on a daily basis. Within a year, I predict we will spend more time using AI than creating our own content. Whether that is a good or bad thing I leave up to you.

Read more: Microsoft’s Office apps are getting more useful Copilot AI features – The Verge