Thanks to Google Audio Overviews, you can now listen to this weekly newsletter as a podcast! If you have not yet experienced Audio Overviews, prepare to have your mind blown! 🤯 In fact, if you have limited time, skip reading this altogether and spend just 1 minute listening to the beginning of this podcast. It’s totally amazing!

Tech Insights Weekly | Podcast on Spotify

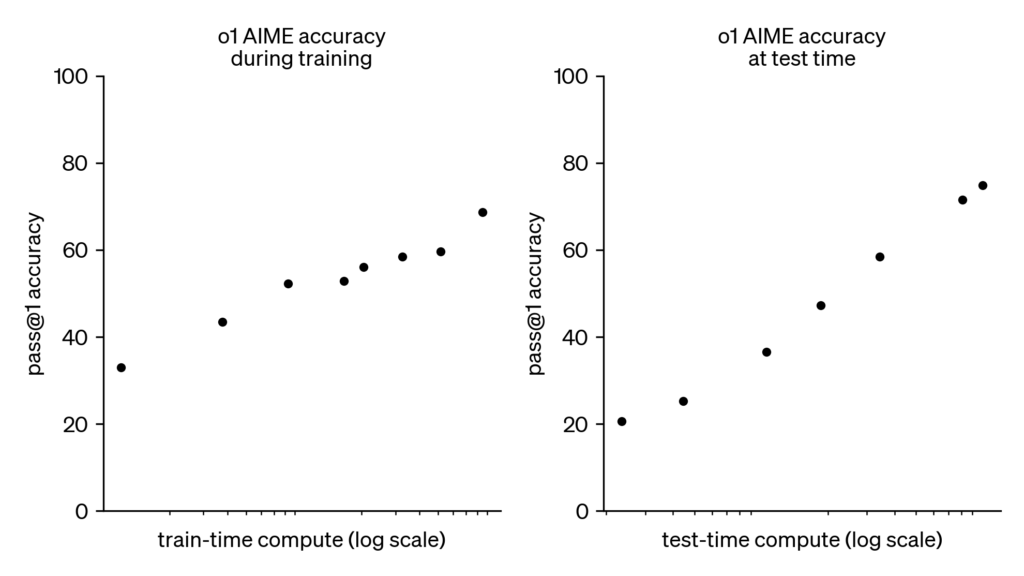

After many weeks of waiting for OpenAI’s next model (code-named ‘Strawberry’), it was finally released last week as OpenAI ‘o1’. o1 is the first in a new series of models that generate an extensive ‘chain of thought’ before they answer, and the more time they spend doing this (which OpenAI calls ‘test-time compute’), the better they perform. This means that we now have a new way to scale the performance of AI models – instead of stretching training time from months to years, we can increase the time spent on test-time compute. What does this mean in practice? Instead of responding in less than a second, the AI might take a few seconds to reply to difficult questions, but the quality of those answers will be far better than what was possible before. This is still not GPT-5, so expect the performance of o1 (or maybe o2) to increase significantly once Orion / GPT-5 is released in a few months!

THIS WEEK’S NEWS:

- OpenAI Releases o1 – a new ‘Reasoning’ AI model

- Google Launches Audio Overviews – Turn Documents to Podcasts

- Adobe Previews Adobe Firefly for Video

- Google Helps Robots Perform Dexterous Movements

- Runway Launches Gen3 Video-to-Video

- Roblox Launches Generative AI That Builds 3D Worlds

- Mistral Releases First Multimodal Model ‘Pixtral’

- Waymo Releases Safety Impact Report

OpenAI Releases o1 – a new ‘Reasoning’ AI model

https://openai.com/index/introducing-openai-o1-preview

The News:

- OpenAI has officially released its new model ‘o1’ as a preview to ChatGPT Plus and Team users. o1 was previously known as Project Strawberry/Q* and is the first AI model with advanced ‘reasoning’ capabilities.

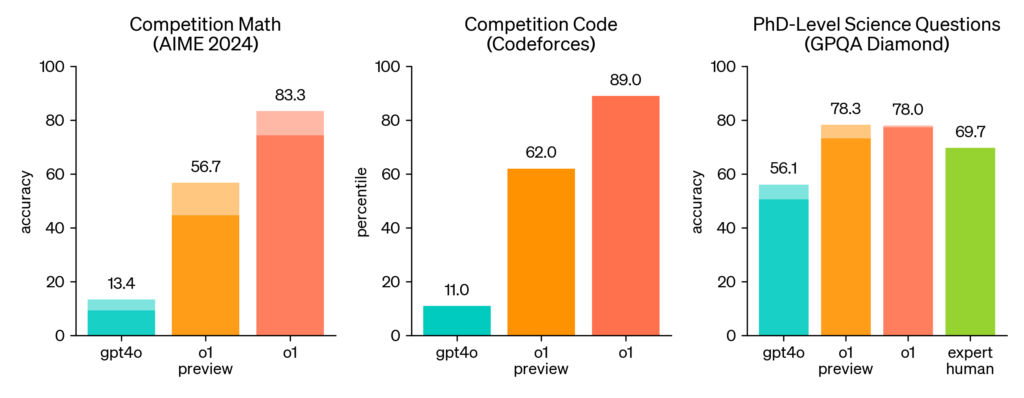

- The model solved 83% of International Mathematics Olympiad qualifying exam problems, compared to GPT-4o’s 13% (see figure above).

- According to OpenAI: “o1 ranks in the 89th percentile on competitive programming questions (Codeforces), places among the top 500 students in the US in a qualifier for the USA Math Olympiad (AIME), and exceeds human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems (GPQA).”

- o1 uses reinforcement learning and chain-of-thought processing, mimicking human problem-solving. OpenAI calls the compute spent on this “test-time compute” or “inference-time compute”, as opposed to the compute used to train the model, and according to Noam Brown at OpenAI it is a new “scaling paradigm” for LLMs.

My take: I tried o1 over the weekend, sending in fairly complex software programs I have built with Claude 3.5 and asking for improvements. o1 came up with several suggestions related to program flow, changed some functions to work better with newer versions of JavaScript, and suggested fixes for potential security issues that Claude did not consider. o1 is an amazing and intriguing beast – in my very limited experience Claude still generates better source code with the right prompts, but a combination of Claude 3.5 and o1 is what I will be using going forward, with o1 as the “senior architect” reviewing complex code sections written by Claude before they are committed.

Read more:

- Learning to Reason with LLMs | OpenAI

- x – o1 solves a complex logic puzzle

- x – Using o1 and Cursor to create an iOS app in under 10 mins

- x – Using o1 to create a 3D version of Snake in under a minute! 🐍

Google Launches Audio Overviews – Turn Documents to Podcasts

https://blog.google/technology/ai/notebooklm-audio-overviews

The News:

- Google has launched Audio Overviews, which turns notes, PDFs, Google Docs, Slides, and other documents into AI-generated audio discussions between two virtual AI hosts.

- Audio Overviews is a part of NotebookLM, an AI-powered research and writing assistant.

- Audio Overviews creates a “deep dive” conversation from uploaded sources, with AI hosts summarizing content and connecting topics across materials.

- NotebookLM can process up to 50 sources, each up to 500,000 words, allowing for a total of 25 million words to be considered when generating the audio.
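For the curious, the 25 million figure is simply the two limits multiplied together. A minimal sketch of that arithmetic follows; the constant and function names are made up for illustration and are not part of any NotebookLM API.

```python
# Limits as quoted in the news item above.
MAX_SOURCES = 50
MAX_WORDS_PER_SOURCE = 500_000


def max_total_words(sources: int = MAX_SOURCES,
                    words_per_source: int = MAX_WORDS_PER_SOURCE) -> int:
    """Upper bound on the words one notebook can draw on (hypothetical helper)."""
    return sources * words_per_source


print(f"{max_total_words():,} words")  # 25,000,000 words
```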

My take: Wow! Just WOW! Take one minute of your day and listen to this generated podcast by Rowan Cheung using NotebookLM. Amazing! For students this will be a groundbreaking new way to learn things while on the move!

Adobe Previews Adobe Firefly for Video

The News:

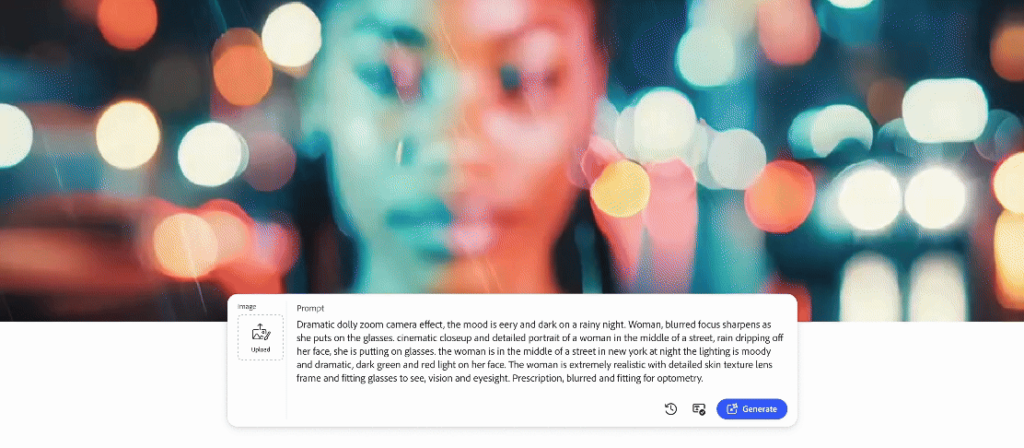

- Adobe has announced Adobe Firefly for Video, allowing you to use text prompts, a wide variety of camera controls, and reference images to generate B-Roll that seamlessly fills gaps in your timeline.

- Adobe Firefly for Video supports rich camera controls, like angle, motion and zoom to create different perspectives on the generated videos.

- Adobe Firefly for Video is designed to be “commercially safe” and will be available in beta later this year.

My take: The samples shown by Adobe are truly outstanding, and I believe this will revolutionize movie editing going forward. Being able to extend clips or fill out gaps in the timeline using generative AI is not only a time saver but can sometimes save an entire section, and it will be interesting to see how other applications like Resolve and Final Cut will approach this going forward.

Read more:

- If you have time, check out the Firefly for Video examples at the Adobe Blog; they are truly outstanding!

Google Helps Robots Perform Dexterous Movements

https://deepmind.google/discover/blog/advances-in-robot-dexterity

The News:

- Google DeepMind has launched two new AI systems, ALOHA Unleashed and DemoStart, that help robots learn to perform complex tasks that require dexterous movement.

- Until now, most advanced AI robots have only been able to pick up and place objects using a single arm. ALOHA Unleashed helps robots learn to perform complex and novel two-armed manipulation tasks; and DemoStart uses simulations to improve real-world performance on a multi-fingered robotic hand.

- With this new method, the Google DeepMind robot learned to tie a shoelace, hang a shirt, repair another robot, insert a gear and even clean a kitchen.

My take: We are quickly approaching a future where robots can do most physical and repeatable tasks that humans can do, much faster and with fewer errors. ALOHA Unleashed and DemoStart are two important pieces of this puzzle, and if you have time, do check out the demos at the link above. Very impressive!

Runway Launches Gen3 Video-to-Video

https://twitter.com/runwayml/status/1834711758335779300

The News:

- Runway just launched Gen-3 Alpha Video-to-Video, which they describe as “a new control mechanism for precise movement, expressiveness and intent within generations.”

- To use Video to Video, simply upload your input video, prompt in any aesthetic direction you like, or choose from a collection of preset styles.

- Runway Video-to-Video is currently limited to 3-second video clips, but the results are truly outstanding!

My take: Runway might not be the best text-to-video generator, but all the features they have added to the platform in recent months make it a very compelling package. Do check out their announcement thread on X; they have posted lots of really good-looking examples.

Roblox Launches Generative AI That Builds 3D Worlds

The News:

- Roblox just announced plans to roll out a generative AI tool that will let creators make whole 3D scenes just using text prompts.

- Although developers can already create scenes manually in the platform’s creator studio, Roblox claims its new generative AI model will make the changes happen in a fraction of the time.

- Roblox plans to open-source its 3D foundation model so that it can be modified and used as a basis for innovation. “We’re doing it in open source, which means anybody, including our competitors, can use this model,” says Anupam Singh, vice president of AI and growth engineering at Roblox.

My take: This is amazing news for everyone who wants to create their own Roblox game but has been stuck trying to build their first 3D environment for testing. I can see this becoming widely used both by young engineers who are starting out and want to learn programming, and during the prototype phase of new games, where you don’t care so much about the way things look but instead are fully focused on gameplay. Releasing this as open source is also a very welcome bonus.

Mistral Releases First Multimodal Model ‘Pixtral’

https://techcrunch.com/2024/09/11/mistral-releases-pixtral-its-first-multimodal-model

The News:

- Three months ago, French AI startup Mistral closed a $645 million funding round at a $6 billion valuation, positioning it as one of the major players in the European AI landscape.

- Last week at the Mistral AI Summit, Mistral launched Pixtral 12B, their first multimodal AI model, at about 24 GB in size.

- Pixtral 12B is released under the Apache 2.0 license, and can be downloaded, fine-tuned and used without restrictions via GitHub and Hugging Face.

- Pixtral 12B allows for images up to 1024×1024 pixels.
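A quick back-of-the-envelope check on the “about 24 GB” figure: if we assume the weights are stored as 16-bit values (2 bytes per parameter) and take “12B” to mean a round 12 billion parameters, the numbers line up. Both of those are assumptions on my part, not something stated in the announcement, and the helper name below is made up for illustration.

```python
def model_size_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Rough size of the weights in (decimal) gigabytes.

    Assumes every parameter uses the same number of bytes;
    2 bytes corresponds to 16-bit weights (an assumption).
    """
    return num_params * bytes_per_param / 1e9


print(model_size_gb(12e9))  # 24.0
```

With the same sketch, a 4-bit quantized copy (0.5 bytes per parameter) would come out at roughly a quarter of that size.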

My take: There have been lots of great releases from Mistral recently. Mistral Large 2 matches both Llama 3.1 and Gemini 1.5 Pro in performance, and since Pixtral is fully open source I can definitely see it being used in a wide range of applications.

Waymo Releases Safety Impact Report

https://waymo.com/safety/impact

The News:

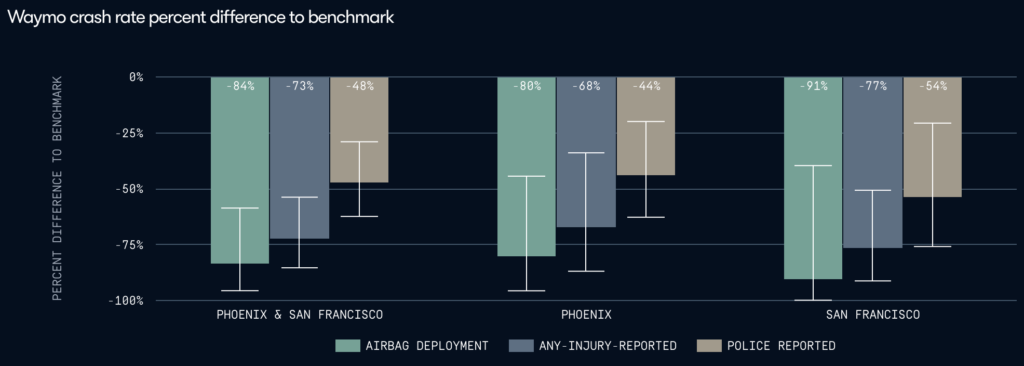

- Waymo has released an analysis of its own safety data, showing that the company’s self-driving cars are safer than human drivers on the same roads:

- Waymo vehicles had 48 percent fewer incidents that were reported to the police than vehicles driven by humans.

- Waymo vehicles had 73 percent fewer incidents that caused injuries than vehicles driven by humans.

- Waymo vehicles deployed airbags 84 percent less frequently than vehicles driven by humans.

My take: Waymo’s Safety Impact Report indicates that the biggest benefit of autonomous vehicles may be the extra safety. A few years ago, most people were mainly concerned about whether autonomous vehicles would ever be “as good” as human drivers; the robotaxis operated by Waymo today are clearly much better, reducing the risk of accidents significantly. More data like this is what we need to get autonomous vehicles approved in more countries, especially in Europe.
