Would you trust a robot to fix your dental issues? Maybe I am in the minority, but I would definitely try it, especially if it could significantly reduce the time you need to sit still in that chair while someone is drilling in your mouth. Dental robots are coming, and you can read more about them below. Also, Flux from Black Forest Labs, which I wrote about last week, has been released, and wow, the images it creates really are as good as promised. You can see two generated photos below and try it out online for free. Finally, we now have a language model that can listen and speak at the same time, solving one of the last puzzles of engaging discussions. Next year we will all be talking to AI models on our phones as if we had been doing it for ages, and it will feel as natural to us as talking to a close friend.

EMAIL NEWSLETTER

If you would like to receive Tech Insights as a weekly email straight to your inbox, you can sign up at: https://techbyjohan.com/newsletter/ . You will receive one email per week, nothing else, and your contact details will never be shared with any third party.

THIS WEEK’S NEWS:

- Robot Dentist Performs World’s First Fully Automated Procedure

- Black Forest Labs Flux Realism + LoRA Creates Stunning AI Photos

- Google Creates Ping-Pong Playing Robot

- Alibaba Claims Top Spot in AI Math Models with Qwen2-Math

- Meta Announces $2 Million in Llama 3.1 Impact Grants

- OpenAI Introduces Structured Outputs in API

- AI Model Achieves Real-Time Listening and Speaking Capabilities

Robot Dentist Performs World’s First Fully Automated Procedure

https://newatlas.com/health-wellbeing/robot-dentist-world-first

The News:

- Boston-based company Perceptive has developed an AI-controlled autonomous robot that has performed the world’s first fully automated dental procedure on a human patient.

- The system uses a handheld 3D volumetric scanner to create a detailed model of the mouth using optical coherence tomography (OCT), eliminating the need for X-rays. For its first procedure, the robot prepared a tooth for a dental crown in about 15 minutes, compared to the typical two-hour process performed by human dentists.

- The robotic system can reportedly operate safely even with patient movement, based on successful dry run testing.

My take: Going to the dentist is never fun, but cutting the time from two hours to just 15 minutes is amazing, and we will probably see similar improvements in other dental procedures. I guess the main question is how it will handle pain: everyone reacts differently, and it’s never completely painless when you have things to correct in your mouth. Still, it’s an interesting concept, and I am curious to see how long it takes before it gets regulatory approval.

Black Forest Labs Flux Realism + LoRA Creates Stunning AI Photos

https://twitter.com/dr_cintas/status/1822302011833938171

The News:

- Last week I wrote about Black Forest Labs “Flux”, a model that according to its creators “surpass popular models like Midjourney v6.0, DALL·E 3 (HD) and SD3-Ultra in each of the following aspects: Visual Quality, Prompt Following, Size/Aspect Variability, Typography and Output Diversity.”

- It turns out this was not an overstatement. People have been testing Flux with LoRA and the results are nothing short of breathtaking – the photos it generates are extremely hard to identify as AI generated photos.

- You can try it out yourself in the FLUX Realism LoRA playground on fal.ai.

My take: Well, this is it. I can no longer identify AI-generated photos, and the models will only get better from here. I definitely have mixed feelings about this: sure, it’s a great technological achievement, but it also means you can no longer really trust anything you see online, in print, or anywhere else.

Google Creates Ping-Pong Playing Robot

https://sites.google.com/view/competitive-robot-table-tennis/home

The News:

- Google DeepMind has developed a robotic system capable of playing competitive table tennis at an amateur human level.

- The robot uses a combination of simulated training and real-world data to refine its skills, and can adapt to opponents’ playing styles in real time, adjusting its strategy on the fly.

- The robot arm uses a 3D-printed paddle and was tested against 29 human players of varying skill levels. The system won 45% of matches overall, including 100% against beginners and 55% against intermediate players, but lost all matches against advanced players.

My take: Table tennis is a fast-paced game that takes both adaptability and skill to master. Winning 55% of its matches against intermediate players is amazingly good, and demonstrates impressive progress in areas like real-time decision making and precise motor control.

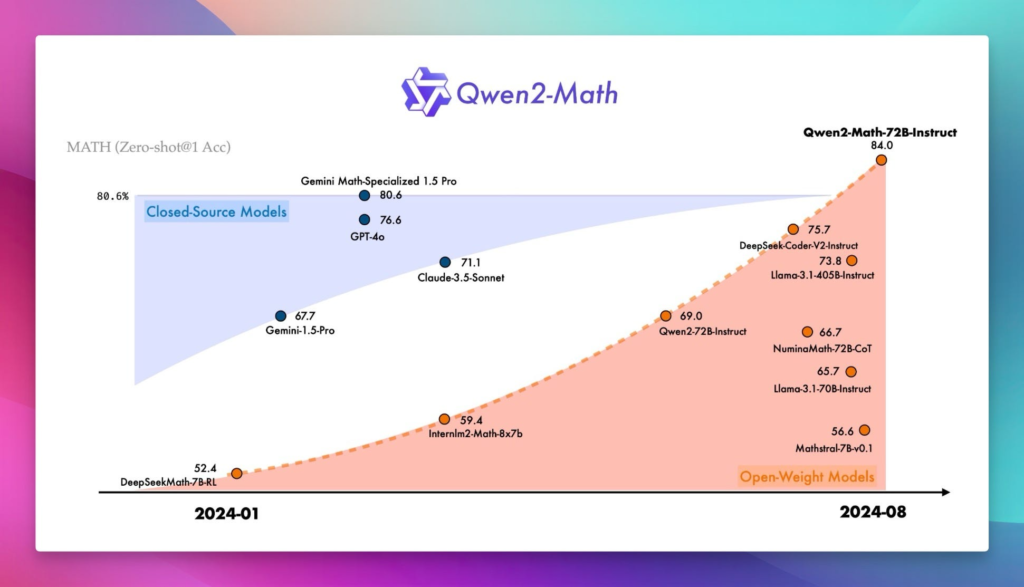

Alibaba Claims Top Spot in AI Math Models with Qwen2-Math

https://venturebeat.com/ai/alibaba-claims-no-1-spot-in-ai-math-models-with-qwen2-math/

The News:

- Alibaba’s AI research department, Alibaba DAMO Academy, has just launched Qwen2-Math 72B, an open source math model with the highest score yet on the GSM8K math benchmark, beating GPT-4, Claude 3.5 Sonnet, and Gemini 1.5 Pro!

- The model uses a technique called “Chain-of-Thought” (CoT) prompting, which allows it to break down complex problems into smaller, more manageable steps.

- Qwen2-Math is released as open-source under an Apache 2.0 license, meaning you are free to use it, fine-tune it, and adapt it for whatever projects you want!

My take: Another great release by Alibaba DAMO Academy, this time using the Apache 2.0 license! The use of Chain-of-Thought prompting is interesting as it mimics human problem-solving strategies, and other models will probably apply this concept shortly.
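
To make Chain-of-Thought prompting a bit more concrete, here is a minimal Python sketch. It is not Alibaba’s code or the Qwen2-Math prompt format: the prompt wording, the function names `build_cot_prompt` and `extract_answer`, and the sample response are all made up for illustration. The idea is simply to ask the model to write out its steps and then put the result on a clearly marked last line that a program can pick up.

```python
import re
from typing import Optional


def build_cot_prompt(question: str) -> str:
    """Wrap a question in an instruction that asks for step-by-step reasoning.

    The wording is a hypothetical example, not an official prompt template.
    """
    return (
        "Solve the following problem. Reason step by step, then give the "
        "result on a final line formatted as 'Answer: <number>'.\n\n"
        f"Problem: {question}"
    )


def extract_answer(response: str) -> Optional[float]:
    """Return the number from the 'Answer:' line, or None if there is none."""
    match = re.search(
        r"^Answer:\s*(-?\d+(?:\.\d+)?)\s*$", response, flags=re.MULTILINE
    )
    return float(match.group(1)) if match else None


# Hand-written stand-in for a model reply (not real model output).
sample_response = (
    "Step 1: 3 boxes with 12 pens each is 3 * 12 = 36 pens.\n"
    "Step 2: Giving away 5 pens leaves 36 - 5 = 31 pens.\n"
    "Answer: 31"
)

prompt = build_cot_prompt(
    "I have 3 boxes of 12 pens and give away 5 pens. How many are left?"
)
answer = extract_answer(sample_response)
```

In a real setup you would send `prompt` to whatever model you use and pass its reply to `extract_answer`. Keeping the final answer on its own line is just one convenient convention; the intermediate steps are where the model does its actual work.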

Meta Announces $2 Million in Llama 3.1 Impact Grants

https://ai.meta.com/blog/llama-3-1-impact-grants-call-for-applications

The News:

- Meta has launched the Llama 3.1 Impact Grants program, offering up to $2 million USD in total funding for projects that use Llama 3.1 to create economic and social impact.

- Applicants can receive up to $500,000 USD, with winners to be announced early next year.

- The application deadline for the Llama 3.1 Impact Grants is November 22, 2024.

My take: With its current leadership, Meta seems more committed than ever to open source, and this Impact Grants program will help build a global community of developers and innovators that use their platforms. So far I have only good things to say about how Meta approaches AI and innovation, and this program only strengthens that view.

OpenAI Introduces Structured Outputs in API

https://openai.com/index/introducing-structured-outputs-in-the-api

The News:

- OpenAI has launched Structured Outputs, a new feature in their API that ensures model-generated outputs exactly match JSON Schemas provided by developers.

- The new feature builds on last year’s JSON mode, addressing limitations in generating outputs that conform to specific schemas.

- OpenAI’s latest model, gpt-4o-2024-08-06, achieves 100% reliability in complex JSON schema following evaluations when using Structured Outputs.

My take: For anyone using the OpenAI API to build applications that require consistent, structured data output, this 100% reliability claim is a game-changer. Use cases include dynamically generating user interfaces and extracting structured data, and I can see this being used on a wide scale for research purposes and industrial applications.
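
If you want a feel for what this looks like in practice, here is a rough Python sketch of a request body that uses Structured Outputs. The `response_format` shape (type `json_schema` with `strict` set to true) follows OpenAI’s announcement, but the event schema, the system prompt, and the helper names `build_request` and `conforms` are my own assumptions for illustration. The sketch does not call the API; it only builds the payload and includes a tiny local check that covers just the schema features used here, not a full JSON Schema validator.

```python
import json

# Hypothetical schema for illustration: an event extracted from free text.
# Strict mode expects every property to be required and no extra keys.
EVENT_SCHEMA = {
    "type": "object",
    "properties": {
        "title": {"type": "string"},
        "date": {"type": "string"},
        "attendees": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["title", "date", "attendees"],
    "additionalProperties": False,
}


def build_request(user_text: str) -> dict:
    """Build a Chat Completions request body that asks for schema-shaped JSON."""
    return {
        "model": "gpt-4o-2024-08-06",
        "messages": [
            {"role": "system", "content": "Extract the event details."},
            {"role": "user", "content": user_text},
        ],
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "event", "strict": True, "schema": EVENT_SCHEMA},
        },
    }


_PY_TYPES = {"string": str, "array": list, "object": dict}


def conforms(value, schema: dict) -> bool:
    """Check a parsed value against the small schema subset used above."""
    kind = schema["type"]
    if not isinstance(value, _PY_TYPES[kind]):
        return False
    if kind == "array":
        return all(conforms(item, schema["items"]) for item in value)
    if kind == "object":
        props = schema["properties"]
        if any(key not in value for key in schema.get("required", [])):
            return False
        if schema.get("additionalProperties") is False and any(
            key not in props for key in value
        ):
            return False
        return all(conforms(value[key], props[key]) for key in value if key in props)
    return True


request_body = build_request("Lunch with Ana and Bo on Friday")

# Hand-written stand-in for a model reply (not real API output).
sample_reply = '{"title": "Lunch", "date": "Friday", "attendees": ["Ana", "Bo"]}'
reply_ok = conforms(json.loads(sample_reply), EVENT_SCHEMA)
```

With Structured Outputs the schema check should in principle never fail, so the local `conforms` helper is mostly useful as a safety net or when you run the same code against models that do not support the feature.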

Read more:

OpenAI: New 100% Reliable Structured Outputs – YouTube

AI Model Achieves Real-Time Listening and Speaking Capabilities

https://huggingface.co/papers/2408.02622

The News:

- AI researchers just developed a new Listening-While-Speaking Language Model (LSLM) that can listen and speak simultaneously – advancing real-time, interactive speech-based AI conversations.

- LSLM is an end-to-end system equipped with both listening and speaking channels, allowing it to process incoming audio and generate speech simultaneously.

- The model uses a token-based decoder-only Text-to-Speech (TTS) system for speech generation and a streaming self-supervised learning encoder for real-time audio input.

- The system can detect turn-taking in real-time and respond to interruptions, a key feature of natural conversation.

My take: While the new Voice Mode in ChatGPT allows you to interrupt the model while it is talking, being able to both listen and speak at the same time is critical in human dialog to allow engaging discussions. This could potentially revolutionize human-AI interactions – making conversations with machines feel truly natural and responsive.