In a report released on July 30, the White House declared that there is currently no need to restrict open source AI models with new regulations. This is probably one of several reasons why companies like Apple, Google and Meta have chosen to release models, datasets and weights as open source: companies that make key components publicly available will, for now, not face restrictions. Also, if you have not yet seen Meta’s Segment Anything Model 2 (SAM 2), go ahead and try it now. It’s one of the most amazing things you will try this year!

EMAIL NEWSLETTER

If you would like to receive Tech Insights as a weekly email straight to your inbox, you can sign up at: https://techbyjohan.com/newsletter/ . You will receive one email per week, nothing else, and your contact details will never be shared with any third party.

THIS WEEK’S NEWS:

- Meta Unveils Segment Anything Model 2 (SAM 2) for Video

- Runway Releases Image-to-Video AI

- Meta Launches Instagram-Powered AI Studio

- OpenAI Starts Rollout of Voice Mode

- Google’s Tiny AI Model Gemma 2 2B Beats GPT-3.5

- Black Forest Labs Releases FLUX.1 Image Generator

- Apple Releases DataComp-LM (DCLM), a 7B Open-Source LLM

- The White House Says It Will Not Restrict ‘Open Source’ AI with Regulations

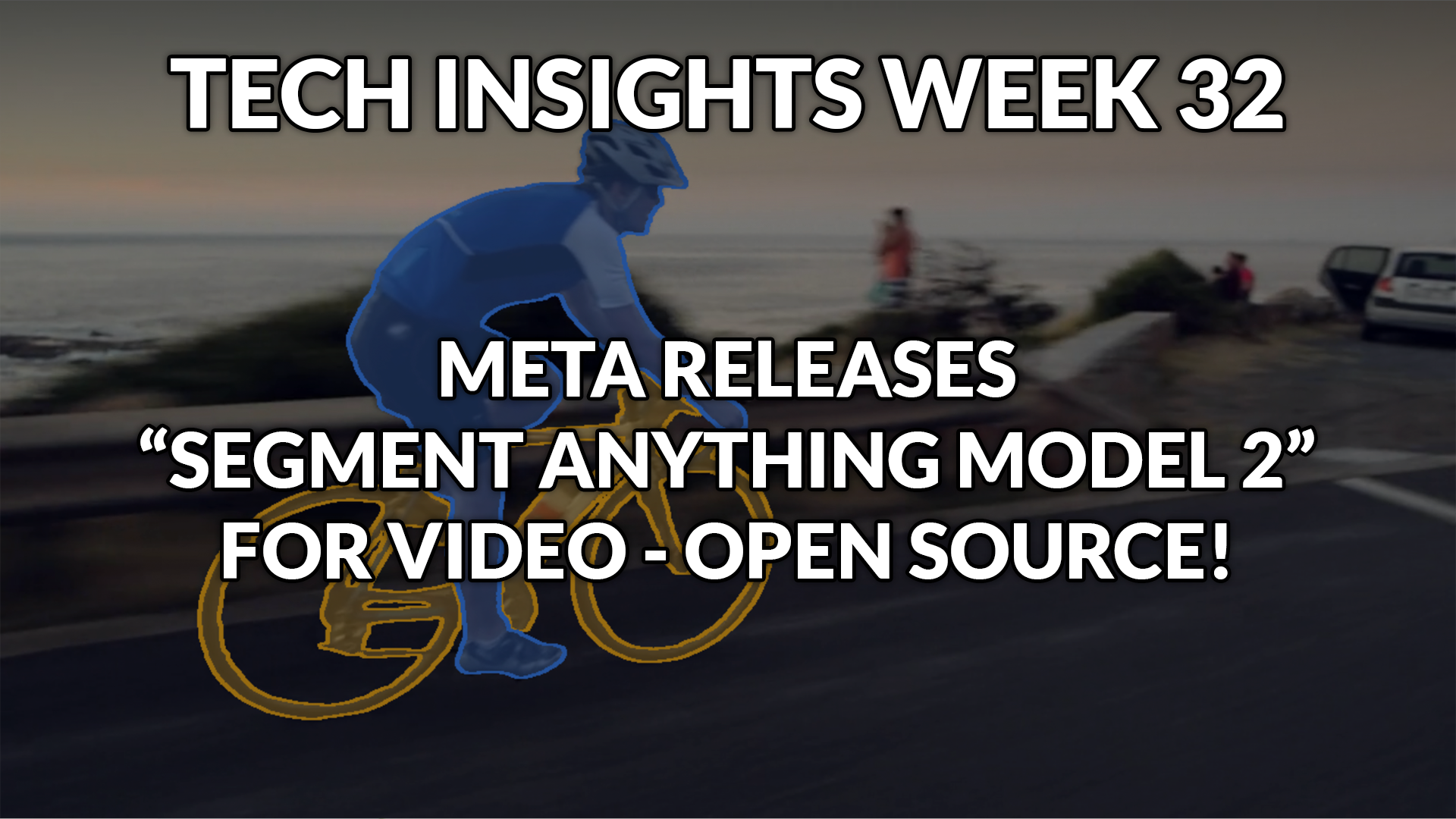

Meta Unveils Segment Anything Model 2 (SAM 2) for Video

The News:

- Meta has released Segment Anything Model 2 (SAM 2), an AI model that can identify and track objects across video frames in real time!

- SAM 2 extends Meta’s previous image segmentation capabilities to video and can handle challenges such as object occlusion and fast movement.

- The model can segment any object in a video and create cutouts in a few clicks.

- Meta is open-sourcing the model under the Apache 2.0 license and is also releasing a large annotated dataset of around 50,000 videos used for training.

What you might have missed: You can try an online demo of SAM 2 at sam2.metademolab.com – it’s amazing!

My take: The online demonstration of SAM 2 is one of the most impressive things I have tried this year. The demo’s performance and precision are amazingly good. I tried many different tracking setups, and SAM 2 handled every one of them. SAM 2 could be a true game changer for video editors, and since Meta released it under the Apache 2.0 license, we should expect to see it in many video editing tools soon. Wow!

Runway Releases Image-to-Video AI

https://twitter.com/runwayml/status/1817963062646722880

The News:

- Four weeks ago I wrote about Runway Gen-3. Last week Runway added image-to-video to Gen-3, meaning you can now create high-quality videos from still images.

- Image-to-video generations are either 5 or 10 seconds long and consume “credits”, which you pay for through Runway’s subscription tiers. Subscriptions start at $12 per month, which gives you about 63 seconds of generated video.

- If you want to give it a try, head over to Runway and click “Try Gen-3 Alpha”, upload an image and watch it come to life.
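To see where the “about 63 seconds” figure comes from, here is a minimal arithmetic sketch. It assumes, as hypothetical inputs not stated above, that the $12 plan includes 625 credits per month and that Gen-3 Alpha costs 10 credits per second of video; check Runway’s own pricing page before relying on these numbers.

```python
# Hypothetical pricing inputs, NOT taken from the article: we assume the
# $12/month plan includes 625 credits and Gen-3 Alpha costs 10 credits per
# second of generated video. Verify against Runway's current pricing.
MONTHLY_CREDITS = 625
CREDITS_PER_SECOND = 10
CLIP_LENGTHS_S = (5, 10)  # clip lengths mentioned in the article


def seconds_per_month(credits: int, credits_per_second: int) -> float:
    """Total seconds of video that a monthly credit budget buys."""
    return credits / credits_per_second


def clips_per_month(credits: int, credits_per_second: int, clip_seconds: int) -> int:
    """Number of whole clips of a given length the budget covers."""
    return credits // (credits_per_second * clip_seconds)


if __name__ == "__main__":
    total = seconds_per_month(MONTHLY_CREDITS, CREDITS_PER_SECOND)
    print(f"~{total:.1f} s of video per month")
    for length in CLIP_LENGTHS_S:
        n = clips_per_month(MONTHLY_CREDITS, CREDITS_PER_SECOND, length)
        print(f"{n} clips of {length} s")
```

Under these assumptions the budget works out to 62.5 seconds, or 12 five-second clips versus 6 ten-second clips per month.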

My take: The demonstrations posted by Runway are nothing short of amazing. I see many use cases for this, especially in visual effects and film production, where you may have a single movie frame that you want to extend with new content. If you have two minutes to spare, don’t miss the sample videos.

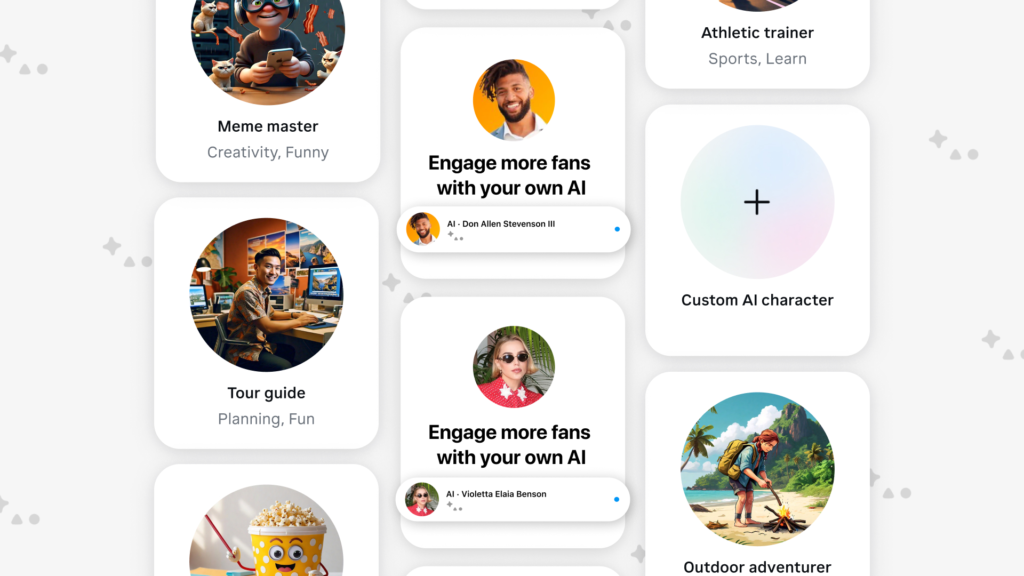

Meta Launches Instagram-Powered AI Studio

https://about.fb.com/news/2024/07/create-your-own-custom-ai-with-ai-studio/

The News:

- Meta has rolled out AI Studio, a platform that lets Instagram content creators build a custom AI avatar of themselves, trained on their own Instagram content.

- Avatars built with AI Studio are modeled on their content creator and can interact with followers.

- If you live in the US or have a VPN you can explore avatars from creators like Chris Ashley, Violet Benson, Don Allen and Kane Kallaway.

- Users can interact with the AI avatars on Instagram, Messenger, WhatsApp or the web.

My take: Would you spend time chatting with an AI avatar trained on the content of your favourite influencer? I definitely wouldn’t. AI Studio as a concept may not be very exciting in itself, but how people actually use it is, and that is what I will be following over the coming months. Will people really use this on a daily basis?

OpenAI Starts Rollout of Voice Mode

https://twitter.com/OpenAI/status/1818353581713768651

The News:

- OpenAI has started rolling out Advanced Voice Mode in ChatGPT, which offers “more natural, real-time conversations, allows you to interrupt anytime, and senses and responds to your emotions.”

- The rollout begins as an alpha release, with the goal of giving everyone with a Plus subscription access during fall 2024.

- Screen and video sharing is not included in the first release and will launch “at a later date”.

My take: As with Meta’s AI Studio (above), I am mostly fascinated by how people will use this in their daily lives. While Voice Mode is technically impressive (it runs server-side yet still has very low latency), I don’t see it changing the way I use LLMs myself. Will people start to “bond” with ChatGPT’s voice mode? Will they talk to it like a close friend? These are all things that will be interesting to follow.

Google’s Tiny AI Model Gemma 2 2B Beats GPT-3.5

The News:

- Google has just released Gemma 2 2B, a lightweight AI model that outperforms GPT-3.5 on key benchmarks.

- Despite having only 2.6B parameters, Gemma 2 2B was trained on a massive 2-trillion-token dataset.

- It scores 1130 on the LMSYS Chatbot Arena, slightly ahead of GPT-3.5-Turbo-0613 (1117) and Mixtral-8x7B (1114), models many times its size!

- The model is open-source, and developers can download the model’s weights from Google’s announcement page.
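Chatbot Arena scores are reported on an Elo-style scale, so a rating gap can be turned into an expected head-to-head win rate. The sketch below assumes the standard Elo formula with a 400-point scale (our reading of the leaderboard, not something stated in Google’s announcement) and shows how small a 13- to 16-point gap is in practice.

```python
# Standard Elo expected-score formula. Assumption: Arena ratings can be read
# on the usual Elo scale (base 10, 400-point scale factor).
def expected_win_rate(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Probability that model A is preferred over model B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / scale))


# Arena scores quoted in the article
GEMMA_2_2B = 1130
GPT_35_TURBO_0613 = 1117
MIXTRAL_8X7B = 1114

if __name__ == "__main__":
    rivals = [("GPT-3.5-Turbo-0613", GPT_35_TURBO_0613), ("Mixtral-8x7B", MIXTRAL_8X7B)]
    for name, rating in rivals:
        p = expected_win_rate(GEMMA_2_2B, rating)
        print(f"Gemma 2 2B vs {name}: {p:.1%} expected win rate")
```

The result is roughly 51.9% against GPT-3.5-Turbo-0613 and 52.3% against Mixtral-8x7B: a small but real edge, which is impressive for a model of this size.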

My take: AI is moving local, and a model with only 2.6B parameters can fit on a device with 8GB of memory without having to resort to quantization. The performance of Gemma 2 2B is amazingly good and puts Google in a leading position when it comes to small language models.
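The “8GB without quantization” point is simple arithmetic: parameter count times bytes per parameter. This rough sketch assumes unquantized weights are stored in bfloat16 (2 bytes per parameter) and ignores activations, the KV cache and runtime overhead, so it gives a lower bound rather than a measured footprint.

```python
# Rough weight-only memory estimate for Gemma 2 2B.
# Assumptions: 1 GB = 1e9 bytes; activations, KV cache and framework
# overhead are ignored, so real memory usage will be higher.
PARAMS = 2.6e9  # parameter count from the article

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,  # typical unquantized half-precision storage
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the model weights, in gigabytes."""
    return params * bytes_per_param / 1e9


if __name__ == "__main__":
    for dtype, size in BYTES_PER_PARAM.items():
        gb = weight_memory_gb(PARAMS, size)
        print(f"{dtype:>5}: {gb:4.1f} GB of weights")
```

In bf16 the weights take about 5.2 GB, leaving headroom on an 8GB device; in full fp32 they would need about 10.4 GB and would not fit, so the claim assumes half precision.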

Black Forest Labs Releases FLUX.1 Image Generator

https://blackforestlabs.ai/announcing-black-forest-labs/

The News:

- Black Forest Labs is a new startup founded by researchers who previously worked on VQGAN, Latent Diffusion and the Stable Diffusion models for image and video generation.

- Black Forest Labs just released three new image generator models: FLUX.1 PRO, DEV and SCHNELL.

- FLUX.1 PRO and DEV “Surpass popular models like Midjourney v6.0, DALL·E 3 (HD) and SD3-Ultra in each of the following aspects: Visual Quality, Prompt Following, Size/Aspect Variability, Typography and Output Diversity.”

- Black Forest Labs is also working on an upcoming text-to-video model.

My take: You can try FLUX.1 yourself through the API or via web-based access. This is definitely a model to keep an eye on: if it really does perform better than both Midjourney and DALL·E 3, FLUX.1 will be a serious contender going forward. Also, the SCHNELL model is released under the Apache 2.0 license, so you can use it freely, including in commercial projects.

Apple Releases DataComp-LM (DCLM), a 7B Open-Source LLM

https://huggingface.co/apple/DCLM-7B

The News:

- Apple has released DCLM, a 7B LLM that is fully open source: the weights, training code and dataset are all publicly available!

- The DCLM-7B model achieves 63.7% 5-shot accuracy on MMLU, surpassing Mistral 7B and approaching the performance of Llama 3 and Gemma.

My take: This is the way to do open source! Don’t just release the model; include the weights, training code and dataset as well. Great job, Apple, and hopefully more companies will follow.

Read more:

- DCLM Model: apple/DCLM-7B · Hugging Face

- Repository: GitHub – mlfoundations/dclm

- Dataset: mlfoundations/dclm-baseline-1.0 · Hugging Face

The White House Says It Will Not Restrict ‘Open Source’ AI with Regulations

https://apnews.com/article/ai-open-source-white-house-f62009172c46c5003ddd9481aa49f7c3

The News:

- Last year President Joe Biden gave the U.S. Commerce Department until July 2024 to talk to experts and come back with recommendations on how to manage the potential benefits and risks of “open” AI models.

- In a report released on Tuesday, July 30, the White House came out in favor of open-source artificial intelligence technology, arguing that there is no need right now for restrictions on companies making key components of their powerful AI systems widely available.

My take: There are several reasons why Apple, Google and Meta are going “open source” with their latest AI models, and this is probably one of them. Open-source AI models will not face any restrictions in the short term, which is good news for Apple, Google and Meta, but not-so-good news for Anthropic and OpenAI.