What an AI week it has been! OpenAI announced SearchGPT, Meta released Llama 3.1, and Mistral launched Large 2! Open Source has definitely caught up with closed source, and it is interesting to see how all current models seem to perform almost identically, open source as well as closed source. Maybe it’s because they all use the same datasets, similar hardware configurations, and the same training algorithms. On July 25, Sam Altman, CEO of OpenAI, wrote on X: “AI progress will be immense from here”. This makes it very interesting to see what will push current LLMs into the next generation – will it primarily be new data, new hardware, new algorithms, or everything together?

EMAIL NEWSLETTER

If you would like to receive Tech Insights as a weekly email straight to your inbox, you can sign up at: https://techbyjohan.com/newsletter/ . You will receive one email per week, nothing else, and your contact details will never be shared with any third party.

THIS WEEK’S NEWS:

- OpenAI Announces SearchGPT: AI-Powered Search Prototype

- Meta Launches Llama 3.1 8B, 70B & 405B – Open Source is Catching Up!

- Udio Announces Version 1.5 With Stem Downloads

- Kling AI Video Generator Now Available Globally

- Mistral Releases “Large 2” – Takes on AI Giants

- Google’s AI Scores Silver at Math Olympiad

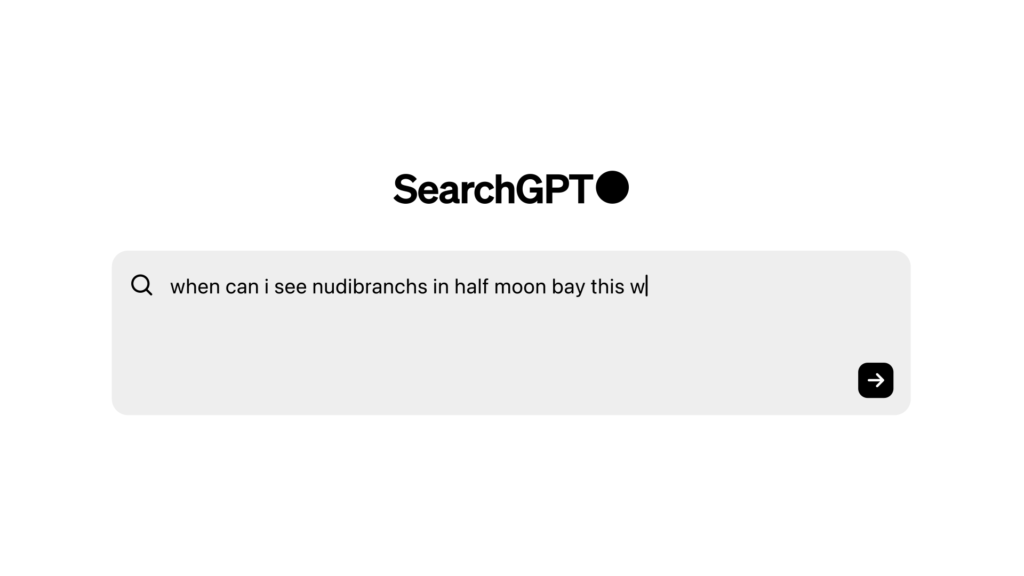

OpenAI Announces SearchGPT: AI-Powered Search Prototype

https://openai.com/index/searchgpt-prototype

“AI search is going to become one of the key ways that people navigate the internet”

Nicholas Thompson, CEO of The Atlantic

The News:

- OpenAI announced a “temporary prototype” called SearchGPT, demonstrating a new AI search feature “combining the strengths of OpenAI models with information from the web”.

- The prototype is powered by GPT-4 and will be initially available to 10,000 test users. It will only be available for a limited time, after which it will be evaluated and parts of SearchGPT will be integrated into ChatGPT.

What you might have missed: To get on the waitlist for SearchGPT, you need to log into your ChatGPT account and request to join.

My take: The current prototype of SearchGPT looks very similar to Perplexity, showing referenced pages and building a search strategy before doing the actual web crawling. Web search feels like the natural next step for ChatGPT and it will be very interesting to follow the development. Releasing as a “prototype” also makes it easier to accept mistakes presented by the model – even if the results are wildly incorrect they can easily be dismissed since this is a “time limited test version”.

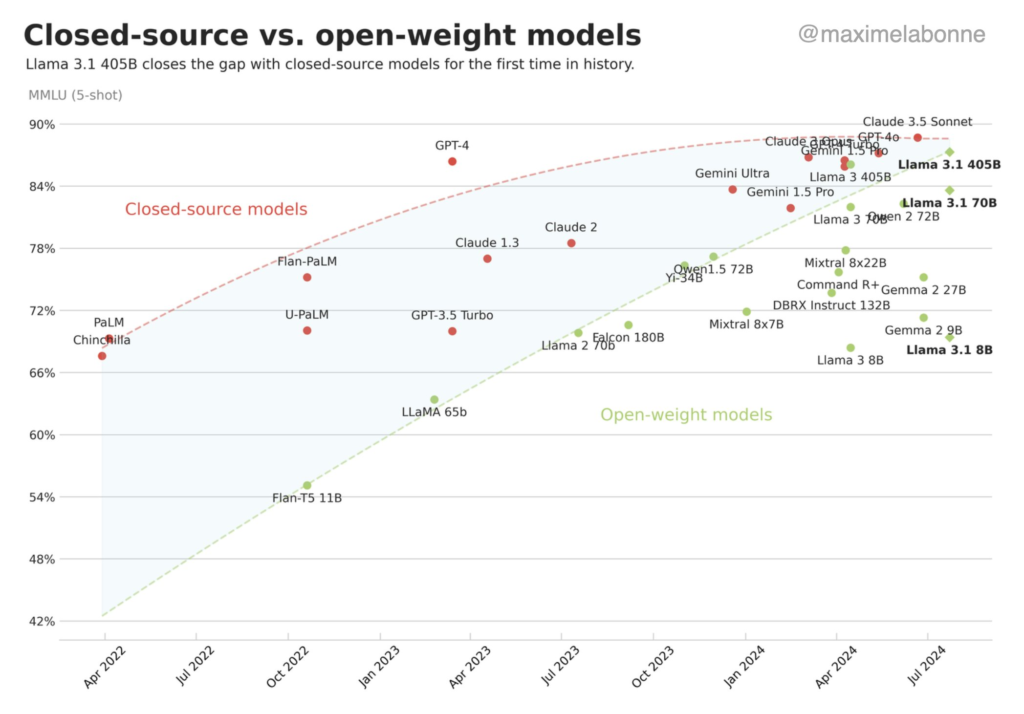

Meta Launches Llama 3.1 8B, 70B & 405B – Open Source is Catching Up!

https://ai.meta.com/blog/meta-llama-3-1

Open Source Models Catching Up to Closed Source (photo credit @maximelabonne)

The News:

- Meta has just launched Llama 3.1 – a family of multilingual Large Language Models (LLMs) in 8B, 70B, and 405B sizes (text in/text out). The new models have a context length of 128K and add support for eight languages.

- The high-end model Llama 3.1 405B was trained on over 15 trillion tokens, on a cluster of over 16,000 H100 GPUs.

- In AI benchmarks, the high-end Llama 3.1 405B model performs similarly to GPT-4o and Claude 3.5 Sonnet.

- Compared to Llama 3, Llama 3.1 is now released under a permissive license that allows for free use, modification, and distribution of the model.

- Also, compared to Llama 3, you can now use the outputs of Llama 3.1 to train models other than Llama models. Under the previous license version, you could only use them to train Llama models.

What you might have missed:

- The Llama 3.1 license is similar to the Apache 2.0 license, with three key differences: (1) you must prominently display “Built with Llama” on your related website, user interface, blog post, about page, or product documentation, (2) if you use Llama to create, train, fine-tune, or otherwise improve an AI model, your model name must be prefixed with “Llama”, and (3) if you have more than 700 million monthly active users, you must ask Meta for a commercial license.

My take: This is a significant achievement by Meta, and it clearly shows how quickly Open Source models have caught up with OpenAI, Anthropic and other “Closed Source” AI platforms. Based on my personal tests, however, Claude 3.5 is still miles ahead of the competition in code generation, including Llama 3.1 405B. So while Llama 3.1 has caught up in many benchmarks, it’s still not there for coding. Maybe Meta will catch up in coding too within 6-12 months, but by then it will be time for the next generation of LLMs to be released, and I expect the gap to widen yet again.


Udio Announces Version 1.5 With Stem Downloads

https://www.udio.com/blog/introducing-v1-5

The News:

- Udio 1.5 just launched with four main new features: improved audio quality, key control, stem downloads, and audio-to-audio (remixing audio uploads).

- Stem downloads means you can now download your generated tracks split into four separate stems: Vocals, Bass, Drums, and everything else. This is HUGE for music producers, who can now cut, mix, rearrange and process each stem individually!

- Audio-to-audio means you can take one style of music and make it into another – very cool, check the demo!

- Key control means you can now add keys (C minor, Ab major, etc.) to your prompts to guide the AI.

My take: Udio is growing a significant fanbase among music producers and with features like key control and stem downloads it is quickly becoming the “de facto” music production tool in almost any music studio worldwide today.

Kling AI Video Generator Now Available Globally

The News:

- Kling AI, developed by Chinese tech giant Kuaishou Technology, just released its impressive AI video model “Kling” globally.

- Kling can generate videos up to two minutes long, and the global version offers 66 free credits daily, with each generation costing 10 credits.

- According to Kuaishou, Kling utilizes advanced 3D reconstruction technology for more natural movements.

- The platform accepts prompts of up to 2,000 characters, allowing for detailed video descriptions.
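To put the free tier in perspective, here is a quick back-of-the-envelope calculation using only the two numbers above (66 free credits per day, 10 credits per generation). It is a rough sketch: the function name is made up for illustration, and it assumes unused credits are simply left over at the end of the day, which Kuaishou's terms may or may not confirm.

```python
# Figures taken from the bullet list above.
DAILY_FREE_CREDITS = 66
CREDITS_PER_GENERATION = 10


def free_generations(daily_credits: int, cost_per_generation: int) -> tuple[int, int]:
    """Return (full generations per day, credits left over).

    Hypothetical helper for illustration; not part of any Kling API.
    """
    return divmod(daily_credits, cost_per_generation)


generations, leftover = free_generations(DAILY_FREE_CREDITS, CREDITS_PER_GENERATION)
print(f"{generations} free generations per day, {leftover} credits left over")
```

In other words, the daily allowance covers six full generations, with a few credits that are not enough for a seventh.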

My take: When Kuaishou initially launched Kling, you could only access it if you had a Chinese phone number. Global users are still limited to 5-second generations, but for many use cases, such as YouTube B-roll, that limit will be totally acceptable.

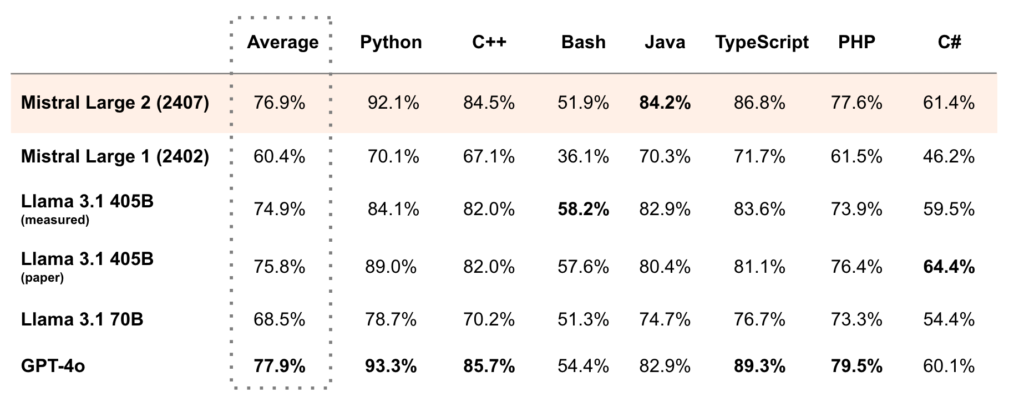

Mistral Releases “Large 2” – Takes on AI Giants

https://mistral.ai/news/mistral-large-2407

The News:

- Mistral have released their new LLM “Large 2” which is “on par with leading models such as GPT-4o, Claude 3 Opus, and Llama 3 405B”.

- Large 2 boasts 123 billion parameters, less than a third of Meta’s Llama 3.1 405B, yet outperforms it in code generation and math.

- The model features a 128,000 token context window and improved multilingual support for 12 languages and over 80 coding languages.

- Large 2 is released under the Mistral Research License, which allows usage and modification for research and non-commercial purposes. A paid license is required for commercial use.
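The "less than a third" size comparison is easy to check from the two parameter counts quoted above. A minimal sketch, using only those figures (the variable names are mine):

```python
# Parameter counts in billions, as quoted in the bullet list above.
LARGE_2_PARAMS_B = 123
LLAMA_3_1_PARAMS_B = 405

ratio = LARGE_2_PARAMS_B / LLAMA_3_1_PARAMS_B

print(f"Large 2 is {ratio:.1%} the size of Llama 3.1 405B")
print(f"Less than a third: {ratio < 1 / 3}")
```

The ratio comes out at roughly 30%, so "less than a third" holds, if only just.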

My take: It’s interesting how all current models seem to perform almost identically today, open source as well as closed source. Maybe it’s because they all use the same datasets, similar hardware configurations, and the same training algorithms. This makes it very interesting to see what will push current LLMs into the next generation – will it be new data, new hardware, new algorithms, or everything together? Still, Large 2 is an impressive accomplishment – it’s less than one-third the size of Llama 3.1 405B but still compares well to GPT-4o.

Google’s AI Scores Silver at Math Olympiad

https://deepmind.google/discover/blog/ai-solves-imo-problems-at-silver-medal-level

The News:

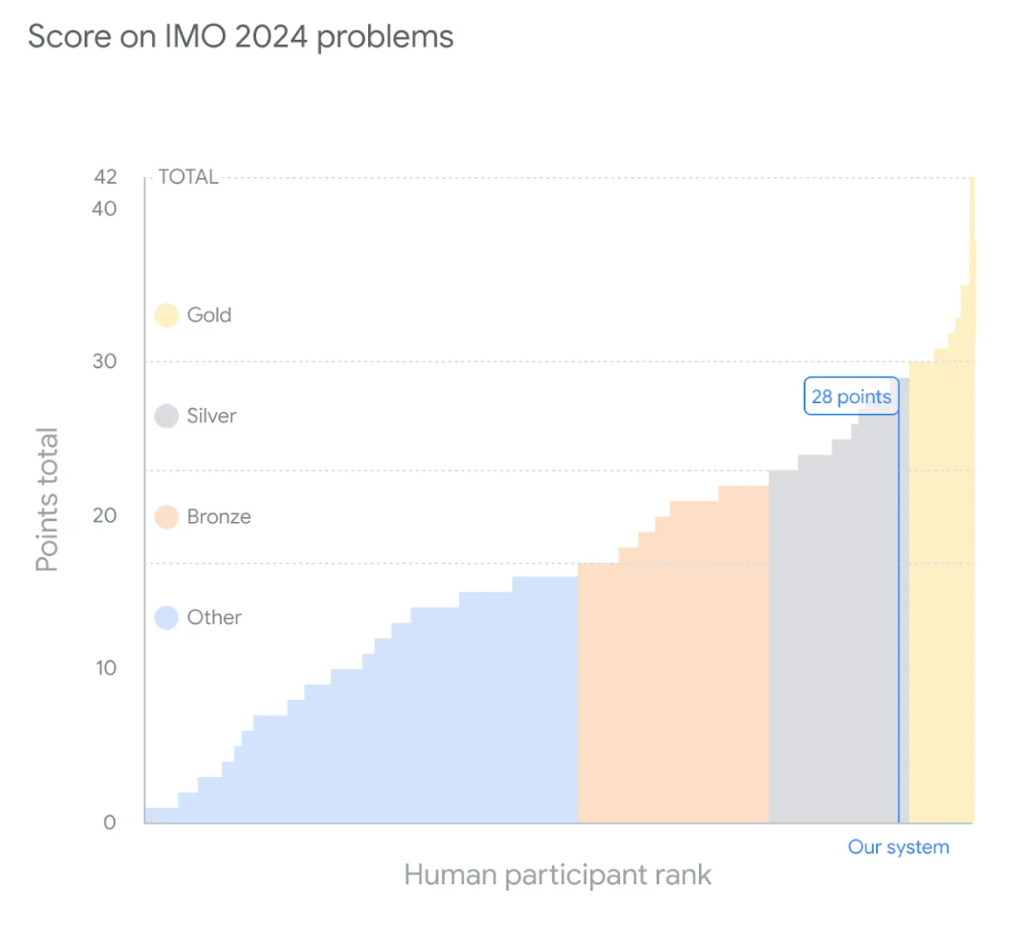

- Google DeepMind’s AI systems, AlphaProof and AlphaGeometry 2, achieved a significant milestone in AI’s math reasoning capabilities, attaining a silver medal-equivalent score at this year’s International Mathematical Olympiad (IMO). AlphaProof uses a fine-tuned Gemini model to translate and solve complex math problems.

- The AI system solved 4 out of 6 problems from the 2024 IMO, scoring 28 out of 42 points. In previous AI attempts, models could barely solve 1 in 100 past IMO problems.
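For readers unfamiliar with IMO scoring, the numbers above fit together as follows. This sketch relies on one fact not stated in the bullets: each IMO problem is worth up to 7 points. It also assumes the four solved problems each received full marks, which is what a score of 28 implies.

```python
# From the bullet above: 4 of 6 problems solved, 28 of 42 points.
TOTAL_PROBLEMS = 6
SOLVED_PROBLEMS = 4
POINTS_PER_PROBLEM = 7  # standard IMO scoring; not stated in the text above

max_score = TOTAL_PROBLEMS * POINTS_PER_PROBLEM
score = SOLVED_PROBLEMS * POINTS_PER_PROBLEM  # assumes full marks on each solved problem

print(f"Score: {score} out of {max_score} ({score / max_score:.0%})")
```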

My take: You could argue about what “smart” means in terms of AI and LLMs, but the fact that the model is able to solve difficult math problems at breakthrough speeds means we now have tools at our disposal that should make research and development both easier and faster in the near future.