If you live in the EU and have been looking forward to Meta’s upcoming multimodal LLM, the company just announced that it will not be available to European companies and researchers due to “the unpredictable nature of the European regulatory environment”. In contrast, The Washington Post has obtained a document written by Trump allies, with plans to “immediately review and eliminate ‘burdensome regulations’ on AI development” in the US. The gap between Europe and the US is growing, and depending on how the election turns out later this year, it could widen sharply next year.

This week’s news:

- Meta Won’t Bring Future Multimodal AI Models to EU

- Trump Allies Plan to Remove AI Regulations in the US

- Tech Giants Trained AI on YouTube Without Consent

- Mistral AI And Nvidia Unveil New Language Model: Mistral NeMo 12B

- OpenAI Uses Two AIs to Make them Easier to Understand

- Google Shutting Down its URL Shortener, Breaking All Links

- Tech Giants Unite to Secure AI: The Launch of CoSAI

- Anthropic Launches Claude AI Android App

- OpenAI Announces “GPT-4o Mini” – Replacing GPT 3.5

Meta Won’t Bring Future Multimodal AI Models to EU

https://www.axios.com/2024/07/17/meta-future-multimodal-ai-models-eu

“We will release a multimodal Llama model over the coming months, but not in the EU due to the unpredictable nature of the European regulatory environment,” Meta said in a statement to Axios.

The News:

- Meta will withhold its next multimodal AI model — and future ones — from customers in the European Union because of what it says is a lack of clarity from regulators.

- Meta says its decision also means that European companies will not be able to use the multimodal models even though they are being released under an open license.

- Meta’s decision follows a similar move by Apple, which said last month that it won’t release its Apple Intelligence features in Europe because of regulatory concerns.

What you might have missed:

- Meta announced in May that it planned to use publicly available posts from Facebook and Instagram users to train future models. Meta said it sent more than 2 billion notifications to users in the EU, offering a means for opting out, with training set to begin in June.

- Meta says it briefed EU regulators months in advance of that public announcement and received only minimal feedback, which it says it addressed.

- In June, after announcing its plans publicly, Meta was ordered to pause the training on EU data. A couple of weeks later it received dozens of questions from data privacy regulators across the region.

My take: This affects not only companies and researchers working with AI in Europe, but consumers as well. Any company planning to use Meta’s upcoming multimodal AI will now have to choose between (1) implementing two different models – one for the EU and one for the rest of the world, or (2) skipping AI features for European customers completely. Currently it looks like (2) will be the most likely choice, at least in the short term, which is a shame since we are right on the brink of the next evolution of LLMs trained on next-generation NVIDIA hardware.

Trump Allies Plan to Remove AI Regulations in the US

https://www.washingtonpost.com/technology/2024/07/16/trump-ai-executive-order-regulations-military

The News:

- A document obtained by The Washington Post includes a ‘Make America First in AI’ section, calling for “Manhattan Projects” to advance military AI capabilities.

- According to The Washington Post, Silicon Valley investors are now “flocking to support the former president”.

- The document was written by allies of former U.S. President Donald Trump. The plan would immediately review and eliminate ‘burdensome regulations’ on AI development, and repeal President Biden’s AI executive order.

What you might have missed:

- Elon Musk will be donating $45 million a month – $180 million from July through October – to America PAC, a political action committee dedicated to reelecting former President Donald Trump.

- Last Tuesday, venture capitalists Marc Andreessen and Ben Horowitz endorsed Trump on their podcast. They said he was the best candidate for “Little Tech”. “What he said to us is, ‘AI is very scary, but we absolutely have to win,’” Horowitz recounted. “‘Because if we don’t win then China wins, and that’s a very bad world.’”

My take: With this document at hand it’s easy to understand why industry giants such as Elon Musk and Peter Thiel officially support the Trump campaign. My guess is that if Trump wins the election, the gap between the EU and the US will be bigger than ever, especially in terms of AI and IT regulations. Several companies will accelerate their AI development significantly in the US thanks to fewer regulations, which in turn will make it even harder to launch their products in the EU.

Tech Giants Trained AI on YouTube Without Consent

https://www.proofnews.org/apple-nvidia-anthropic-used-thousands-of-swiped-youtube-videos-to-train-ai

The News:

- An investigation by Proof News has revealed that tech companies like Apple, Nvidia, Anthropic, and Salesforce have used subtitles from thousands of YouTube videos to train their AI models without the creators’ consent.

- The dataset, called “YouTube Subtitles,” contains transcripts from 173,536 videos across 48,000 channels, including content from educational institutions, media outlets, and popular YouTubers.

- The dataset was part of a larger collection called “The Pile,” created by AI researchers at EleutherAI, a “non-profit AI research lab that focuses on interpretability and alignment of large models.” Content from channels like Khan Academy, MIT, Harvard, and popular YouTubers such as MrBeast and PewDiePie was included.

What you might have missed:

- Apple, Nvidia, and Salesforce (companies valued in the hundreds of billions and trillions of dollars) describe in their research papers and posts how they used “The Pile” to train their AI. Documents also show Apple used The Pile to train OpenELM, a high-profile model released in April, weeks before the company revealed it will add new AI capabilities to iPhones and MacBooks.

My take: This is great research done by Proof News. The Pile does not contain credits for the YouTube subtitles, so Proof News wrote a script to match the subtitles to public YouTube channels to find out where the data came from. It baffles me that even big companies like Apple, Nvidia and Salesforce don’t seem to consider the rights to the data they are using, and even write about using it in their public research papers. Everything seems to be allowed when training AI models, as long as the model gets better and the company remains competitive.

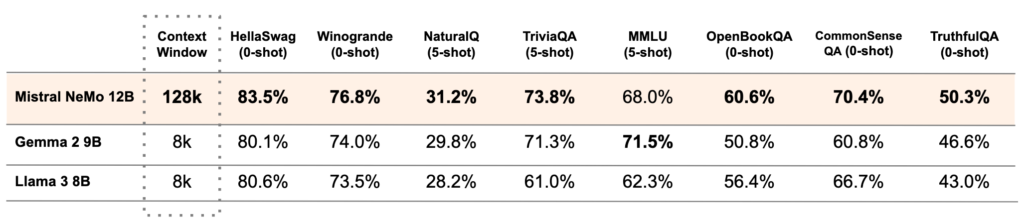

Mistral AI And Nvidia Unveil New Language Model: Mistral NeMo 12B

https://mistral.ai/news/mistral-nemo

The News:

- French company Mistral AI and NVIDIA have launched Mistral NeMo 12B, a state-of-the-art language model for enterprise applications such as chatbots, multilingual tasks, coding, and summarization.

- Mistral NeMo is optimized for quantization and can run on standard consumer PC hardware such as the GeForce RTX 4090.

- Mistral NeMo is released under the Apache 2.0 license, which allows for both research and commercial use without restrictions.
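To make the quantization point a bit more concrete, here is a minimal sketch of why lower precision matters on a 24 GB card like the RTX 4090. It is an illustration only: it uses simple symmetric int8 rounding, which is an assumption chosen for readability and not necessarily the scheme Mistral or NVIDIA use, and the memory estimate counts model weights only (no activations or cache). The function names are made up for this example.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Rough memory needed for the weights alone, in gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


# A 12-billion-parameter model at different precisions (weights only).
for bits in (16, 8, 4):
    print(f"{bits}-bit weights: {weight_memory_gb(12e9, bits):.0f} GB")

# Toy example values (hypothetical, not real model weights).
weights = [0.52, -1.27, 0.003, 0.9]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, f"max rounding error: {max_error:.4f}")
```

The trade-off is visible in the toy values: each weight takes a quarter or half of the space, at the price of a small rounding error per weight.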

My take: Great news and an impressive feat by Mistral AI and Nvidia! Great to see them use the Apache 2.0 license for the model, and I can see NeMo 12B becoming the “de facto” standard for local corporate LLMs in the fall of 2024.

OpenAI Uses Two AIs to Make them Easier to Understand

https://openai.com/index/prover-verifier-games-improve-legibility

The News:

- OpenAI just published a paper detailing a method to make large language models produce more understandable and verifiable outputs, using a game played between two AIs.

- The technique uses a “Prover-Verifier Game” where a stronger AI model (the prover) tries to convince a weaker model (the verifier) that its answers are correct.

- Through multiple rounds of the game, the prover learns to generate solutions that are not only correct, but also easier to verify and understand.

My take: As AI models become more competent, they still need to be able to explain things to us average-intelligence humans. The Prover-Verifier Game approach is interesting because it doesn’t rely on direct human feedback to improve legibility. I believe this will be important in the future as AI systems become more advanced and potentially surpass human understanding in certain domains.

Google Shutting Down its URL Shortener, Breaking All Links

https://developers.googleblog.com/en/google-url-shortener-links-will-no-longer-be-available

The News:

- On July 18 Google announced that it will permanently disable its popular URL shortener https://goo.gl/.

- The service was deprecated in 2018, and as of August 2025 these URLs will no longer return a response.

My take: I have used goo.gl myself for many years since it was launched in 2010. Deprecating the service and no longer accepting new links is one thing, but shutting it down completely means potentially millions of links will be lost forever. Personally I think this was a bad decision – keeping this service up and running cannot have been costly, but the implications of shutting it down can be significant. One more service added to the Google Graveyard.
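If you have old documents, wikis or web pages of your own, it may be worth checking them for goo.gl links before the shutdown. Below is a minimal sketch of how that could look for a piece of text. The regular expression and the function name are my own assumptions, the sample links are made up, and the script only finds links, it does not resolve or replace them.

```python
import re

# Assumed pattern: http(s)://goo.gl/ followed by a short path.
GOO_GL_PATTERN = re.compile(r"https?://goo\.gl/[A-Za-z0-9/_-]+")


def find_goo_gl_links(text):
    """Return unique goo.gl links in order of first appearance."""
    found = []
    for link in GOO_GL_PATTERN.findall(text):
        if link not in found:
            found.append(link)
    return found


# Hypothetical sample text; these links are placeholders.
sample = (
    "Slides: https://goo.gl/abc123, form: http://goo.gl/forms/XyZ. "
    "Slides again: https://goo.gl/abc123"
)

links = find_goo_gl_links(sample)
for link in links:
    print(link)
```

In practice you would read the text from your own files and then replace each link with its full destination URL while the redirects still work.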

Tech Giants Unite to Secure AI: The Launch of CoSAI

The News:

- On July 18 a coalition of major tech companies announced the formation of the Coalition for Secure AI (CoSAI). Companies include Google, IBM, Intel, Microsoft, NVIDIA, PayPal, Amazon, Anthropic, Cisco and OpenAI.

- CoSAI is an open-source initiative designed to give all practitioners and developers the guidance and tools they need to create Secure-by-Design AI systems. CoSAI will foster a collaborative ecosystem to share open-source methodologies, standardized frameworks, and tools.

- CoSAI’s scope includes securely building, integrating, deploying, and operating AI systems, focusing on mitigating risks such as model theft, data poisoning, prompt injection, scaled abuse, and inference attacks.

- Initially, CoSAI will focus on three workstreams: software supply chain security for AI systems, preparing defenders for a changing cybersecurity landscape, and AI security governance.

My take: There is a growing discussion and many concerns about AI safety, and the formation of CoSAI is a significant step towards addressing these. I see two main challenges with this coalition, however: (1) getting all these companies to collaborate and deliver something useful – it’s unclear who is driving the initiative and how much the companies are investing, and (2) it’s still not clear how smaller companies outside the coalition will benefit from the standards created by CoSAI.

Anthropic Launches Claude AI Android App

https://www.anthropic.com/news/android-app

The News:

- In May, Anthropic released their iPhone app for Claude AI, and they have now finally released their Android client.

- The native “Claude” app supports vision, so you can take camera photos for analysis. It can also do real-time language translation.

- While not as popular as ChatGPT, the Claude app for iOS got 157,000 downloads in its first five days, compared to 480,000 downloads for the ChatGPT app in its first week.

My take: I switched to Claude 3.5 a few weeks ago from ChatGPT and have not looked back. It has better reasoning, it is great for programming, and it writes better English. If you have not yet checked it out, I wholeheartedly recommend it at https://www.anthropic.com

OpenAI Announces “GPT-4o Mini” – Replacing GPT 3.5

https://openai.com/index/gpt-4o-mini-advancing-cost-efficient-intelligence

The News:

- OpenAI has released GPT-4o Mini, a smaller, cost-efficient multimodal model set to replace GPT-3.5. GPT-4o Mini supports text and vision in the API, with support for text, image, video and audio inputs and outputs coming in the future.

- GPT-4o Mini has a 128K context window and knowledge up to October 2023. It has an improved tokenizer shared with GPT-4o, so it also handles non-English languages better.

- GPT-4o Mini outperforms GPT-3.5 Turbo on academic benchmarks, scoring 82% on MMLU compared to 69.8%.

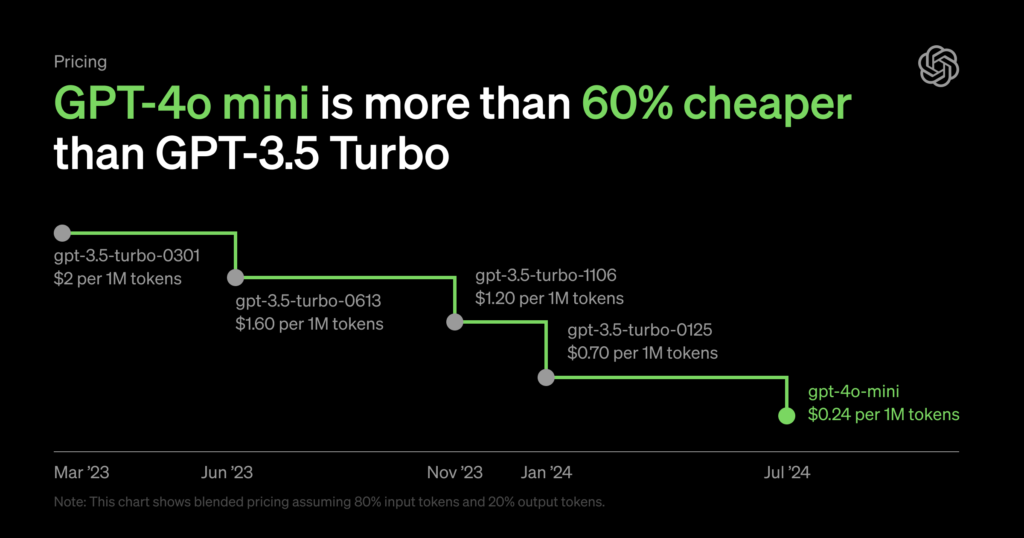

- GPT-4o Mini is over 60% cheaper than GPT-3.5 Turbo.
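To see what “over 60% cheaper” can mean for an actual bill, here is a small back-of-the-envelope calculation. The per-token prices are my assumption based on the list prices at launch ($0.15 / $0.60 per million input / output tokens for GPT-4o Mini, $0.50 / $1.50 for GPT-3.5 Turbo) and may have changed since, and the monthly workload is a made-up example.

```python
# Assumed launch-time list prices in USD per 1 million tokens (may be outdated).
PRICES_PER_MILLION = {
    "gpt-4o-mini": {"input": 0.15, "output": 0.60},
    "gpt-3.5-turbo": {"input": 0.50, "output": 1.50},
}


def monthly_cost(model, input_millions, output_millions):
    """Cost in USD for a given number of million input and output tokens."""
    price = PRICES_PER_MILLION[model]
    return input_millions * price["input"] + output_millions * price["output"]


# Hypothetical workload: 10M input tokens and 2M output tokens per month.
mini = monthly_cost("gpt-4o-mini", 10, 2)
turbo = monthly_cost("gpt-3.5-turbo", 10, 2)
savings = 1 - mini / turbo

print(f"GPT-4o Mini:   ${mini:.2f}")
print(f"GPT-3.5 Turbo: ${turbo:.2f}")
print(f"Savings:       {savings:.0%}")
```

The exact saving depends on the mix of input and output tokens, since under these assumed prices the discount is larger on input than on output.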

My take: OpenAI envisions “a future where models become seamlessly integrated in every app and on every website”. And in that future, smaller models like GPT-4o Mini play a very important role. People do however tend to get used to the incredible performance of the latest state-of-the-art LLMs like Claude 3.5 and GPT-4o, so the potential uses of these smaller models shrink as more people get used to the high-end models. It will be interesting to see how quickly the large models keep improving, and whether the gap to the smaller models grows or the small models keep improving at the same rate.