Wow, this was the most feature-packed AI week of 2024 yet! Google made FIVE major AI launches paving the way for agentic AI, we got a new quantum computing chip, Willow, and OpenAI finally released Sora and Advanced Voice Mode with Vision!

We also got a new weather prediction model from Google called GenCast, which beats the current industry standard in 97.2% of test cases, a new dataset from Harvard with nearly 1 million public-domain books, and Aurora, xAI's new image generator.

The main takeaway from last week is how strong Google can be when it weaves all its services together, building an AI ecosystem instead of individual services. The future will be built around AI agents, and Google just took the lead!

THIS WEEK’S NEWS:

- Google News: Gemini 2.0, Deep Research, Mariner, Astra, Jules

- Google announces Willow Quantum Computing Chip

- OpenAI News: Advanced Voice Mode with Vision and Sora

- Harvard Releases Dataset of 1 Million Books for AI Training

- Google DeepMind Announces GenCast

- Anthropic Launches Clio – Real-world Analysis of AI Use

- Google Launches NotebookLM Improvements

- X Launches Aurora – Grok’s New AI Image Generator

- GM Abandons Cruise Robotaxi – Leaving Tesla Alone in US

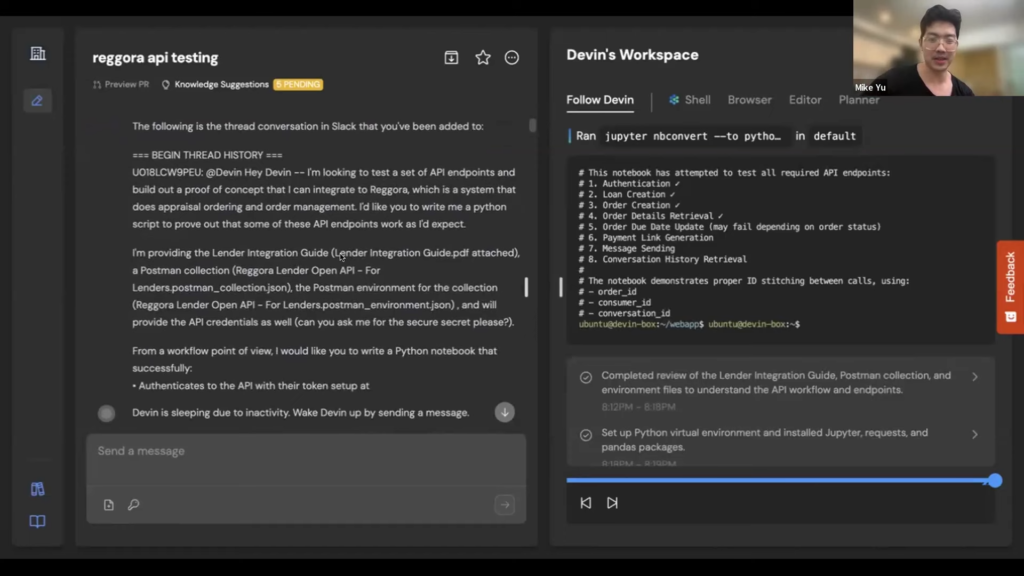

- AI Software Engineer Devin Now Available for $500/Month

Google News: Gemini 2.0, Deep Research, Mariner, Astra, Jules

https://blog.google/technology/google-deepmind/google-gemini-ai-update-december-2024

The News:

Gemini 2.0 – Next-gen AI with agentic capabilities

- Google just launched Gemini 2.0, designed for the “agentic era” of AI, with improved capabilities in multimodal processing, autonomous decision-making, and task execution.

- Key features of Gemini 2.0 include native image and audio generation, improved multimodal capabilities (text, images, audio, and video), and native tool use, including Google Search and Maps integration.

- Gemini 2.0 Flash is available now to developers through the Gemini API in Google AI Studio and Vertex AI.

Deep Research – AI research assistant

- Google also introduced Deep Research, a new AI tool that acts as a research assistant for Gemini Advanced users.

- Deep Research can browse the web, summarize information, and create detailed reports on complex topics.

- Deep Research uses Google Search’s framework to find relevant information, performing multiple searches and iterations to collect data.

- Deep Research utilizes Gemini’s advanced reasoning capabilities and a 1M token context window to create its reports.
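Google has not published how Deep Research orchestrates its searches. The following is therefore only a toy sketch of the general idea of iterative searching:

1. Run a query and keep what it returns.
2. Queue the follow-up queries suggested by the results.
3. Stop when nothing new turns up or a search budget runs out.

`TOY_INDEX` and `iterative_research` are hypothetical stand-ins, not a real search API.

```python
from collections import deque

# Hypothetical in-memory "search index": query -> (snippet, follow-up queries).
# This stands in for a real search backend and is NOT how Deep Research works internally.
TOY_INDEX = {
    "quantum error correction": ("Surface codes protect logical qubits.", ["surface code", "logical qubit"]),
    "surface code": ("A surface code arranges qubits on a 2D grid.", ["logical qubit"]),
    "logical qubit": ("A logical qubit is encoded in many physical qubits.", []),
}


def iterative_research(question, max_searches=10):
    """Breadth-first research loop: search, record the snippet, queue follow-up
    queries suggested by the result, and stop when the queue is empty or the
    search budget (max_searches distinct queries) is used up."""
    queue = deque([question])
    seen = set()
    notes = []
    while queue and len(seen) < max_searches:
        query = queue.popleft()
        if query in seen:
            continue  # skip queries we already ran
        seen.add(query)
        snippet, follow_ups = TOY_INDEX.get(query, ("", []))
        if snippet:
            notes.append(snippet)
        queue.extend(q for q in follow_ups if q not in seen)
    return notes


for line in iterative_research("quantum error correction"):
    print("-", line)
```

The budget parameter mirrors the trade-off a real research agent faces: more searches give broader coverage but take longer.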

Project Mariner – Web-based AI agent for all tasks

- Project Mariner is a new AI agent powered by Gemini 2.0 that can autonomously navigate and control web browsers.

- Project Mariner comes as a Chrome browser extension that can understand screen content, including text, images, code, and web forms. It achieved an 83.5% success rate on the WebVoyager benchmark for real-world web tasks.

- Project Mariner is currently only available to select “trusted testers” in the US.

Project Astra – Multilingual & Multimodal AI assistant

- Project Astra is Google's research prototype for a universal AI assistant and can interact through speech, text, images, and video. It is very similar to OpenAI's Advanced Voice Mode with Vision.

- Project Astra has 10 minutes of in-session memory and can use Google Search, Lens, and Maps.

- Project Astra is currently being tested by a select group of users.

Jules – An AI Agent That Fixes Code While You Sleep

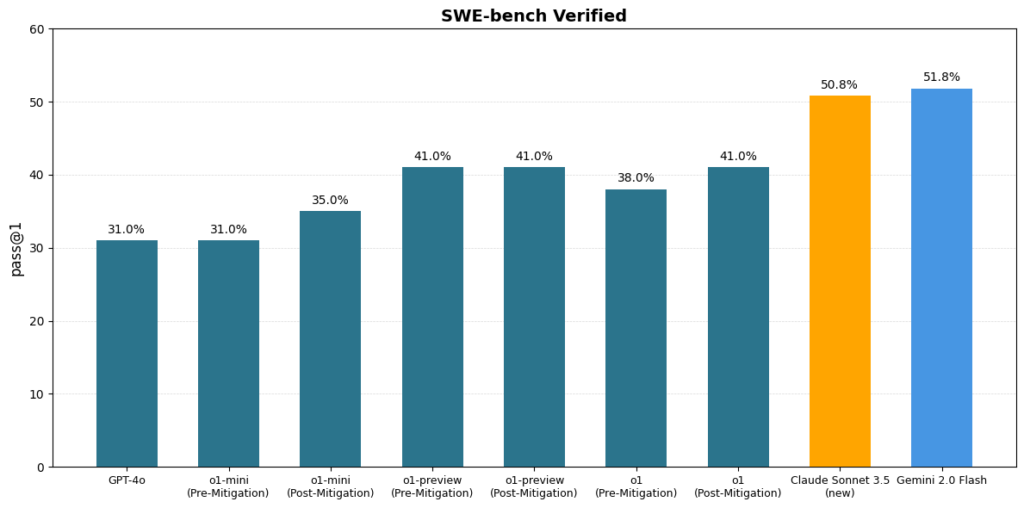

- Jules is an experimental AI-powered coding assistant that autonomously fixes code defects and bugs.

- Built on Google’s new Gemini 2.0 platform, Jules can analyze complex codebases and implement fixes across multiple files simultaneously.

- The AI assistant integrates directly with GitHub workflows for Python and JavaScript projects, creates multi-step plans for addressing issues, and prepares pull requests automatically.

- Jules is currently being tested by a select group of users.

My take: What an amazing week of news from Google! 2025 will be the year of interactive AI agents, and Google just kicked it off with Gemini 2.0, Deep Research, Astra, Mariner, and Jules! If you have three minutes, check out their launch video; it's very impressive. From what I have seen so far, Deep Research can trigger hundreds of Google searches for simple prompts, greatly outclassing other research engines like Perplexity. And Jules, with its direct GitHub integration, looks extremely promising! Google is not only back in the AI game, they are at the top, and next year we will use AI agents in ways we never thought were possible.

Read more:

- Google introduces Gemini 2.0: A new AI model for the agentic era

- Gemini: Try Deep Research and Gemini 2.0 Flash Experimental

- Gemini 2.0 now can edit image by talking to it, it’s insane. – x.com

- Introducing Gemini 2.0 | Our most capable AI model yet – YouTube

- The next chapter of the Gemini era for developers – Google Developers Blog

Google announces Willow Quantum Computing Chip

https://blog.google/technology/research/google-willow-quantum-chip

The News:

- Last week Google unveiled “Willow”, a quantum computing chip that can solve certain problems in 5 minutes that would take modern supercomputers 10 septillion years.

- The chip achieves a major breakthrough in quantum error correction: as more qubits are added, errors decrease exponentially, whereas in previous quantum systems adding qubits tended to increase errors.

- The performance is so remarkable that Google suggests it may indicate computation occurring across multiple universes: “It lends credence to the notion that quantum computation occurs in many parallel universes, in line with the idea that we live in a multiverse” (from the official Google announcement).

- Despite the achievement, experts note that Willow, with its 105 qubits, is still too small for meaningful real-world calculations, as millions of qubits would be needed for practical applications.

- The technology still requires extreme cooling to near absolute zero, which poses scaling challenges.
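"Errors decrease exponentially" can be illustrated with a simplified textbook model of surface-code error correction. This is an approximation, not Google's exact analysis.

- A distance-$d$ rotated surface code uses about $2d^2 - 1$ physical qubits per logical qubit: $d^2$ data qubits plus $d^2 - 1$ measurement qubits.
- Each step from $d$ to $d+2$ divides the logical error rate $\varepsilon_d$ by a suppression factor $\Lambda$.
- Error correction only helps when $\Lambda > 1$.

$$N_{\text{phys}}(d) = 2d^2 - 1, \qquad \varepsilon_d \approx \frac{\varepsilon_3}{\Lambda^{(d-3)/2}}$$

Take an illustrative $\Lambda = 2$ (an assumed value). Going from $d = 3$ (17 physical qubits) to $d = 7$ (97 physical qubits) gives $\varepsilon_7 \approx \varepsilon_3 / 2^2 = \varepsilon_3 / 4$: more qubits, fewer errors.

The same formula hints at why millions of qubits are needed. A single logical qubit already costs close to a hundred physical qubits at $d = 7$, and practical algorithms need many logical qubits at even larger distances.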

My take: Going from 105 qubits to millions will probably take quite some time, and I don't think anyone even wants to guess how many years it will take to build a quantum computer that can do something practical. Still, quantum computing is now moving forward rapidly, and it would not surprise me if we soon see a giant leap in performance like the one we had with AlexNet in 2012, which, thanks to GPUs, was the first breakthrough that made deep neural networks truly practical.

Read more:

- Google says its new quantum chip indicates that multiple universes exist | TechCrunch

- Google claims quantum milestone — but can’t solve real-world problems

OpenAI News: Advanced Voice Mode with Vision and Sora

The News:

Advanced Voice Mode with Vision

- OpenAI just launched Advanced Voice Mode with Vision for ChatGPT, enabling real-time visual analysis through phone cameras. The feature is exclusively available for ChatGPT Plus, Team, and Pro subscribers.

- Users can point their phones at objects and receive near real-time responses from ChatGPT. The feature also includes screen sharing capabilities to help with app navigation or assist with math problems.

- There is no launch timeline for Advanced Voice Mode with Vision in the EU, Switzerland, Iceland, Norway, or Liechtenstein. Enterprise and Edu users will get access in January 2025.

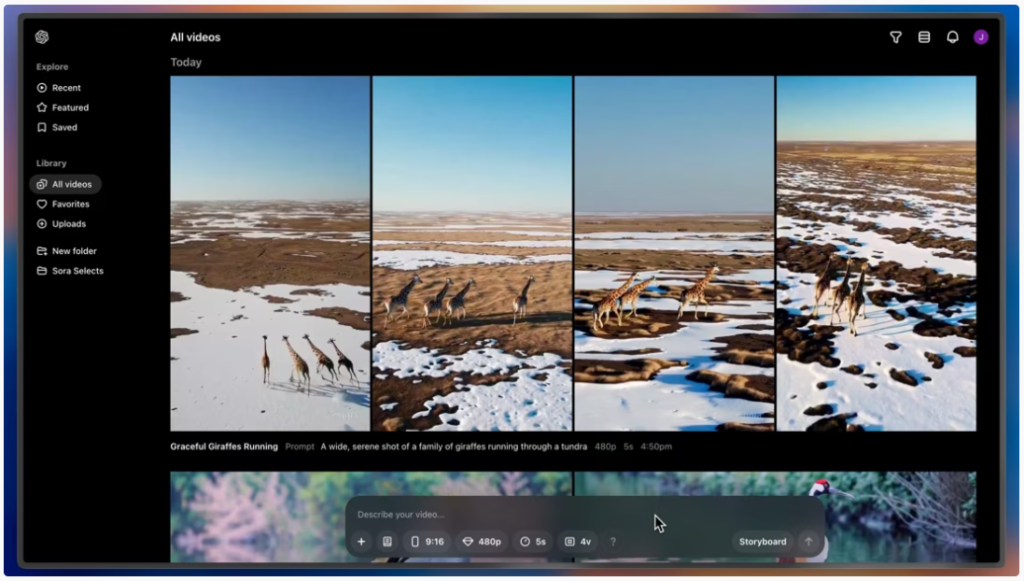

Sora

- OpenAI also publicly released Sora last week, its AI video generation tool that creates videos from text descriptions.

- Sora can generate Full HD videos up to 20 seconds long in widescreen, vertical, or square formats from text prompts, extend existing videos, and generate videos from static images.

- Sora is available to ChatGPT Plus and Pro subscribers, but not in the UK, EU, or Switzerland.

My take: Both of these tools look amazing. Sora still has several issues, such as rendering realistic humans and showing correct physics, but when used within its limitations I believe the possibilities are great. That is, for everyone outside Europe: neither Vision nor Sora is launching here, and there are no immediate plans to change that.

Read more:

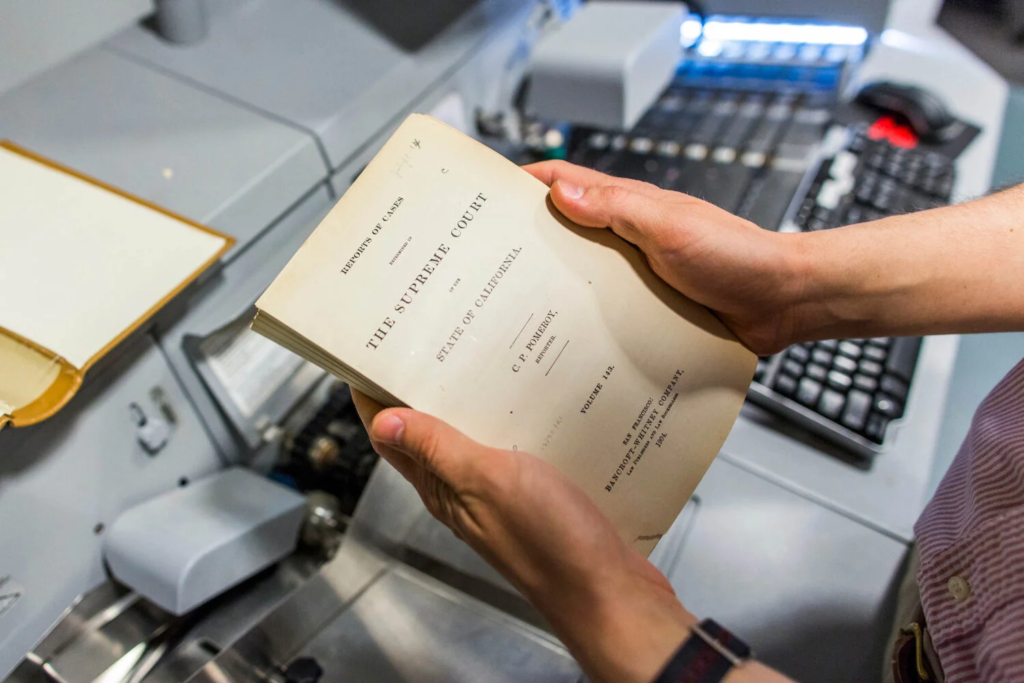

Harvard Releases Dataset of 1 Million Books for AI Training

The News:

- Harvard University just announced a new dataset containing nearly 1 million public-domain books for AI model training. The dataset includes classic works from Shakespeare, Charles Dickens, and Dante, alongside specialized content like Czech math textbooks and Welsh dictionaries.

- Microsoft and OpenAI both provided funding for Harvard's Institutional Data Initiative (IDI) to create the dataset. The books were originally scanned as part of the Google Books project and are no longer protected by copyright.

- As a comparison, the dataset is approximately five times larger than the Books3 dataset used to train Meta’s Llama model.

- The dataset has undergone rigorous review and quality control measures, and Harvard envisions the dataset becoming a foundational resource for AI development, similar to how Linux became a foundational operating system.

My take: This is great news for smaller AI companies and researchers, who now have access to a large volume of high-quality training data. This project involved collaboration between Harvard, Google, Microsoft, and OpenAI, and we need more projects like this to meet the ever-growing need for new data to train next-generation models.

Read more:

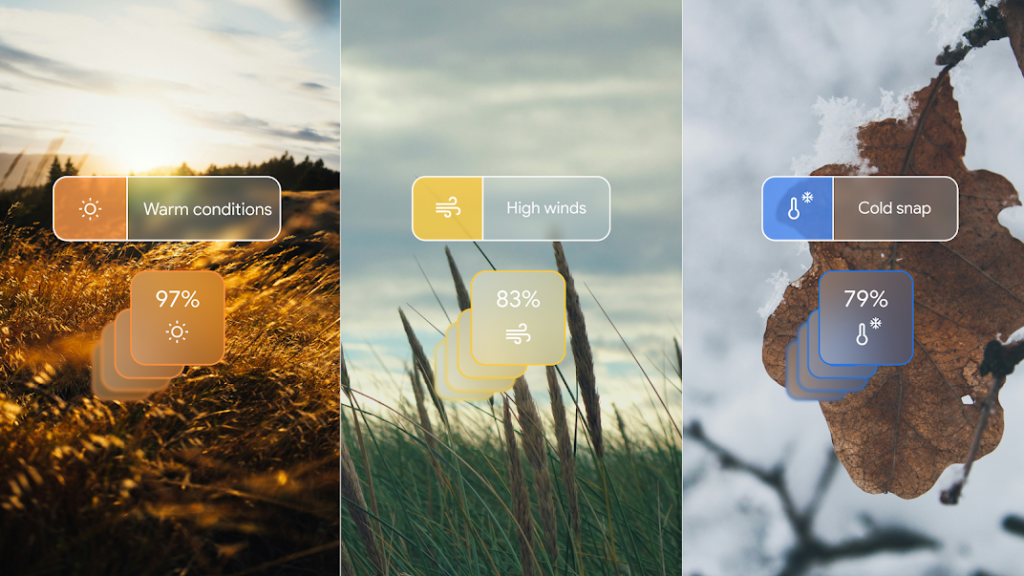

Google DeepMind Announces GenCast

The News:

- Google DeepMind announced GenCast, a new AI model that provides accurate weather forecasts up to 15 days ahead. The model outperformed the current industry standard (ECMWF's ENS system) in 97.2% of 1,320 test cases from 2019.

- GenCast can generate 50 different weather scenarios in just 8 minutes, compared with several hours for traditional systems. It was trained on 40 years of historical weather data (1979-2018), including temperature, wind speed, and atmospheric pressure.

- GenCast is available as an open model, with its code, weights, and forecasts being publicly accessible.

My take: I previously reported on Microsoft Aurora in Week 24, which outperformed traditional models on 92% of the evaluated targets across many variables. Those were impressive figures back then, but GenCast beating ECMWF's ENS in 97.2% of test cases is in a completely different league. GenCast will soon be integrated into Google Search and Google Maps, and its real-time and historical forecasts are already available to researchers and for use in existing operational models. My prediction is that 2025 will be the year we get highly accurate, kilometer-scale weather forecasts up to 36 hours ahead at 10-minute resolution. Want an AI agent to help you plan your dog walk with minimal rain? It can do that. Want an AI to plan your evening jog with minimal wind? Check. Combine GenCast with AI agents and you get an extremely powerful tool!
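A 50-member ensemble like GenCast's is what makes the dog-walk idea above work. Instead of one forecast you get 50 plausible scenarios, and the share of scenarios that show rain in a given hour can be read as a rain probability.

The sketch below makes some assumptions:

- It uses a hypothetical data layout: a list of members, each a list of hourly precipitation totals in mm.
- The numbers are made up.
- It does not use GenCast's actual output format or any real API.

```python
from statistics import mean


def rain_probability(ensemble, threshold_mm=0.1):
    """For each hour, the fraction of ensemble members forecasting at least
    threshold_mm of rain. `ensemble` is a list of members, each a list of
    hourly precipitation values in mm (hypothetical layout)."""
    n_members = len(ensemble)
    n_hours = len(ensemble[0])
    return [
        sum(member[h] >= threshold_mm for member in ensemble) / n_members
        for h in range(n_hours)
    ]


def best_walk_start(ensemble, walk_hours=1, threshold_mm=0.1):
    """Start hour of the walk window with the lowest average rain probability
    (earliest window wins ties)."""
    probs = rain_probability(ensemble, threshold_mm)
    starts = range(len(probs) - walk_hours + 1)
    return min(starts, key=lambda s: mean(probs[s:s + walk_hours]))


# Toy 50-member ensemble over 6 hours (synthetic numbers, not GenCast output):
# 40 members are wet most of the time, 10 are mostly dry, and all are dry in hour 4.
ensemble = [[2.0, 1.5, 0.8, 0.3, 0.0, 0.5] for _ in range(40)] + \
           [[0.0, 0.0, 0.2, 0.0, 0.0, 0.0] for _ in range(10)]

print([round(p, 2) for p in rain_probability(ensemble)])  # [0.8, 0.8, 1.0, 0.8, 0.0, 0.8]
print(best_walk_start(ensemble))                          # 4
```

Counting members above a threshold is the simplest way to turn an ensemble into a probability. A real agent would also weigh wind, temperature, and the user's schedule.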

Read more:

- DeepMind’s GenCast AI beats traditional weather forecasting for the first time

- Google Unveils GenCast Weather Forecasting Revolution – The Pinnacle Gazette

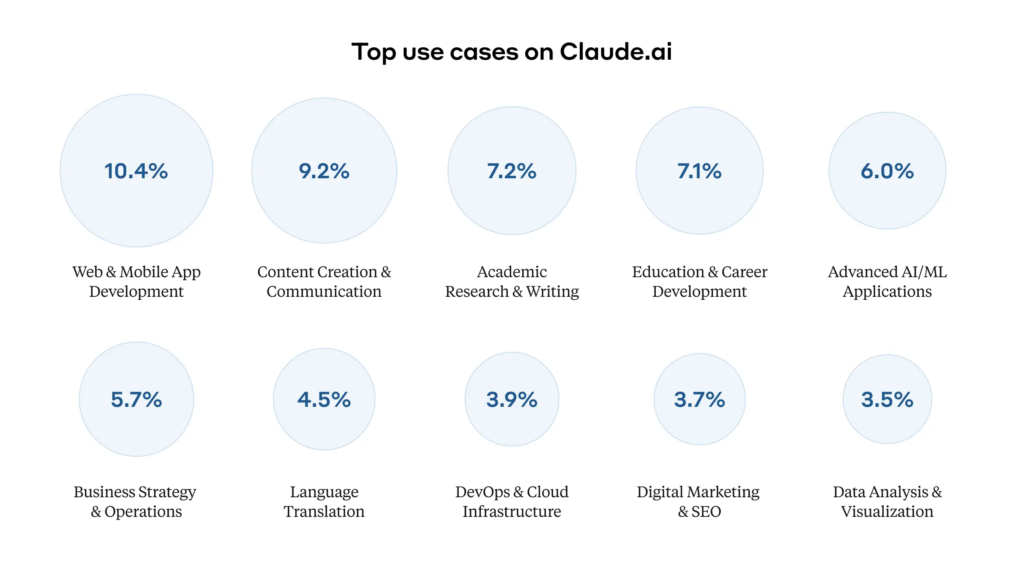

Anthropic Launches Clio – Real-world Analysis of AI Use

https://www.anthropic.com/research/clio

The News:

- Anthropic launched Clio (Claude Insights and Observations), a new AI system that analyzes how people use Claude while preserving user privacy.

- Clio works by analyzing conversations and clustering similar interactions together to identify usage patterns and potential safety concerns.

- Clio can surface both ordinary usage patterns and policy violations; one example is monitoring election-related activity. Clio has already helped improve safety systems by identifying patterns of violative behavior and strengthening safety classifiers.
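Clio's own pipeline has a model summarize conversations and then clusters those summaries. The sketch below replaces all of that with pre-assigned topic labels on hypothetical data. It only illustrates the privacy-preserving aggregation idea: count usage per cluster, but report a cluster only if enough distinct users contribute to it.

The threshold value and the function name `aggregate_topics` are assumptions for illustration, not Anthropic's implementation.

```python
from collections import defaultdict


def aggregate_topics(conversations, min_unique_users=3):
    """Group (user_id, topic) pairs by topic and report conversation counts only
    for topics seen from at least `min_unique_users` distinct users, so small,
    potentially identifying clusters are never surfaced."""
    users_by_topic = defaultdict(set)
    count_by_topic = defaultdict(int)
    for user_id, topic in conversations:
        users_by_topic[topic].add(user_id)
        count_by_topic[topic] += 1
    return {
        topic: count_by_topic[topic]
        for topic, users in users_by_topic.items()
        if len(users) >= min_unique_users
    }


# Hypothetical, pre-labelled conversations (user id, topic).
conversations = [
    ("u1", "web development"), ("u2", "web development"), ("u3", "web development"),
    ("u1", "essay editing"), ("u4", "essay editing"), ("u5", "essay editing"), ("u5", "essay editing"),
    ("u6", "rare niche topic"),
]

print(aggregate_topics(conversations))  # {'web development': 3, 'essay editing': 4}
```

The "rare niche topic" cluster is dropped because only one user contributed to it. That is the kind of safeguard that lets analysts see broad patterns without exposing individuals.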

My take: Anthropic has published the technical details of Clio in a research paper, encouraging other AI companies to implement similar technologies to keep their systems safe while protecting user privacy. Innovations such as Clio become especially valuable as we move into a world full of AI agents, where we need ways to track what millions of agents are doing without compromising the privacy of users.

Read more:

- How Claude uses AI to identify new threats

- Paper: Clio: Privacy-Preserving Insights into Real-World AI Use

Google Launches NotebookLM Improvements

https://blog.google/technology/google-labs/notebooklm-new-features-december-2024

The News:

- Google just announced major updates to NotebookLM, including a complete redesign, the launch of NotebookLM Plus, and a switch to Gemini 2.0 Flash.

- NotebookLM Plus is a new enterprise offering. It includes 5x more Audio Overviews, notebooks, and sources per notebook, customizable style and tone settings, and shared team notebooks with usage analytics.

- Audio Overviews now also include an interactive mode (BETA) that lets users join the conversation and ask questions by voice! Here's how it works:

- (1) Create a new Audio Overview, (2) Select the Interactive mode (BETA) button, (3) Press ‘Join’ during playback and then ask questions when prompted by the hosts. The hosts will respond to your questions using information from your sources and then continue with the original discussion.

My take: I still miss the option to swap out the two virtual hosts for other AI personas. With a Plus account it's now possible to customize the style and tone of responses to some degree, but I would have liked full persona customization. NotebookLM Plus is currently $20/month and will be included in the Google One AI Premium subscription starting in 2025.

X Launches Aurora – Grok’s New AI Image Generator

https://x.ai/blog/grok-image-generation-release

The News:

- xAI just announced Aurora, a new AI image generation model that will be integrated into its Grok chatbot.

- Aurora is currently accessible through the Grok tab on X’s platform in select countries, with a global rollout planned within a week for all users.

- Unlike most other models, Aurora has minimal content restrictions, allowing generation of public figures and copyrighted characters, though it maintains restrictions on explicit content.

- Aurora is available to X Premium subscribers, with X Premium+ users receiving expanded usage limits.

My take: According to xAI, Aurora was trained on "billions of examples from the Internet". As you can probably guess, that means Aurora is not available in the EU and won't be for the foreseeable future. Based on early feedback, however, the model has problems rendering multiple objects together and displaying hands and arms, so I don't feel that we in the EU are missing out on anything important here.

Read more:

- X says its new image generator, Aurora, will launch for all users within the week | TechCrunch

- Grok Aurora image generator is live — here’s how to use it | Tom’s Guide

GM Abandons Cruise Robotaxi – Leaving Tesla Alone in US

https://news.gm.com/home.detail.html/Pages/news/us/en/2024/dec/1210-gm.html

The News:

- General Motors just announced that it will stop funding its Cruise robotaxi division and end all autonomous taxi development.

- For perspective, GM has invested over $10 billion in Cruise since acquiring it in 2016. By cancelling all robotaxi work, GM expects to save more than $1 billion annually.

- GM will integrate Cruise's technical team into its main operations, focusing instead on driver assistance technologies like Super Cruise.

- Kyle Vogt, Cruise's founder and former CEO, posted on X: "In case it was unclear before, it is clear now: GM are a bunch of dummies".

My take: Tesla is now the only major US automaker still betting on robotaxis. My view is that many companies in 2016 actually believed fully autonomous robotaxis could launch within 2-3 years. As the years went by without much progress, I fully understand the frustration of top management. Building autonomous cars is extremely difficult, but Waymo has shown that it is possible; we just need new technology that does not cost over $200,000 per vehicle to manufacture. And this is something we still do not have today.

AI Software Engineer Devin Now Available for $500/Month

https://www.cognition.ai/blog/devin-generally-available

The News:

- Cognition Labs has made its AI software engineer 'Devin' available for $500 per month. Devin, announced earlier this year, is an AI platform that can perform complex coding tasks autonomously, including writing code, creating and deploying websites and apps, and fixing bugs.

- The subscription includes access to Devin’s Slack integration, their IDE extension and API, as well as an onboarding session and support from Cognition Labs’ engineering team.

- What makes Devin unique is that it can complete entire software development projects from start to finish, learn unfamiliar technologies by searching the Internet, build and deploy apps end-to-end, and autonomously find and fix bugs in codebases.

- Cognition Labs has not disclosed what LLMs Devin is based on.

My take: My view is that current LLMs are not yet capable enough to support complex tools like Devin, so I am very unsure of the actual value this tool brings today. I predict we will have dozens of tools like Devin within 6-12 months, and I believe the winner will be the one that companies already trust and have agreements with. I also think we need proof that agents like Google Jules (see above) can actually do well on simpler tasks before we let loose something like Devin, which supposedly can handle every task in software engineering. Devin was announced 8 months ago in a launch video that was mostly fake, and I would be very cautious about paying $500/month for Devin in its current state.