“Browsing no longer needs to be a lonely experience”. Microsoft just launched Copilot Vision, which will keep you company while shopping, planning the weekend or playing computer games. Unsure if a sweater will look good on you? Just ask Copilot. In Microsoft’s vision of the future, Copilot is your best friend who is always there for you, giving you honest advice and helping you plan your life.

Other news this week: ChatGPT greatly outperformed doctors in diagnostic accuracy in a study, OpenAI launched o1, o1-pro and a Pro Subscription for $200/month, ElevenLabs launched “Conversational AI Platform” for simple agent configurations, Supabase launched “AI Assistant v2”, Google launched PaliGemma 2 – the latest version of their vision-language model, and Meta launched Llama 3.3!

THIS WEEK’S NEWS:

- Microsoft Launches Copilot Vision – “A New Way to Browse”

- ChatGPT Outperforms Doctors in Diagnostic Study

- OpenAI Launches o1, o1-pro and Pro Subscription

- ElevenLabs Launches Conversational AI Platform

- Supabase Launches AI Assistant v2

- Google Launches PaliGemma 2 – Powerful Vision-Language Models

- Google DeepMind Introduces Genie 2 – Large-scale Foundation World Model

- NVIDIA Introduces Fugatto Sound Machine

- Meta Launches Llama 3.3

- Amazon Announces Nova Foundation AI Models

- Kling AI Launches Virtual Try-On for Fashion Visualization

Microsoft Launches Copilot Vision – “A New Way to Browse”

The News:

- Microsoft just launched a new feature called “Copilot Vision”, which allows the Copilot Assistant to see and interact with your web pages in real time.

- Use cases from Microsoft include planning weekend activities, shopping and “co-gaming” with an AI.

- According to Microsoft, “Browsing no longer needs to be a lonely experience with just you and all your tabs.”

- Availability: Currently available to paying Copilot subscribers in “Copilot Labs”, and set for general release in early 2025.

My take: The promotional videos follow the typical US Microsoft style, which feels like an alternate universe where people behave in ways no normal human would ever consider. Like the IT engineer browsing sweaters and asking the AI assistant which ones would look good on him. Well, for all of you who feel alone when browsing, Microsoft has you covered in just a few more months. Hang in there!

Read more:

- Co-planning with Copilot Vision – YouTube

- Co-shopping with Copilot Vision – YouTube

- Co-gaming with Copilot Vision – YouTube

ChatGPT Outperforms Doctors in Diagnostic Study

https://www.sciencedaily.com/releases/2024/11/241113123419.htm

The News:

- A new study by UVA Health tested ChatGPT Plus against physicians in diagnostic accuracy, with results showing ChatGPT achieving 92% accuracy compared to 76% for doctors using AI and 74% for those using conventional methods.

- The study involved 50 physicians across three major hospitals, testing their diagnostic abilities on complex clinical cases.

- Doctors using ChatGPT completed their diagnoses slightly faster (519 seconds vs 565 seconds) but showed minimal improvement in accuracy.

- Physicians often disregarded AI suggestions when they conflicted with their own opinions, highlighting the need for better AI integration training.

- The study also used real-life patient cases that were not previously published, ensuring ChatGPT hadn’t trained on them.
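
To put the figures above side by side, here is a minimal sketch that only does arithmetic on the numbers quoted in the bullets. The variable names are illustrative and not taken from the study.

```python
# Figures as quoted in the bullets above (accuracy in percent, time in seconds).
accuracy = {"chatgpt_alone": 92, "doctors_with_ai": 76, "doctors_conventional": 74}
seconds = {"with_chatgpt": 519, "conventional": 565}

# Gap between ChatGPT on its own and doctors using conventional methods.
gap_vs_conventional = accuracy["chatgpt_alone"] - accuracy["doctors_conventional"]

# Improvement doctors got from having ChatGPT available.
ai_uplift = accuracy["doctors_with_ai"] - accuracy["doctors_conventional"]

# Time saved per diagnosis, absolute and relative.
time_saved = seconds["conventional"] - seconds["with_chatgpt"]
time_saved_pct = 100 * time_saved / seconds["conventional"]

print(f"ChatGPT alone vs conventional: +{gap_vs_conventional} points")
print(f"Doctors with AI vs conventional: +{ai_uplift} points")
print(f"Time saved: {time_saved} s ({time_saved_pct:.1f}%)")
```

In other words, giving doctors access to ChatGPT moved accuracy by 2 percentage points and saved 46 seconds (about 8%) per case, while ChatGPT on its own was 18 points ahead.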

My take: With the doctors removed from the loop entirely, diagnostic accuracy was 92%. As soon as doctors were involved, accuracy dropped below 80%. Next year I am willing to bet ChatGPT will be over 95%, and with tools like PaliGemma 2 the accuracy could probably be even higher. The main question then becomes: how can we get doctors to use and trust AI in their daily work? In the EU, AI systems used for medical decision-making are classified as high-risk, and since ChatGPT is not approved for medical diagnostics in the EU, at least we won’t have this issue here for years to come. We will have to stick with our 74% accuracy and be happy with it.

Read more:

- ChatGPT Defeated Doctors at Diagnosing Illness – The New York Times

- ChatGPT beat doctors at diagnosing medical conditions: study

OpenAI Launches o1, o1-pro and Pro Subscription

https://openai.com/index/introducing-chatgpt-pro

The News:

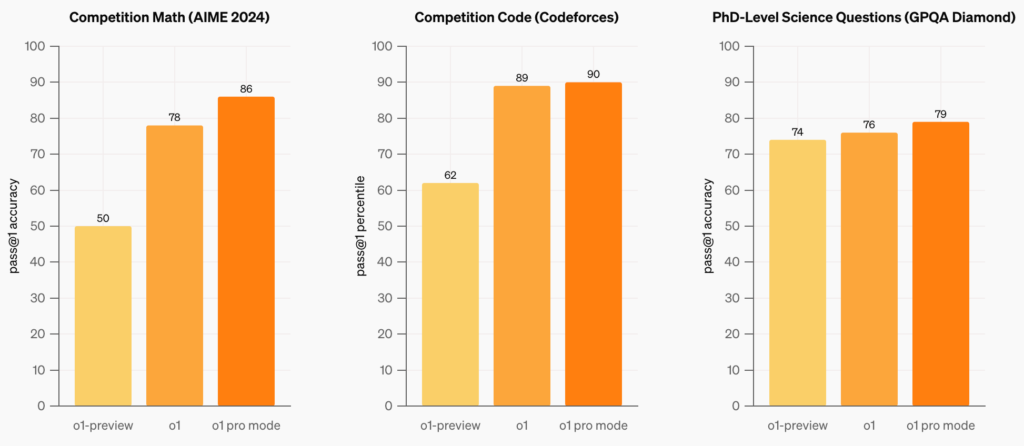

- OpenAI just launched the full version of “o1”, their test-time-compute Large Language Model that solves complex problems by reasoning through them step by step.

- o1 is now multimodal, and produces faster, more accurate responses than o1-preview, with 34% fewer errors on complex queries.

- OpenAI also introduced a new $200 Pro plan that includes unlimited access to o1, GPT-4o, Advanced Voice, and future compute-intensive features.

- Pro plan subscribers get exclusive access to the new ‘o1 pro mode’, which features a 128k context window and stronger reasoning on difficult problems (o1 in the Plus subscription has only a 32k context window).

- Availability: o1 and o1-mini are not yet available in the API, only through the webpage.

What you might have missed: In the launch video above, OpenAI mentions that “right now we’re in the process of swapping all our GPUs from o1 pro preview to o1”. This probably explains the wide variation in test reports, with many reporting that the quality of o1 Pro was worse than o1-preview, when it should in fact have been much higher.

- 25 Experiments In o1 Pro Mode – What Worked, What Didn’t, and Why : “o1 Pro mode is still very much no ready for every day use”

- I spent 8 hours testing o1 Pro ($200) vs Claude Sonnet 3.5 ($20) : “Unless you specifically need vision capabilities or that extra 5-10% accuracy for specialized tasks, Claude Sonnet 3.5 at $20/month provides better value for most users than o1 Pro at $200/month.”

- o1-pro kicks ass, benchmarks are now officially useless : “With the release of o1 models it’s also clear most of the stupid prompts people try new models with are also quite worthless.”

My take: For programming, o1 still seems better at refactoring than at generating new code, especially when compared to Claude 3.5. To me, o1 is like a super smart academic who loves spending weekends solving logic puzzles, but has major issues doing simple tasks like speaking to other people or writing code that is easy to change and easy to follow. o1 still won’t replace Claude 3.5 for me as a coding assistant, but it’s a very good complement.

ElevenLabs Launches Conversational AI Platform

https://elevenlabs.io/blog/conversational-ai

The News:

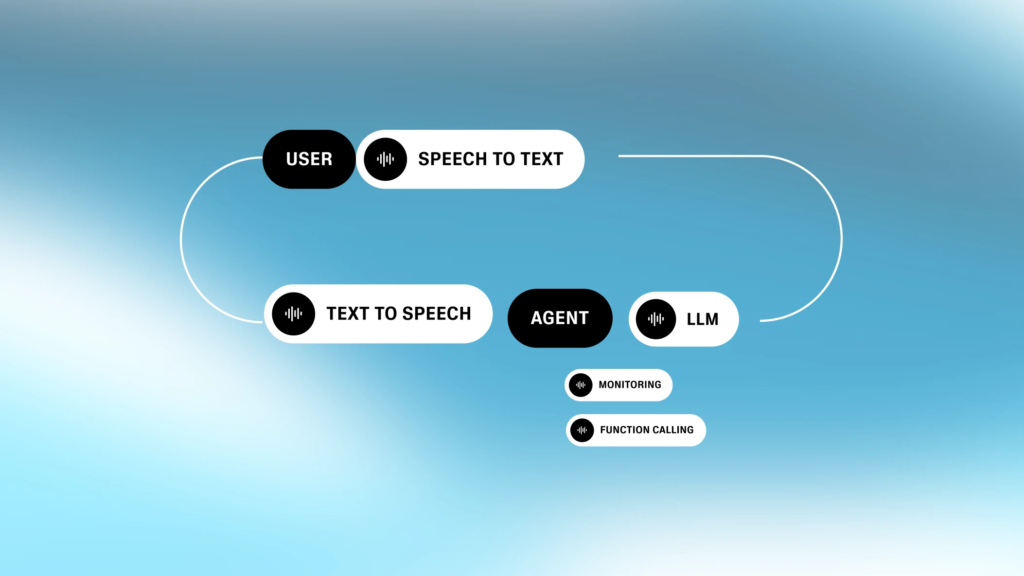

- ElevenLabs just launched a new platform that allows users to create interactive voice agents with customizable personalities, supporting multiple languages and various AI models like Gemini, GPT, and Claude.

- The platform combines text-to-speech, speech-to-text, and language models with natural turn-taking capabilities and interruption handling.

- Users can create agents for purposes like customer support, tutoring, or gaming characters, with pricing starting at $0.10 per minute for business plans.

- The system also includes native Twilio integration for phone system deployment and supports 31 languages.

- Developers can access the platform through SDKs for Python, JavaScript, React, and Swift, or directly via WebSocket API.

- Availability: Now.
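
As a rough way to reason about the per-minute pricing above, here is a minimal cost sketch. It uses only the quoted starting rate of $0.10 per minute. The call volume and call length are hypothetical inputs, and the function name is illustrative rather than part of any ElevenLabs SDK.

```python
def estimate_monthly_cost_usd(
    calls_per_day: int,
    avg_minutes_per_call: int,
    days: int = 30,
    rate_cents_per_minute: int = 10,  # $0.10/min, the starting price quoted above
) -> float:
    """Estimate monthly spend for a voice agent billed per minute.

    Works in whole cents to avoid floating-point rounding on the rate.
    """
    total_minutes = calls_per_day * avg_minutes_per_call * days
    return total_minutes * rate_cents_per_minute / 100


# Hypothetical workload: 200 support calls a day, 3 minutes each.
monthly_cost = estimate_monthly_cost_usd(calls_per_day=200, avg_minutes_per_call=3)
print(f"${monthly_cost:,.2f} per month")  # prints "$1,800.00 per month"
```

Actual costs depend on the plan and on any separate charges for the underlying language model, so treat this as a lower-bound estimate.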

My take: We are very close to a future where it’s almost impossible to hear that the person you just called is an AI agent and not an actual human. The Conversational AI Platform by ElevenLabs is the best yet, and if you have not yet seen the demos, take one minute and go watch them. They are great!

Read more:

- Introducing ElevenLabs Conversational AI – YouTube

- ElevenLabs drops new conversational AI — it’s as natural as chatting to a human | Tom’s Guide

Supabase Launches AI Assistant v2

https://supabase.com/blog/supabase-ai-assistant-v2

The News:

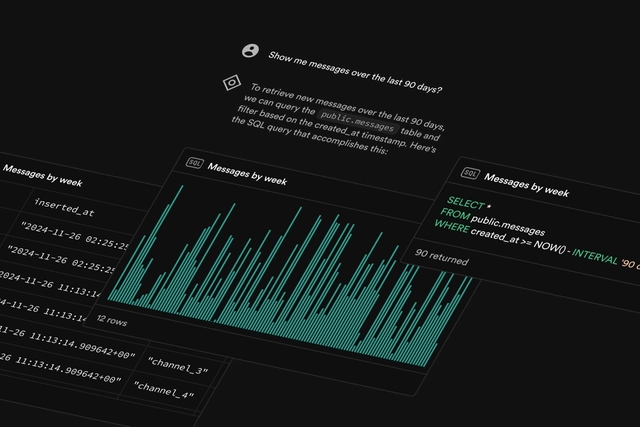

- Supabase just released version 2 of their AI Assistant, a tool that helps developers design and manage PostgreSQL databases with text prompts.

- The assistant introduces new capabilities including schema design, data querying with automatic chart generation, error debugging, and automated creation of PostgreSQL policies, functions, and triggers.

- No data is sent to the underlying language model during queries, maintaining data privacy while still providing analytical capabilities.

- Availability: Now.

My take: Supabase is my #1 tool for working with SQL databases, and with the new AI Assistant v2 things just got a lot better. It’s not that you cannot do these things by hand, it’s just that things go so much faster with the AI Assistant. Supabase themselves describe the AI Assistant as “Cursor for databases” and I tend to agree.

Read more:

- We improved Supabase AI … A lot! – YouTube

- Paul Copplestone on LinkedIn: Today we’re releasing the Supabase AI assistant v2. It’s like Cursor for databases

Google Launches PaliGemma 2 – Powerful Vision-Language Models

The News:

- PaliGemma, launched in May 2024, is Google’s vision-language AI model that can process pictures and describe what it sees in natural language.

- PaliGemma 2 is a significant upgrade to PaliGemma that can now generate detailed descriptions of images, including actions, emotions, and narrative context.

- The model comes in various sizes (3B to 28B parameters) and various resolutions (up to 896px).

- PaliGemma 2 shows “leading performance” in specialized tasks like chemical formula recognition, music score interpretation, spatial reasoning, and X-ray report generation.

- Availability: Now, including the EU.

My take: In a recent study, ChatGPT gave better medical diagnoses than doctors, with a diagnostic accuracy of 92%. PaliGemma 2 will help increase that accuracy further by understanding the deeper meaning of images such as X-rays. For those of us who live in the EU, however, using this model could be problematic. Under the EU AI Act, AI systems that identify or infer emotions from biometric data are classified as high-risk and are subject to strict regulations. Even if the feature is not used, just having it in the model means that it can be subject to compliance obligations. So let’s hope they remove the emotion recognition from the model in the future, so we in the EU can use it for other things like X-ray report analysis.

Read more:

- Google releases PaliGemma 2, its latest open source vision language model

- Questionable: Google’s PaliGemma 2 can recognize emotions | heise online

Google DeepMind Introduces Genie 2 – Large-scale Foundation World Model

https://deepmind.google/discover/blog/genie-2-a-large-scale-foundation-world-model

The News:

- Google DeepMind just introduced Genie 2, a large-scale multimodal foundation world model that converts single images into interactive, playable 3D environments with real-time physics, lighting effects, and player controls.

- Genie 2 is a world model, meaning it can simulate virtual worlds, including the consequences of taking any action (e.g. jump, swim, etc.).

- Genie 2 maintains spatial memory, remembering areas players have visited, even when they’re off-screen.

- Genie 2 works with keyboard and mouse inputs, supporting first and third-person perspectives with 720p output.

- Availability: Unknown, no official release date announced.

My take: Genie 2 is not meant to replace game engines, but rather to complement them for prototyping and game design. The longer Genie 2 runs, the more memory it uses for its spatial memory, which means it does not scale to longer game sessions. For idea generation, level design and game prototyping it could definitely have its uses, but it is still very early. It will be interesting to see next year whether any large game studios have adopted it into their process, if it ever gets released.

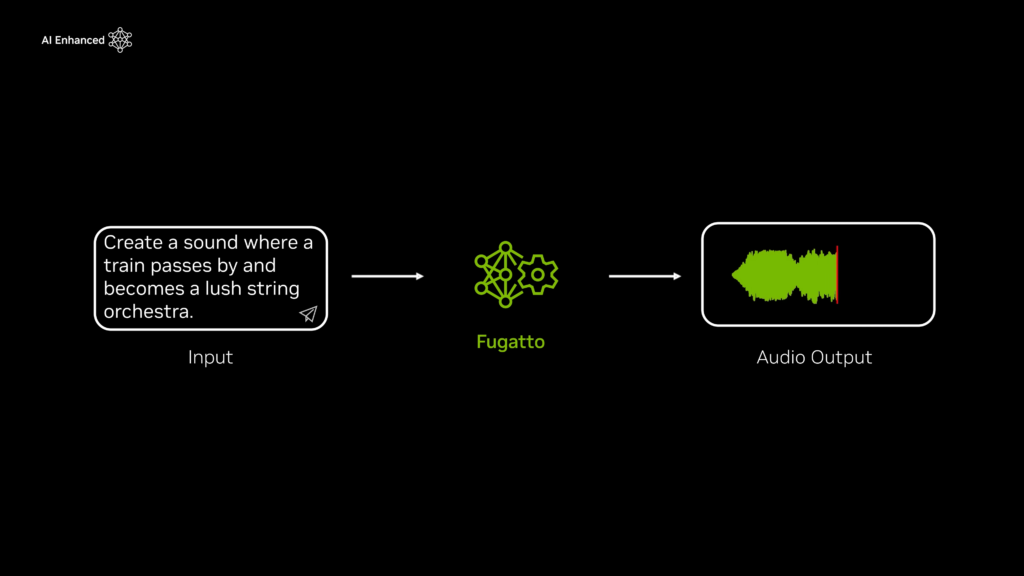

NVIDIA Introduces Fugatto Sound Machine

https://blogs.nvidia.com/blog/fugatto-gen-ai-sound-model

The News:

- NVIDIA just introduced Fugatto, an AI model that can generate and transform audio, music, and voices using text prompts.

- It can perform tasks like adding instruments to existing songs, changing voice characteristics, and creating entirely new soundscapes that evolve over time.

- Unlike previous models, Fugatto can create sounds beyond its training data, such as making a trumpet bark or a saxophone meow.

- Fugatto was trained on a diverse dataset, so it has both multi-accent and multilingual functionality.

- Availability: No immediate plans for release.

My take: This is an amazing and complex model, and it has the potential to make life much easier for all Foley artists creating audio for movies. My favorite feature is “temporal interpolation” that allows for sounds evolving over time, which could be especially interesting for game and music production. The model, while impressive, looks like it will stay as an internal prototype with no planned release date due to “risks with generative technology”.

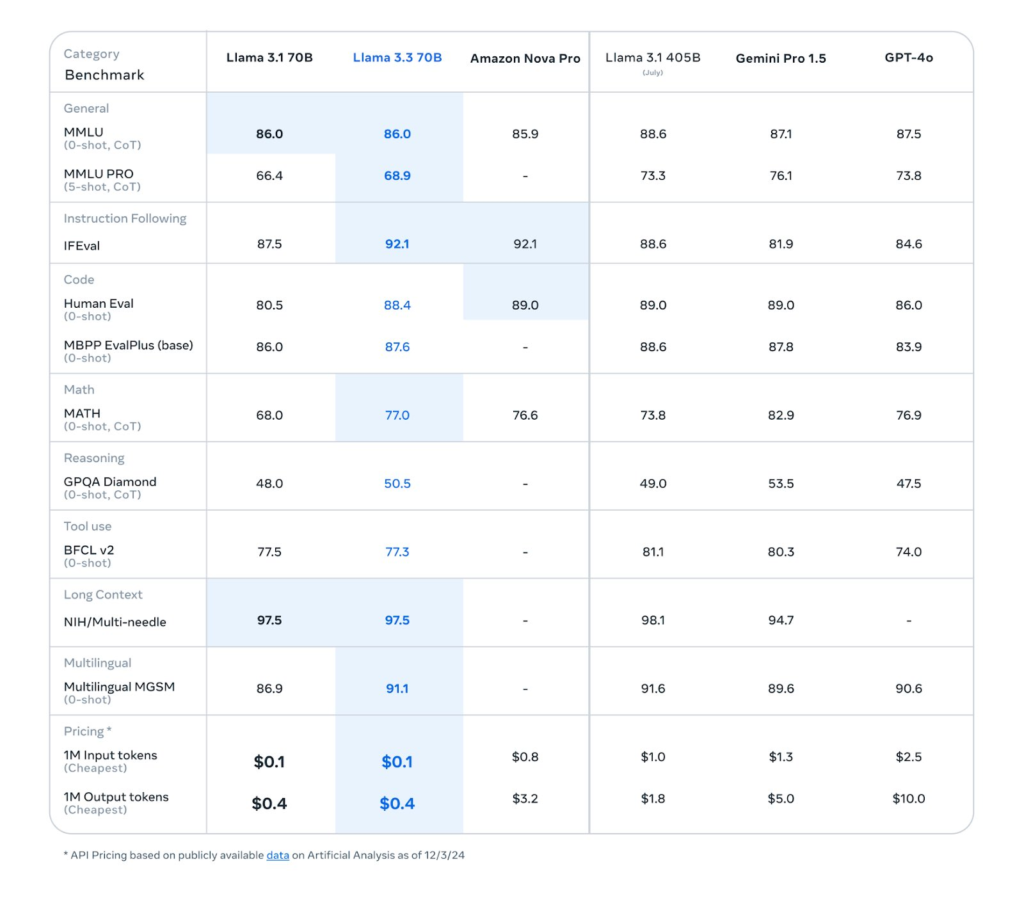

Meta Launches Llama 3.3

https://techcrunch.com/2024/12/06/meta-unveils-a-new-more-efficient-llama-model/

The News:

- Meta just released Llama 3.3 70B, a new language model that matches the performance of their much larger 405B parameter model while requiring significantly less computational resources.

- The model shows improved capabilities in coding, reasoning, and general knowledge tasks, outperforming competitors like Google’s Gemini 1.5 Pro and OpenAI’s GPT-4 on several benchmarks.

- The model supports multiple languages including English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

- Availability: Now.
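
To make the 70B versus 405B difference concrete, here is a back-of-the-envelope sketch of how much memory the weights alone would need. It assumes memory is simply parameter count times bytes per parameter, with 1 GB taken as 10^9 bytes. It ignores the KV cache, activations and runtime overhead, so real requirements are higher. The precision labels and function name are illustrative.

```python
# Bytes needed to store one parameter at common precisions (assumed values).
BYTES_PER_PARAM = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}


def weight_memory_gb(params_billions: float, precision: str) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 1e9 bytes).

    params_billions * 1e9 params * bytes_per_param / 1e9 bytes per GB
    simplifies to params_billions * bytes_per_param.
    """
    return params_billions * BYTES_PER_PARAM[precision]


for name, size in [("Llama 3.3 70B", 70), ("405B model", 405)]:
    for precision in BYTES_PER_PARAM:
        print(f"{name:>14} @ {precision:>6}: {weight_memory_gb(size, precision):6.1f} GB")
```

Under these assumptions the 70B model needs about 140 GB for 16-bit weights, compared with about 810 GB for the 405B model. That is roughly the difference between a single multi-GPU server and a small cluster.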

My take: You have probably seen several people saying “2024 was the year when open-source AI caught up with closed source”. Open-source AI is definitely improving, but using Llama 3.3 for coding is like trying to code using only your nose on the keyboard: not a fun experience, at least compared to using Claude 3.5. Meta is currently building a new $10 billion data center in Louisiana for future model development, and maybe this is what is required to finally catch up with and even surpass closed-source models. I think the main problem with open-source models is that they have to keep the size down so that anyone can actually run them on regular hardware, and this makes it hard to compete with much larger models from closed-source companies.

Read more:

- Meta’s new Llama 3.3 70B Instruct model now available on watsonx.ai

- Meta Launches Llama 3.3 70B AI Model with Enhanced Performance at Lower Cost

Amazon Announces Nova Foundation AI Models

https://www.aboutamazon.com/news/aws/amazon-nova-ai-canvas-reel-aws-reinvent

The News:

- Last week Amazon Web Services (AWS) held its annual re:Invent conference in Las Vegas, announcing major updates across its AI and cloud services portfolio.

- The main news is the introduction of Amazon Nova, a new collection of foundation models integrated with Amazon Bedrock, supporting text, image, and video inputs to generate text output. According to Amazon, Nova excels in Retrieval-Augmented Generation (RAG), function calling, and agentic applications.

- AWS also unveiled the next generation of Amazon SageMaker with unified data and AI development environments, including SageMaker Catalog and SageMaker Lakehouse for simplified data management. SageMaker is a cloud-based service that helps you build, train, and deploy different machine learning models.

- AWS launched a ‘Buy with AWS’ button for cloud software partners, reducing marketplace fees to 3% to compete with Microsoft and Google.

- AWS announced Trainium2 and Trainium3 chips for AI model training, with Trainium3 expected to be four times more powerful than its predecessor. Trainium2 is currently used to train the next version of Claude.

- Amazon Bedrock received new safeguards against AI hallucinations, multi-agent collaboration features, and model distillation capabilities for creating smaller, more efficient models.

- Availability: Q1 2025 for Amazon Nova.

My take: Amazon claims Nova models are at least 75% less expensive than comparable models on Amazon Bedrock and offer the fastest performance in their respective intelligence classes. The new Nova AI models will be released in early 2025, so we will have to wait until then to see if they are also competitive on features and capabilities.

Read more:

- Amazon Nova, Amazon Bedrock innovations, Trainium3 chips, and more: 11 key announcements from AWS re:Invent 2024

- That’s a wrap on AWS re:Invent 2024 – YouTube

Kling AI Launches Virtual Try-On for Fashion Visualization

https://klingai.com/release-notes

The News:

- Kling AI just released a “virtual try-on tool” that allows users to digitally fit clothes on AI models and create visualization videos.

- The system supports multiple garment uploads and can generate videos showing how clothes move and fit on models.

- Users can also upload their own garment images against a white background for visualization.

- Availability: Now.

My take: This is definitely not the first virtual try-on tool released, but the quality of the results is better than what I have seen previously. For e-commerce this is probably the way forward, saving time and money by not having to shoot and process thousands of photos of models posing with the clothes.

Read more: