Last week Anthropic launched their Model Context Protocol (MCP), and Claude can now connect to practically any system and do basically anything you can imagine. This goes way beyond the basic read-only app integrations OpenAI recently introduced in ChatGPT for macOS: MCP is an open-source framework for integrating Claude with any service using local “plugins”. Currently MCP is limited in that it only runs locally on your computer, and you must explicitly allow Claude access to every service you have installed for every chat you create, but the possibilities are enormous.

Other news this week: Anthropic will switch from Nvidia to AWS Trainium chips for its largest models, Claude got support for custom writing styles, Cursor rolled out a new “Agent” feature, Alibaba’s Qwen team launched QwQ-32B-Preview, a new open-source AI reasoning model, Luma AI launched a major update to Dream Machine, and ElevenLabs launched GenFM, a podcast generator for their iPhone app!

THIS WEEK’S NEWS:

- Anthropic Launches Model Context Protocol (MCP)

- Anthropic Switches From Nvidia to AWS Trainium

- Anthropic Introduces Custom Styles for Claude

- Cursor Rolls Out Version 0.43 With AI Agent and Bug Finder

- OpenAI Adds Support for More Desktop Apps in macOS Client

- Andrew Ng Launches AIsuite – Unified AI Provider Framework

- Alibaba Launches QwQ-32B-Preview, a New Reasoning Model

- Luma AI Launches Major Update to Dream Machine

- Runway Introduces Frames – a New Image Generation Model

- ElevenLabs Launches GenFM Podcast Feature in iOS App

- Tesla Rolls Out Full Self-Driving 13 With Major Improvements

Anthropic Launches Model Context Protocol (MCP)

https://www.anthropic.com/news/model-context-protocol

The News:

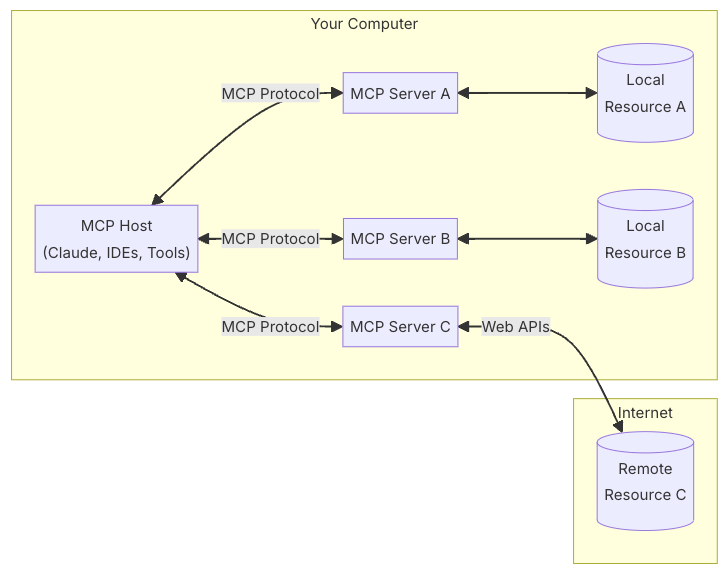

- Anthropic has released a ground-breaking new feature for accessing and controlling third-party services from Claude, called Model Context Protocol (MCP).

- Where the OpenAI app integration uses macOS accessibility to read data from a few select apps, Anthropic has chosen another direction, where developers create small “plugins” that expose any service to Claude!

- You can easily connect your own service to Claude using MCP, and Anthropic has published the source code for integrating with services like GitHub, Google Drive, Slack, Web Search using Brave, and the local file system.

- Currently MCP is only available for the Claude desktop app, so it is not something most users can use since it’s a bit complicated to install. I do however expect a “Claude MCP plugin manager” to be released, either from Anthropic or from a third-party developer (just a guess from my side but it makes sense).
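To make the “a bit complicated to install” part more concrete, here is a minimal sketch of what registering a local MCP server looks like. As far as I understand Anthropic's quickstart, the Claude desktop app reads a JSON config file with an `mcpServers` section, where each entry tells Claude which command to run to start a server. Treat the key names, the `@modelcontextprotocol/server-filesystem` package name, and the folder path as assumptions to verify against the current documentation. The helper `build_mcp_config` is my own name and exists only for this illustration.

```python
import json

# Hypothetical folder the filesystem server is allowed to read; use a real path.
ALLOWED_DIR = "/Users/yourname/Documents"


def build_mcp_config(allowed_dir: str) -> dict:
    """Build a config dict that registers one local MCP server (assumed layout)."""
    return {
        "mcpServers": {
            # "filesystem" is just a label chosen for this example.
            "filesystem": {
                # Assumption: the server is started through npx from an npm package.
                "command": "npx",
                "args": ["-y", "@modelcontextprotocol/server-filesystem", allowed_dir],
            }
        }
    }


# Serialize to JSON; this text would go into the desktop app's config file.
config_json = json.dumps(build_mcp_config(ALLOWED_DIR), indent=2)
print(config_json)
```

Each additional server would be one more entry under `mcpServers`, which is why a plugin manager that edits this file for you seems like a natural next step.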

What you might have missed (1): To give you a good overview of what MCP can do, check this video by All About AI. It shows examples using prompts like “Search BRAVE and find info about Anthropic’s MCP (model context protocol), write a summary, and save it on my system as mcp_info.md” and “I need some information about the development in the Bitcoin price the last 24h, search the web for this info and save the most important info to bitcoin.md”.

What you might have missed (2): Here’s a good collection of MCP plugins: Awesome MCP Servers @ GitHub. Examples include Kagi Search, Fetch Youtube subtitles and transcripts, PostgreSQL integration, Google Drive integration, Google Maps integration, and much more.

What you might have missed (3): In the movie Tron, the main antagonist is called Master Control Program (MCP), a rogue computer program that rules over the world inside ENCOM’s mainframe. The fact that Claude uses MCP to access the entire world takes on a different meaning depending on which MCP you refer to.

My take: We are quickly moving into a world where LLMs are no longer chatbots producing text from a prompt, but are instead used as central coordinators and agents integrating with the world around them. MCP is an important step in that direction. If you are curious how MCP works, check this video by Mervin Praison, which gives a very good overview of how the MCP plugin architecture works. My best guess for the future is that Anthropic will develop some kind of plugin system for the Claude desktop apps, where you can install and authorize plugins that run on your device without restrictions. I also expect OpenAI to do something similar with their upcoming release of Operator, while keeping their app integrations in ChatGPT for direct connections with user-installed client apps.

Read more:

- Anthropic’s Model Context Protocol: Add YOUR App to Claude AI! – YouTube

- GitHub – Model Context Protocol Servers

Anthropic Switches From Nvidia to AWS Trainium

https://www.aboutamazon.com/news/aws/amazon-invests-additional-4-billion-anthropic-ai

The News:

- On November 22 Amazon announced that they are investing $4 billion into Anthropic, the company behind Claude.

- As a part of the agreement, Anthropic will switch to AWS Trainium and Inferentia chips to train and deploy its largest foundation models.

- Anthropic is now naming AWS its primary training partner, in addition to continuing to be its primary cloud provider.

What you might have missed: Most AI companies have switched to Nvidia Hopper GPUs during 2024, such as OpenAI (H200 GPUs), xAI (100,000 H100 GPUs), and Meta (100,000 H100 GPUs). The H100 GPU was announced in March 2022, and started shipping in Q1 2023. AWS Trainium2 was announced in November 2023, and will start shipping in Q4 2024. Nvidia announced Blackwell, the follow-up to Hopper, in March 2024, but it has not yet started shipping due to production issues.

My take: AWS Trainium2 is specifically designed for large foundation models over 100 billion parameters, and has a different architecture than Nvidia Hopper. It’s going to be very interesting to see the results in 6 months when we have the latest Claude Opus trained on Trainium2, ChatGPT trained on Nvidia H200, and Grok 3 and Llama 4 trained on Nvidia H100. Will there be a significant difference, and can it be traced back to the training platform used?

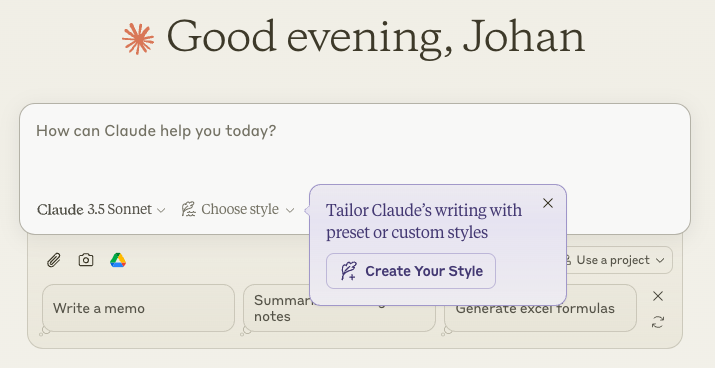

Anthropic Introduces Custom Styles for Claude

https://www.anthropic.com/news/styles

The News:

- Anthropic just launched a new feature for Claude that allows users to customize Claude’s output to match specific tones and writing structures.

- Claude offers three pre-defined styles: Formal, Concise and Explanatory, and users can also create their own custom styles by uploading sample content.

- One of the early companies using these styles is GitLab, saying “Claude’s ability to maintain a consistent voice while adapting to different contexts allows our team members to use styles for various use cases including writing business cases, updating user documentation, and creating and translating marketing materials.”

My take: I tried training Claude on my newsletters and asked it to write some news sections, and the resulting text still differs a lot from how I would write them. So in this first iteration I would rate it maybe 3/5, at least for my way of writing. But this is definitely the way forward for Large Language Models: once they are able to write in the same style as yourself, the number of use cases for them increases enormously.

Cursor Rolls Out Version 0.43 With AI Agent and Bug Finder

The News:

- Using the new Agent feature, version 0.43 of AI editor Cursor can now “pick its own context, use the terminal and complete entire tasks”.

- In practice this means Cursor can generate files, compile them, start servers, evaluate the output, and then adjust the source code until the output meets the requirements. All 100% automatically.

- Cursor also got a new Bug Finder feature that “analyzes code changes between your current branch and the main branch in your remote repository. For the best results, run it on feature branches before merging into main to catch potential issues early in development.”

- The update is slowly being rolled out, but you can download it directly using this link.

My take: Two great new features in this update make Cursor, my current favorite development tool, even better. The Bug Finder is however quite unstable and can be very expensive, but it gives a good indication of where things are heading in the next 6-12 months!

Read more:

- Cursor UPDATE: BEST Code Editor just got EVEN BETTER! (Autonomous Coding, AI Agents, Etc!) – YouTube

- The future of AI agents | Cursor Team and Lex Fridman – YouTube

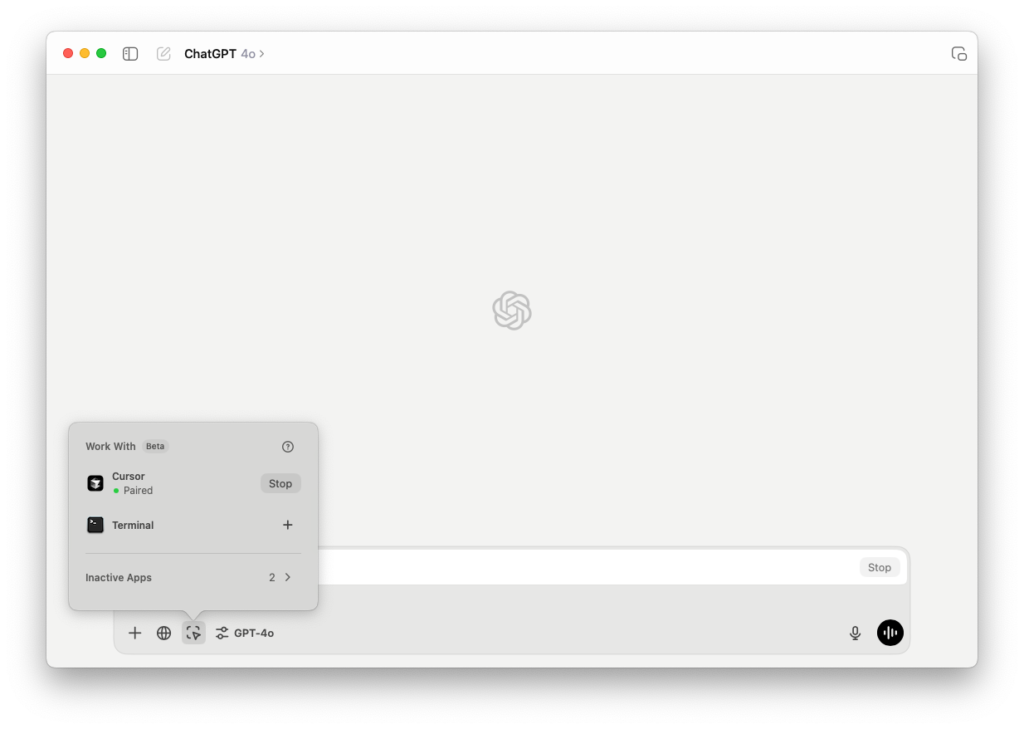

OpenAI Adds Support for More Desktop Apps in macOS Client

https://www.linkedin.com/feed/update/urn:li:activity:7267302436501950468

The News:

- OpenAI has really ramped up their native app development, and last week they added support for the following apps in ChatGPT Desktop for macOS:

- VS Code forks: Code Insiders, VSCodium, Cursor, Windsurf

- JetBrains: Android Studio, IntelliJ, PyCharm, WebStorm, PHPStorm, CLion, Rider, RubyMine, AppCode, GoLand, DataGrip

- Panic: Nova & Prompt

- Bare Bones: BBEdit

My take: Justin Rushing, the main macOS developer at OpenAI, has previously worked at Apple and Slack for over 10 years as a Swift developer. And this shows: the macOS client of ChatGPT is native, written in Swift, and is a joy to use. And being native means that ChatGPT can do things that Claude cannot, since Anthropic just wrapped their entire website inside an Electron framework. I really do hope other companies take note of this, and I would love to see more companies focusing on native app experiences going forward.

Read more:

- Top feedback was to make ChatGPT work with more apps. We’ve got you covered!

- Daring Fireball: Anthropic’s Claude AI Chatbot Now Has a Mac App, But It’s an Electron Turd

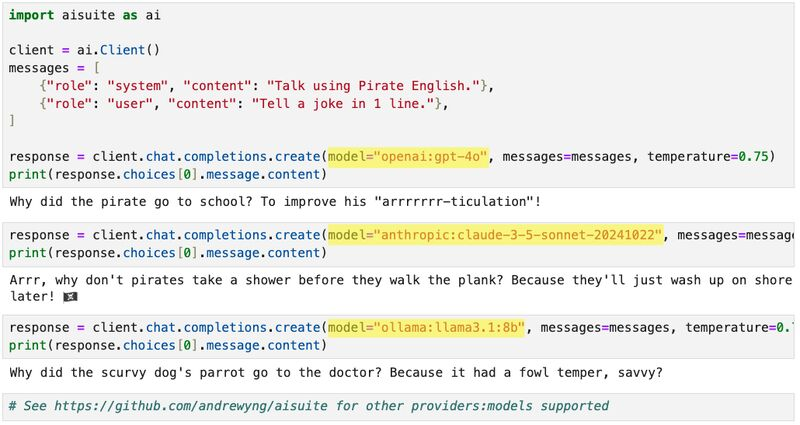

Andrew Ng Launches AIsuite – Unified AI Provider Framework

https://github.com/andrewyng/aisuite

The News:

- Andrew Ng (Professor of Computer Science at Stanford) with colleagues just launched AIsuite, a framework for developers to use large language models from multiple providers.

- AIsuite lets you pick a “provider:model” just by changing one string, like openai:gpt-4o, anthropic:claude-3-5-sonnet-20241022, ollama:llama3.1:8b, etc.

- AIsuite is released as open source under the MIT License and can be installed with `pip install aisuite`.
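To illustrate the “provider:model” idea, here is a small sketch of how such an identifier can be split into its two parts. This is not AIsuite's actual implementation, just my own illustration. The names `ModelRef` and `parse_model_id` are made up for the example. The one detail worth noticing is that the split should happen on the first colon only, since model names like `llama3.1:8b` contain a colon themselves.

```python
from typing import NamedTuple


class ModelRef(NamedTuple):
    """A provider name plus the provider-specific model name."""
    provider: str
    model: str


def parse_model_id(model_id: str) -> ModelRef:
    """Split 'provider:model' on the first colon only (illustrative helper)."""
    provider, sep, model = model_id.partition(":")
    if not sep or not provider or not model:
        raise ValueError(f"expected 'provider:model', got {model_id!r}")
    return ModelRef(provider, model)


# The three identifiers mentioned above, split into provider and model.
for model_id in [
    "openai:gpt-4o",
    "anthropic:claude-3-5-sonnet-20241022",
    "ollama:llama3.1:8b",
]:
    ref = parse_model_id(model_id)
    print(f"{ref.provider} -> {ref.model}")
```

With a scheme like this, switching provider in an app really is a one-string change, as long as the rest of the call stays the same across providers.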

My take: If you are developing apps with Large Language Models in Python you should definitely check this one out. Highly recommended!

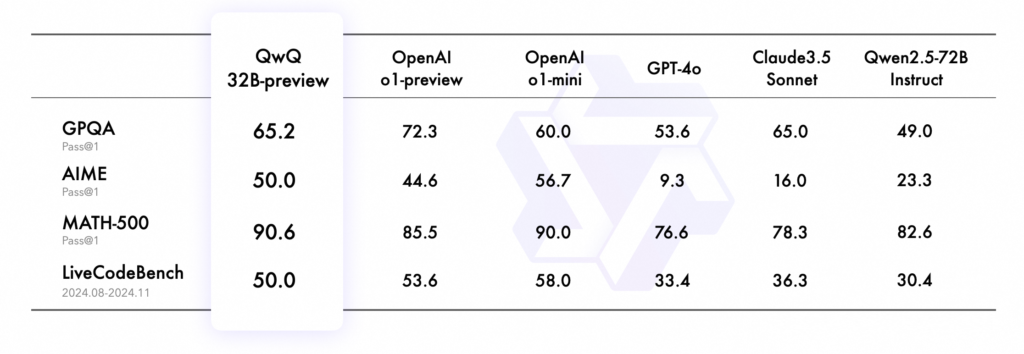

Alibaba Launches QwQ-32B-Preview, a New Reasoning Model

https://qwenlm.github.io/blog/qwq-32b-preview

The News:

- Alibaba’s Qwen team just released QwQ-32B-Preview, a new open-source AI reasoning model that can reason step-by-step and directly competes with OpenAI’s o1 “preview” series across several benchmarks.

- QwQ features a 32K context window, and it outperforms o1-mini and competes with o1-preview on key math and reasoning benchmarks.

- QwQ uses “deep introspection,” going through problems step-by-step and questioning and examining its own answers to reason to a solution.

- The model is still in preview, and while it performs well on several benchmarks it has multiple issues, such as (1) getting stuck in reasoning loops, (2) unexpectedly switching between languages, and (3) struggling with common sense.

- QwQ-32B-Preview is released under the Apache 2.0 license, meaning you can use it however you like, including for commercial purposes!

My take: Chinese companies are definitely stepping up the open-source game, and with reasoning models such as DeepSeek R1-Lite-Preview and QwQ they are closing the distance to current state-of-the-art closed models. However, while that distance might look fairly small according to a few select benchmarks, closing those last few percentage points is probably much harder and more complex than the numbers suggest.

Read more:

- Try it out at QwQ-32B-Preview – a Hugging Face Space by Qwen

Luma AI Launches Major Update to Dream Machine

https://twitter.com/LumaLabsAI/status/1861054912790139329

The News:

- Luma AI just released a major upgrade to Dream Machine, including a new image generation model called Photon and new creative controls.

- Photon is a “next generation image model build from the ground-up for visual thinking and fast interaction.” According to Luma AI, Photon is up to 8x faster than competing models.

- Dream Machine can now generate consistent characters from a single reference image and maintain them across both images and videos.

- Dream Machine also includes new Visual Camera Controls, style transfer, and Brainstorm for creative exploration.

My take: My experience with Dream Machine was previously quite mixed. I typically send in my favorite prompt “A middle-aged man running in a Swedish forest during the golden hour.” and I usually get back some weird floating zombie. But this new version is completely different! Just check the image above, and if you have time check the video below. This is actually usable, and wow what an improvement in just 10 weeks (I last wrote about Dream Machine in Tech Insights Week 28)! Definitely check this one out if you have the need for AI-generated video!

Runway Introduces Frames – a New Image Generation Model

https://runwayml.com/research/introducing-frames

The News:

- Runway keeps rolling out amazing features, and last week they launched a new image generation model called Frames.

- According to Runway, Frames “excels at maintaining stylistic consistency while allowing for broad creative exploration. Frames can establish a specific look for your project and reliably generate variations that stay true to your aesthetic.”

- Frames is gradually being rolled out inside Gen-3 Alpha and the Runway API.

My take: If you have a minute, go ahead and check the examples Runway posted on their web site. Similarly to Flux RAW mode, the rendering looks superb, but the AI model still has issues with hands and fingers (just look at the image above). I think the main limitation of current models is the limited number of parameters they use, and upcoming models trained on next-generation hardware should improve things considerably.

ElevenLabs Launches GenFM Podcast Feature in iOS App

https://elevenlabs.io/blog/genfm-on-elevenreader

The News:

- If you use an iPhone, you can now use the new GenFM feature in the ElevenLabs mobile app to generate a podcast from PDFs, articles, ebooks, docs or imported text.

- The podcasts are structured similarly to those from Google’s NotebookLM, but you can customize the voices and also render the audio in 32 different languages.

- The feature is only available in the iOS app, and the podcasts cannot be exported as audio files.

My take: After listening to some example podcasts generated by GenFM, I find that the dynamics are not up to par with NotebookLM. It’s a good first try, and will definitely get better over time. Definitely worth keeping an eye out for once the feature becomes available on the web with full export capabilities.

Tesla Rolls Out Full Self-Driving 13 With Major Improvements

https://www.reddit.com/r/TeslaLounge/comments/1h3oz3f/fsd_13_is_here/

The News:

- Yesterday Tesla started to roll out their latest Full Self-Driving (FSD) version 13.2 to owners, featuring up to six times the distance between necessary human interventions compared to FSD v12.5.4.

- FSD 13.2 also supports reverse mode, and can both park and leave parking spots automatically.

- FSD 13.2 can be started while the car is parked by just pressing a button on the display.

My take: You can see a full test drive of FSD 13.2 in the video below. It still has issues parking the car and keeping it within a parking slot, but overall it looks quite smooth.
