Last week OpenAI launched a major update to ChatGPT. If you have a minute to spare, listen to the Eminem-style rap song below: the lyrics were written with the latest “Creative Update” of ChatGPT and the song was rendered with the new Suno v4. This is a whole new level of content, and we are rapidly approaching the point where AI is good enough for all text writing and all song production. How many people do you know who could have written lyrics like these?

Other top news this week: Elon Musk is now ranked the #1 Diablo IV player worldwide after spending close to 100 hours per week playing the game since October, Black Forest Labs (the company behind Flux) just launched new AI image editing tools, Mistral launched web search, canvas, document analysis and new models, and Runway launched a groundbreaking new Expand Video feature!

Song: The Quantum Cipher (GPT-4o, Suno v4)

https://x.com/kyleshannon/status/1859355131738734824

THIS WEEK’S NEWS:

- OpenAI Launches Major Creativity Update for ChatGPT

- Google and OpenAI Keep Fighting for #1

- Runway Launches Expand Video Feature

- Suno Launches v4

- SAMURAI: Zero-Shot Visual Tracking with Motion-Aware Memory

- Elon Musk is Ranked #1 Diablo IV Player Worldwide

- Elon Musk Encourages Users to Upload Health Data to Grok

- Black Forest Labs Launches AI Image Editing Suite FLUX.1 Tools

- Mistral Launches Web Search, Canvas, Document Analysis and New Models

- Anthropic Integrates Google Docs into Claude.ai

- Chinese Startup DeepSeek Launches R1-Lite-Preview

- Perplexity Launches Shopping Assistant

OpenAI Launches Major Creativity Update for ChatGPT

The News:

- OpenAI just updated GPT-4o, and the new version (2024-11-20) is now remarkably good at creative writing.

- As an example, Kyle Shannon, co-founder of storyvine.com, has been testing the same prompt for two years: “write an Eminem style cipher about quantum mechanics”. ChatGPT has consistently produced the best results, but still “no LLM has ever been able to capture the sophisticated internal rhyming structures of Eminem”. Until now.

What you might have missed: Here is Kyle Shannon’s full recipe for generating the lyrics of The Quantum Cipher, in his own words:

- I asked the new “creative” ChatGPT-4o to write an Eminem-style rap about quantum mechanics. It was better than anything I had seen before, but it still had an unsophisticated rhyme scheme.

- I asked it to tell me what makes Eminem’s writing so incredible and to give examples. It gave me 10 points, like “internal rhyming schemes,” etc.

- I asked it to rewrite the lyrics using those ideas. It gave me what I shared. It was essentially the first output I got!

- I asked ChatGPT to write a prompt I could share with a music producer for Eminem-style music, then had it shorten that prompt, and it gave me a version of this: “Detroit-style Female rap, with rapid, machine-gun-like delivery rap with intricate multisyllabic rhymes, balanced by emotional depth and a driving beat that amplifies the intensity.”

- I added in the [SINGLE TIME] & [DOUBLE TIME] not thinking they would work, but they did. BOOM.
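The lyric-writing part of this recipe is essentially a prompt chain: each request builds on the previous answer in the same conversation. The minimal sketch below models it as a growing message history. `ask` is a hypothetical placeholder for whatever chat client you use, the prompts paraphrase the steps above rather than quoting Kyle’s exact wording, and `tag_sections` (also hypothetical) shows one way to put delivery tags like [SINGLE TIME] and [DOUBLE TIME] in front of each verse before pasting the lyrics into Suno.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

# Hypothetical client signature: takes the full chat history, returns the reply.
# In practice this would wrap a real chat API; it is left abstract here.
AskFn = Callable[[List[Message]], str]


def refine_lyrics(ask: AskFn, topic: str, artist: str) -> str:
    """Run the draft -> analyze -> rewrite steps as one multi-turn conversation."""
    history: List[Message] = []

    def turn(prompt: str) -> str:
        # Every exchange stays in the history, so later turns build on earlier ones.
        history.append({"role": "user", "content": prompt})
        reply = ask(history)
        history.append({"role": "assistant", "content": reply})
        return reply

    turn(f"Write a rap in the style of {artist} about {topic}.")          # step 1: first draft
    turn(f"What makes {artist}'s writing so incredible? Give examples.")  # step 2: analysis
    return turn("Rewrite the lyrics using those ideas.")                  # step 3: rewrite


def tag_sections(tags: List[str], verses: List[str]) -> str:
    """Prefix each verse with a bracketed delivery tag such as [DOUBLE TIME]."""
    if len(tags) != len(verses):
        raise ValueError("need exactly one tag per verse")
    return "\n\n".join(f"[{tag}]\n{verse}" for tag, verse in zip(tags, verses))


if __name__ == "__main__":
    # Offline stand-in for a real model: reports how many messages it was shown.
    fake_ask = lambda history: f"reply after {len(history)} messages"
    print(refine_lyrics(fake_ask, "quantum mechanics", "Eminem"))  # reply after 5 messages
```

Keeping the whole history in every call is what lets step 3 (“using those ideas”) refer back to the analysis from step 2.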

My take: If you have a minute, check out the lyrics generated by ChatGPT-4o and listen to the final song generated by Suno v4. This is truly next-level stuff! I have always felt that the text produced by ChatGPT has been awkward at best, but this latest update is so much better at writing that I finally see myself using ChatGPT for more things than I would have considered before. If you are already using ChatGPT on a daily basis and wondered what happened last week when it suddenly got much better at writing text and songs, now you know.

Read more:

- Kyle Shannon on X: “Hey @OpenAI your @ChatGPTapp 4o ‘Creative Writing’ upgrade today is INSANE!”

- OpenAI just gave ChatGPT a major ‘creativity’ upgrade — here’s what’s new | Tom’s Guide

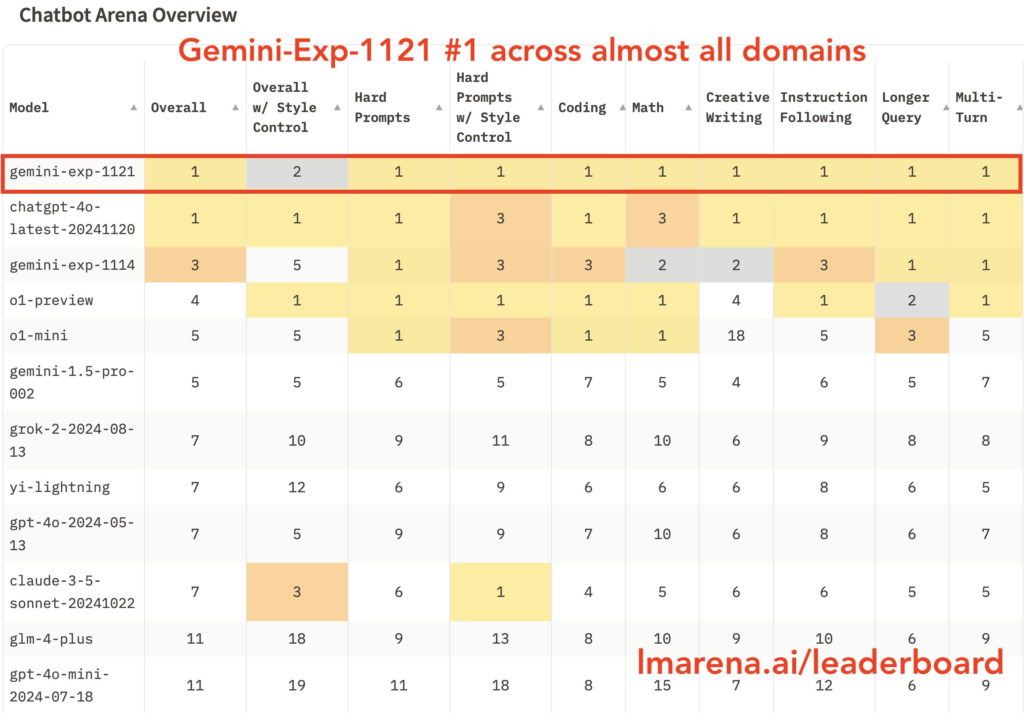

Google and OpenAI Keep Fighting for #1

https://twitter.com/lmarena_ai/status/1859673146837827623

The News:

- In just one week, both Google and OpenAI have significantly improved the performance of their models.

- It began last week, when Google launched Gemini-1114, which quickly took the #1 position. Just a few days later OpenAI countered with its updated GPT-4o (2024-11-20), reclaiming the top spot, and now Google is back with Gemini-1121, which again takes the top position in almost all categories.

My take: Benchmarks are one thing, and we all know they seldom translate well to real-world usage. I would very much like to try the latest Gemini-1121 in Cursor, but Google hasn’t released any of the newer models in its API yet. Until it does, I will happily continue using Claude, which apparently now ranks only #4 for coding according to these benchmarks, something I don’t agree with at all. This race to the top is still interesting to follow, and the improvements over just the last few weeks have been remarkable.

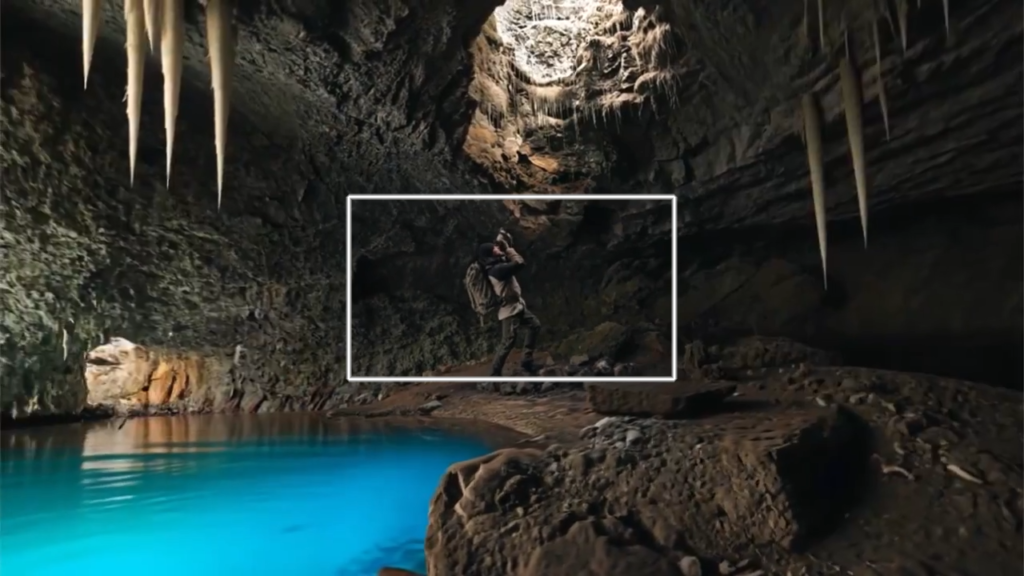

Runway Launches Expand Video Feature

The News:

- Runway (the AI video company that recently partnered with Lionsgate) just launched a groundbreaking new feature called “Expand Video”. Just upload any video, scale it down inside a larger frame, and Runway Gen-3 will generate new video around your clip. Features include:

- Dynamic Aspect Ratio Transformation: Expand Video allows users to reframe existing videos into different formats without cropping. Instead of removing parts of the original content, Expand Video generates additional visuals beyond the video’s edges to fit the desired aspect ratio.

- Intelligent Output Orientation: Automatically determines the output orientation based on the input video’s dimensions. For instance, a landscape input results in a vertical output, and vice versa. Square videos offer the flexibility to choose between vertical and landscape outputs.

- Prompt Integration: While Expand Video works without additional input, users can enhance control by incorporating text or image prompts. Providing a guiding image, especially an outpainted version of the video’s first frame, ensures visual consistency in the expanded areas.

- Expand Video is rolling out gradually to all users of Runway Gen-3.
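To make the orientation rule and the “scale it down and generate around it” idea concrete, here is a minimal geometry sketch. It is not Runway’s API or implementation: the 9:16 and 16:9 targets, the 1280-pixel long side, centering the clip, and the names `plan_expansion` and `ExpandPlan` are all assumptions for illustration.

```python
from typing import NamedTuple, Tuple


class ExpandPlan(NamedTuple):
    canvas: Tuple[int, int]  # (width, height) of the expanded frame
    clip: Tuple[int, int]    # (width, height) of the scaled-down original clip
    offset: Tuple[int, int]  # top-left corner of the clip inside the canvas


def plan_expansion(width: int, height: int, square_choice: str = "vertical",
                   long_side: int = 1280) -> ExpandPlan:
    """Pick the output orientation and place the original clip inside it.

    Assumed for illustration: 9:16 / 16:9 targets, a fixed long side and a
    centered clip. Everything outside the clip is the area to be generated.
    """
    if width > height:              # landscape in -> vertical out
        orientation = "vertical"
    elif height > width:            # vertical in -> landscape out
        orientation = "landscape"
    elif square_choice in ("vertical", "landscape"):
        orientation = square_choice  # square in -> user's choice
    else:
        raise ValueError("square_choice must be 'vertical' or 'landscape'")

    short_side = round(long_side * 9 / 16)
    if orientation == "vertical":
        out_w, out_h = short_side, long_side
    else:
        out_w, out_h = long_side, short_side

    # Scale uniformly so the whole clip fits inside the canvas: nothing is cropped.
    scale = min(out_w / width, out_h / height)
    clip_w, clip_h = round(width * scale), round(height * scale)
    offset = ((out_w - clip_w) // 2, (out_h - clip_h) // 2)
    return ExpandPlan((out_w, out_h), (clip_w, clip_h), offset)


if __name__ == "__main__":
    # A 1920x1080 landscape clip becomes a 720x1280 vertical frame with the clip
    # shrunk to 720x405 and centered; the bands above and below it are generated.
    print(plan_expansion(1920, 1080))
```

Fitting rather than filling the frame is the key design choice: the original pixels are always kept, and the model only has to invent the remaining border.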

My take: Wow, what a great feature! For film production this will be a killer feature and should significantly cut down production times. I can see it becoming a hit especially when converting media between landscape and portrait formats where the source video was shot too “tight”, without enough content to fill the new frame.

Read more:

- Runway | Introducing, Expand Video. This new feature allows you to transform videos into new aspect ratios by generating new areas | Instagram

- Creating with Expand Video on Gen-3 Alpha Turbo – Runway

Suno Launches v4

The News:

- Suno, the leading AI music company, just launched v4 – a major update “that takes music creation to the next level”. v4 delivers cleaner audio, sharper lyrics, and more dynamic song structures. v4 also comes with two new features:

- Remaster. Upgrade the audio of tracks you made with older models to v4 quality.

- Lyrics by ReMi. A new lyrics model designed to write more creative lyrics.

My take: If you haven’t yet checked out the song about quantum mechanics I wrote about above, I really recommend you do that now. Suno v4 is starting to sound pretty good, and at the current pace of improvement they should be able to generate songs almost indistinguishable from professionally produced songs within 1-2 years. Unless they go bankrupt before then due to all the record labels suing them for $150,000 per song.


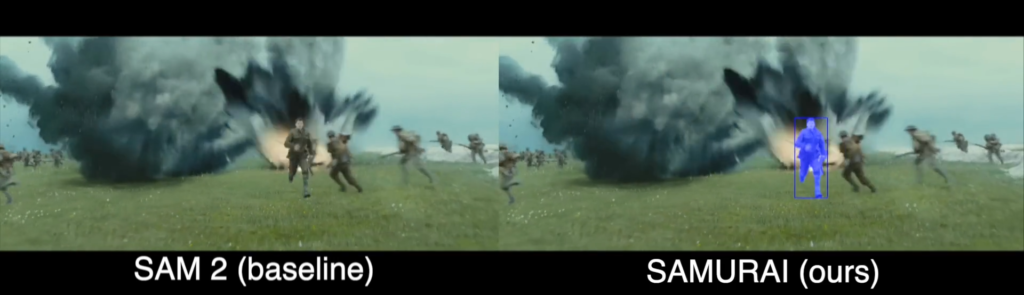

SAMURAI: Zero-Shot Visual Tracking with Motion-Aware Memory

https://yangchris11.github.io/samurai

The News:

- Researchers from the University of Washington just announced SAMURAI: an enhanced adaptation of Meta’s Segment Anything Model 2 (SAM 2), specifically designed for visual object tracking.

- SAMURAI integrates temporal motion cues through a motion-aware memory selection mechanism, enabling precise object motion prediction and mask refinement without retraining or fine-tuning.

- SAMURAI runs in real time and shows strong zero-shot performance across diverse benchmark datasets.
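The two ideas in the description, using predicted motion to choose among SAM 2’s candidate masks and being selective about which past frames enter memory, can be illustrated with a toy sketch. A simple constant-velocity predictor stands in for the paper’s own motion model, and the weight `alpha`, the score thresholds, the six-frame memory limit and all function names are assumptions for illustration, not SAMURAI’s code.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def predict_box(prev: Box, curr: Box) -> Box:
    """Constant-velocity guess for the next frame: curr + (curr - prev)."""
    x1, y1, x2, y2 = (2 * c - p for p, c in zip(prev, curr))
    return (x1, y1, x2, y2)


def pick_mask(boxes: Sequence[Box], mask_scores: Sequence[float],
              prev: Box, curr: Box, alpha: float = 0.5) -> int:
    """Index of the candidate that best balances motion agreement and model confidence."""
    predicted = predict_box(prev, curr)
    combined = [alpha * iou(b, predicted) + (1 - alpha) * s
                for b, s in zip(boxes, mask_scores)]
    return max(range(len(combined)), key=combined.__getitem__)


def select_memory(history: Sequence[Tuple[int, float, float]],
                  min_mask: float = 0.5, min_motion: float = 0.5,
                  max_frames: int = 6) -> List[int]:
    """Keep only recent frames whose mask and motion scores both pass a threshold.

    `history` holds (frame_index, mask_score, motion_score) in chronological order.
    """
    good = [idx for idx, mask, motion in history
            if mask >= min_mask and motion >= min_motion]
    return good[-max_frames:] if max_frames > 0 else []


if __name__ == "__main__":
    # The object moved 10 px right last frame, so the lower-confidence box that
    # continues that motion beats a higher-confidence box at the old position.
    print(pick_mask([(20, 0, 30, 10), (0, 0, 10, 10)], [0.6, 0.9],
                    prev=(0, 0, 10, 10), curr=(10, 0, 20, 10)))  # 0
```

This is why no retraining is needed: both steps only re-rank and filter outputs that the frozen SAM 2 model already produces.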

My take: Here’s a good example of the true power of open-sourcing software. It has now been over four months since Meta released its Segment Anything Model 2, and because Meta released it as open source, we now have even better models such as SAMURAI. Everyone benefits from this, and I sincerely hope Meta continues to release all its AI innovations as open source, and that other companies follow suit.

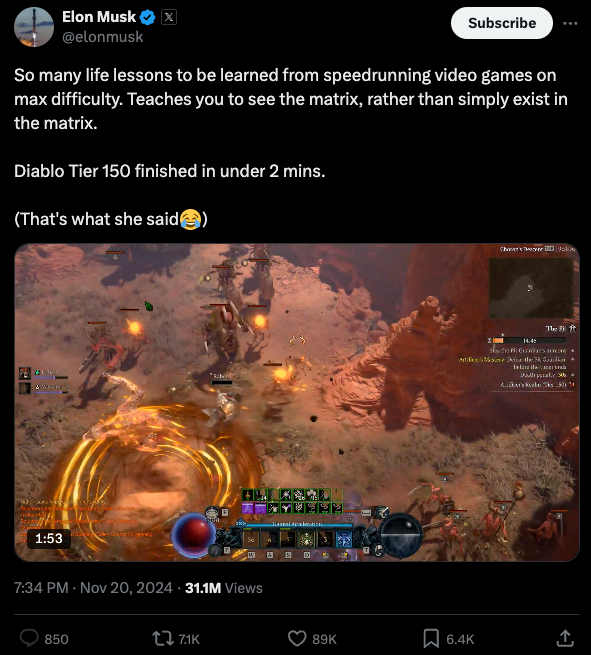

Elon Musk is Ranked #1 Diablo IV Player Worldwide

The News:

- Elon Musk just reached the number one position in Diablo IV’s global rankings by completing the game’s most challenging dungeon, the Tier 150 Artificer’s Pit, in under two minutes.

- Elon Musk has been streaming Diablo IV gameplay live since the recent expansion “Vessel of Hatred” launched on October 7.

- Elon Musk has also been playing together with top Diablo YouTuber Rob2628 in several videos.

My take: Top Diablo IV players spend 8-10 hours per day playing the game, and judging by how far Elon Musk has progressed, he must have spent at least 10-15 hours per day playing during the first weeks. In fact, when SpaceX caught the Starship booster mid-air on October 13, Elon Musk had spent the previous week playing Diablo IV close to 15 hours per day. So if you wondered why he looked a bit sleepy on the big day, now you know. Elon himself says playing computer games calms his mind, but aiming for the #1 spot globally by playing around 100 hours per week? That’s a whole different league.


Elon Musk Encourages Users to Upload Health Data to Grok

https://twitter.com/elonmusk/status/1851206080564773344

The News:

- Over the past few weeks, users on X have been submitting X-rays, MRIs, CT scans and other medical images to Grok, the platform’s artificial intelligence chatbot, asking for diagnoses.

- The reason: Elon Musk, X’s owner, suggested it in a post: “Try submitting x-ray, PET, MRI or other medical images to Grok for analysis. This is still early stage, but it is already quite accurate and will become extremely good.”

- In its privacy policy, X says it will not sell user data to a third party but it does share it with “related companies.”

My take: Add this to your list of things that will probably never happen in the EU. Will the LLMs of the future be able to make proper medical diagnoses, better than most doctors? I believe so. Recent studies even show that ChatGPT outperformed human physicians when assessing medical case histories. Will it benefit from having access to huge amounts of medical images? Absolutely. In the EU, however, there are three legal frameworks to consider for these kinds of systems: the General Data Protection Regulation (GDPR), the Artificial Intelligence Act (AI Act) and the European Health Data Space (EHDS). To train an AI on medical data you need to comply with all three, and you must also obtain a digital CE marking for your software, since the system would be classified as “high risk”. This entire process is complex, costly and time-consuming, compared to Elon Musk simply encouraging people to upload their X-rays on X.

Read more:

- Elon Musk Asked People to Upload Their Health Data. X Users Obliged – The New York Times

- X is training Grok AI on your data—here’s how to stop it – Ars Technica

- Elon Musk’s X is changing its privacy policy to allow third parties to train AI on your posts | TechCrunch

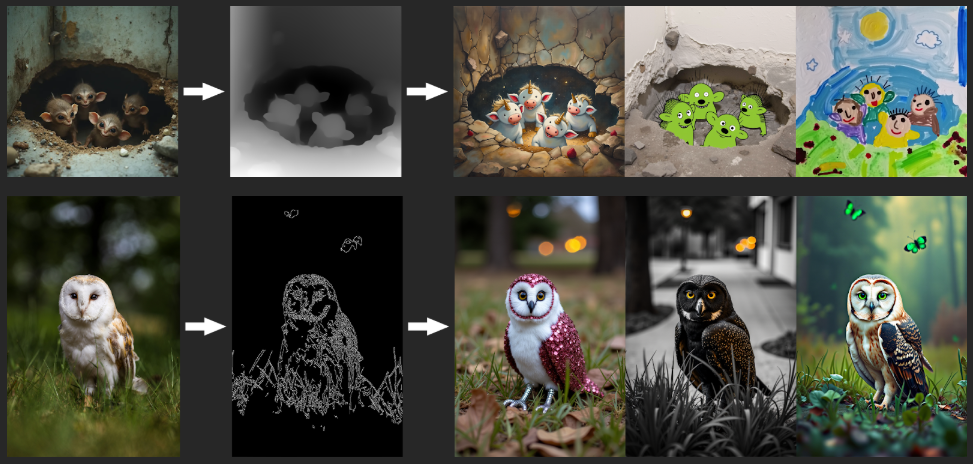

Black Forest Labs Launches AI Image Editing Suite FLUX.1 Tools

https://blackforestlabs.ai/flux-1-tools/

The News:

- Black Forest Labs just released FLUX.1 Tools, four new AI image manipulation features that allow for precise editing and control of AI-generated images using the company’s FLUX models.

- FILL offers advanced inpainting and outpainting capabilities, allowing editing and expansion of images based on text descriptions and binary masks.

- DEPTH enables image generation by analyzing the 3D structure (depth) of objects in an input image, allowing consistent perspective and lighting effects.

- CANNY focuses on the edges and outlines of input images, transforming sketches or shapes into visuals based on text prompts.

- REDUX blends input images with text descriptions to recreate or restyle visuals, allowing for artistic reinterpretations or variations.

- These four tools are currently only available through the API, which means you have to create masks etc. in another tool before sending them to Flux. I predict, however, that we will see a complete web-based editor built on FLUX.1 Tools fairly soon.
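For outpainting, the mask that FILL needs is easy to build yourself: pad the image onto a larger canvas and mark the new border as the area to generate. Below is a minimal pure-Python sketch. The function name is hypothetical, and the convention that non-zero (white) pixels mark the region to fill is an assumption common to many inpainting tools, not a confirmed detail of the FLUX.1 Fill API. Check the API documentation for the exact encoding it expects, and save the mask as a grayscale image with an imaging library before sending it.

```python
from typing import List


def outpaint_mask(img_w: int, img_h: int, left: int, top: int,
                  right: int, bottom: int, fill: int = 255) -> List[List[int]]:
    """Mask for an image padded onto a larger canvas before outpainting.

    `fill` marks pixels to generate (the new border) and 0 marks original
    pixels to keep. The white-means-generate convention is an assumption.
    """
    canvas_w, canvas_h = img_w + left + right, img_h + top + bottom
    mask = [[fill] * canvas_w for _ in range(canvas_h)]
    for y in range(top, top + img_h):
        mask[y][left:left + img_w] = [0] * img_w  # protect the original image
    return mask


if __name__ == "__main__":
    # A 2x1 image with one pixel of padding on the left, top and right.
    for row in outpaint_mask(2, 1, left=1, top=1, right=1, bottom=0, fill=1):
        print(row)
    # [1, 1, 1, 1]
    # [1, 0, 0, 1]
```

The same idea covers inpainting: start from an all-zero mask and set `fill` only inside the region you want replaced.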

My take: Flux is my absolute favorite AI image generator, and these tools are about to make it even better. If you have tried AI image generation in the past but haven’t tried Flux, I really recommend giving it a try; it’s like moving from ChatGPT to Claude for programming. If you want to try out the new tools, you can test them at fal.ai.


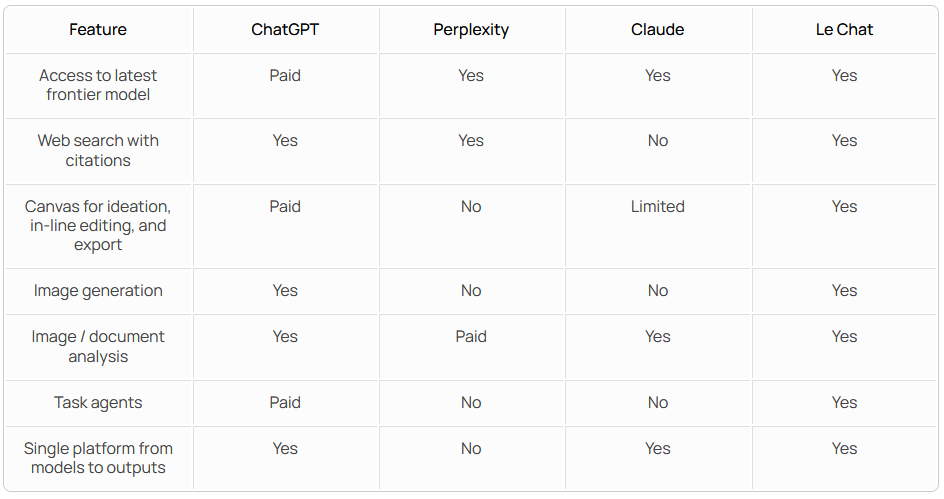

Mistral Launches Web Search, Canvas, Document Analysis and New Models

https://mistral.ai/news/mistral-chat

The News:

- European company Mistral just launched an amazing set of new features to compete with OpenAI and Anthropic, built around its online chat assistant “Le Chat”.

- Web Search. Mistral’s Le Chat can now search the web with citations, similarly to ChatGPT and Perplexity.

- Canvas. Le Chat now offers a Canvas feature, similar to Claude’s Artifacts and ChatGPT’s feature of the same name. This includes inline code and writing editing, versioning, diffs, and much more.

- Document Analysis and OCR. Le Chat is now able to process large, complex PDF documents and images for analysis and summarization, thanks to its new multimodal model Pixtral Large.

- Image Generation. Mistral has partnered with Black Forest Labs to bring image generation to Le Chat with Flux Pro.

- Mistral released the weights of both Pixtral Large and Mistral Large 2.1 (new version). And as usual with Mistral, the license does not allow you to use these open-weight models commercially.

My take: Great progress by Mistral! But while all these features are great additions, (1) Le Chat does not match ChatGPT for usability (OpenAI’s native macOS app is fantastic), (2) Le Chat does not match Claude for code generation, (3) Le Chat does not match o1 for reasoning, and (4) Mistral does not match Meta’s Llama 3 for open-source licensing terms (you can use Llama 3 commercially). And (5), I don’t really like the name “Le Chat”; I think it’s even worse than “Bing”. You don’t need to be the best at everything to succeed in the LLM arena, but I do think you need to be the best at something. So it will be interesting to see whether Mistral manages to up its game: once the final o1 is out, together with Llama 4 and Grok 3, it will be much more difficult to compete on features alone.

Anthropic Integrates Google Docs into Claude.ai

https://twitter.com/alexalbert__/status/1859664138072621228

The News:

- Anthropic just added support for Google Docs in Claude.ai, so you can easily paste a link or select from your recent documents to add them to your chats and projects.

- This makes things much easier for use cases where you always include a reference document in each chat, such as when you want Claude to respond in a certain way and keep a certain structure.

My take: I have several documents with template structures I use for output generation, and this makes my life much easier, since I no longer have to drag a document into the chat every time I want to use a special structure.

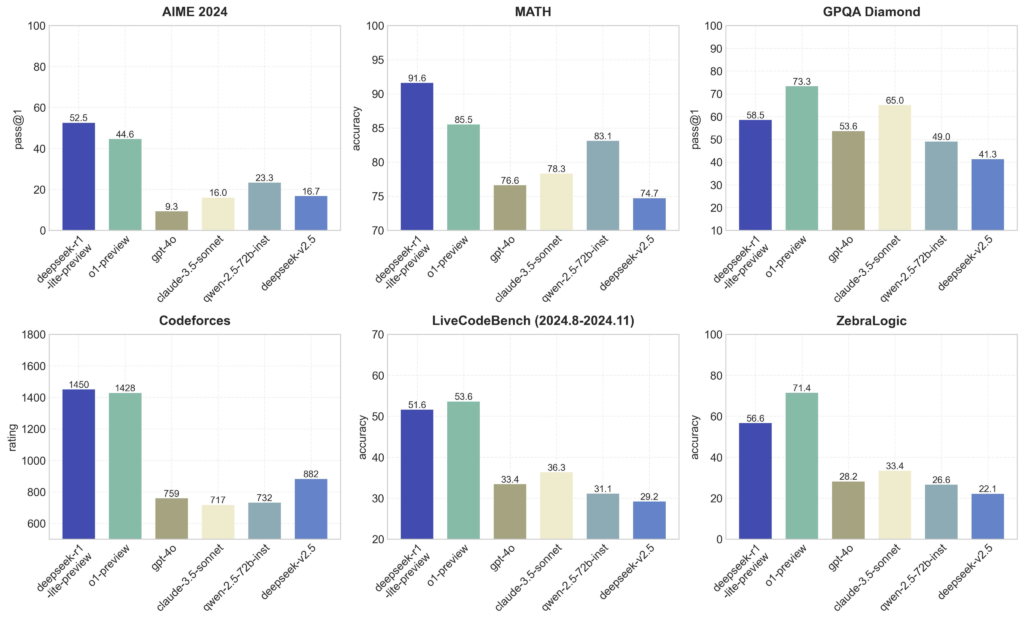

Chinese Startup DeepSeek Launches R1-Lite-Preview

https://api-docs.deepseek.com/news/news1120

The News:

- Chinese startup DeepSeek (深度求索) just released R1-Lite-Preview, a reasoning model that it claims matches the performance of OpenAI’s o1-preview on key math and coding benchmarks.

- The model spends more time considering questions to improve accuracy, potentially taking tens of seconds to respond depending on the complexity of the task.

- DeepSeek is making a preview version of the model available for a limited number of queries, but plans to release an open-source version soon.

My take: I only mention this because of all the headlines claiming that R1 beats o1. Right now the only way to access R1 is through a very limited chat interface; there is no API. And beating the current o1-preview is, in my opinion, not worth that much. According to Sam Altman: “o1-preview is deeply flawed, but when o1 comes out, it will feel like a major leap forward.” This matches my experience well. For writing new code I find Claude much better, but for code review and refactoring, o1-preview is much better. I’m willing to bet that the final version of o1 will be better at all these things, and matching the current o1-preview on a few limited benchmarks won’t be worth much in just a few days.

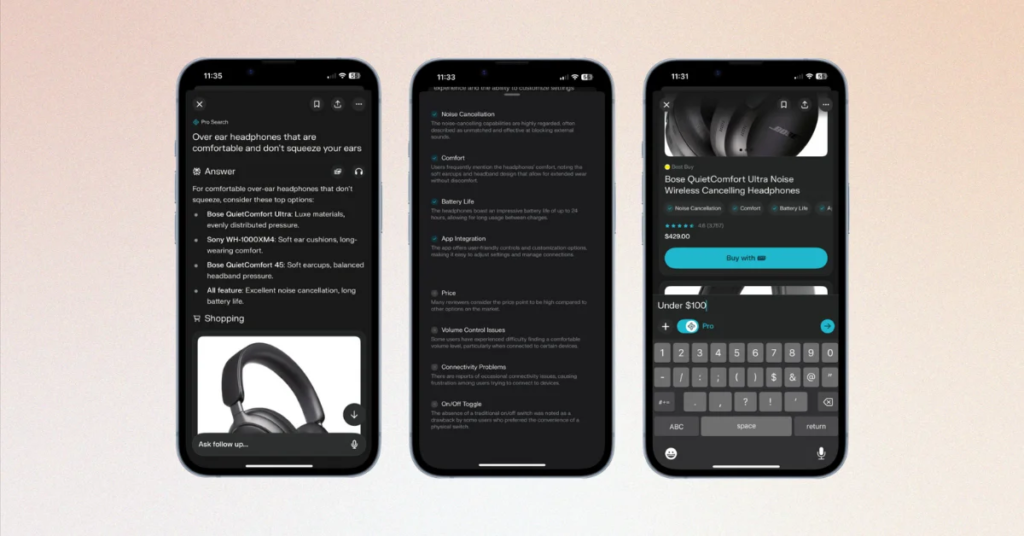

Perplexity Launches Shopping Assistant

https://www.perplexity.ai/shopping

The News:

- Perplexity just launched “Perplexity Shopping” with product recommendations and payments:

- One-Click Checkout “Buy with Pro”. Users can now buy products directly within the Perplexity web and mobile apps, using their saved billing and shipping information.

- “Snap to Shop” Visual Search Tool. The new “Snap to Shop” feature allows users to upload photos of items so Perplexity can identify and suggest similar products available for purchase.

- Perplexity CEO Aravind Srinivas says “he doesn’t fully understand how his company’s AI system steers shoppers to certain products”. However, retailers that provide data to Perplexity as part of its new free merchant program will get “Increased chances of being a ‘recommended product’”.

My take: You can probably see where this is going. It was just over a week ago that Perplexity started showing ads in its free tier, and now it wants merchants to register in order for their products to rank higher in recommendations. Perplexity clearly sees itself as the new “hub” for users worldwide, the place you go for all your news, information, and shopping. And if companies want to be seen by users, they need to register with Perplexity, which is currently free, but my best guess is that it will start costing money quite soon.
