Do you apply to jobs on LinkedIn? Then it’s time to buy Premium. Only Premium members can see if they are a Top Applicant, and only Top Applicants get prioritized by the new AI Hiring Assistant. Is there a risk that great talent is missed? Yes. Can large organizations optimize their recruitment processes? Absolutely. I think it will be very interesting to see how many companies adopt the AI Hiring Assistant and how it affects the job application process as a whole going forward.

Among other news this week: GitHub reports a 59% year-over-year surge in contributions to GenAI projects, and Python has finally overtaken JavaScript as the most popular language on GitHub. ByteDance (owner of TikTok) unveiled X-Portrait 2, which promises to change movie-making and social media forever once it is released. Black Forest Labs launched a new “RAW” mode that creates images very close to real photos, at least when it comes to shading and textures. NVIDIA launched four new tools for robot development, and Microsoft unveiled Magentic-One, a new multi-agent AI system.

THIS WEEK’S NEWS:

- LinkedIn Launches AI Hiring Assistant for Recruiters

- Python Overtakes JavaScript as Most Popular Language on GitHub

- Social Media is About to Change Forever: ByteDance Unveils X-Portrait 2

- Black Forest Labs Launches Flux Ultra and RAW Modes

- ElevenLabs Launches “Voice Design” for Custom and Unique Voices

- Waymo Robotaxis Cost More and Have Longer Trip Times

- NVIDIA Launches Major Updates for Robot Development

- Microsoft Unveils Magentic-One: Multi-Agent AI System

- Oasis Launches First Playable Open-World AI Model

- Tencent Releases Hunyuan3D-1.0, First Open-Source Text and Image-to-3D Model

- Tencent Releases Hunyuan-Large, Industry’s Largest Open-Source MoE Model

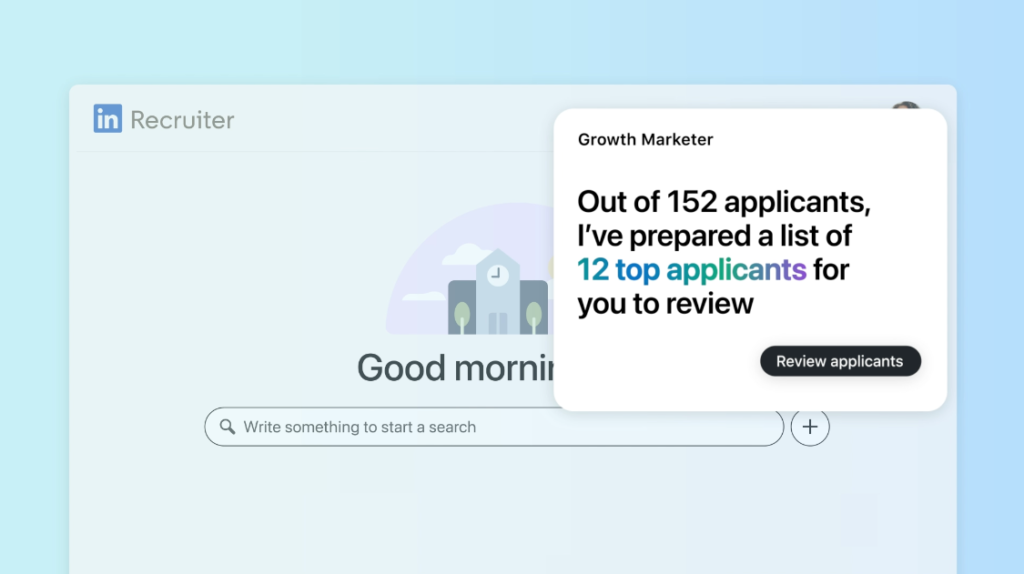

LinkedIn Launches AI Hiring Assistant for Recruiters

The News:

- LinkedIn just launched its first AI agent, “Hiring Assistant”, which can automate recruitment tasks and “streamline repetitive administrative tasks”.

- The Hiring Assistant can generate job descriptions from brief notes, build candidate pipelines, and manage applicant reviews automatically.

- The Hiring Assistant will soon get features like messaging, interview scheduling and candidate follow-ups.

My take:

- You can probably see where all this is going to end up. The only way to be sure your job application ends up on the Hiring Assistant’s shortlist is to buy LinkedIn Premium and fine-tune your profile until you are a Top Applicant for that job. I’m willing to bet that most large organizations will start using Hiring Assistant since it makes their work more efficient, even though it has the potential to filter out great candidates who have not fine-tuned their LinkedIn profiles. This means that basically anyone who applies to jobs on LinkedIn will be forced to buy a Premium subscription if they want to be absolutely sure they are not disqualified automatically in the first AI filtering step.

Python Overtakes JavaScript as Most Popular Language on GitHub

https://github.blog/news-insights/octoverse/octoverse-2024/

The News:

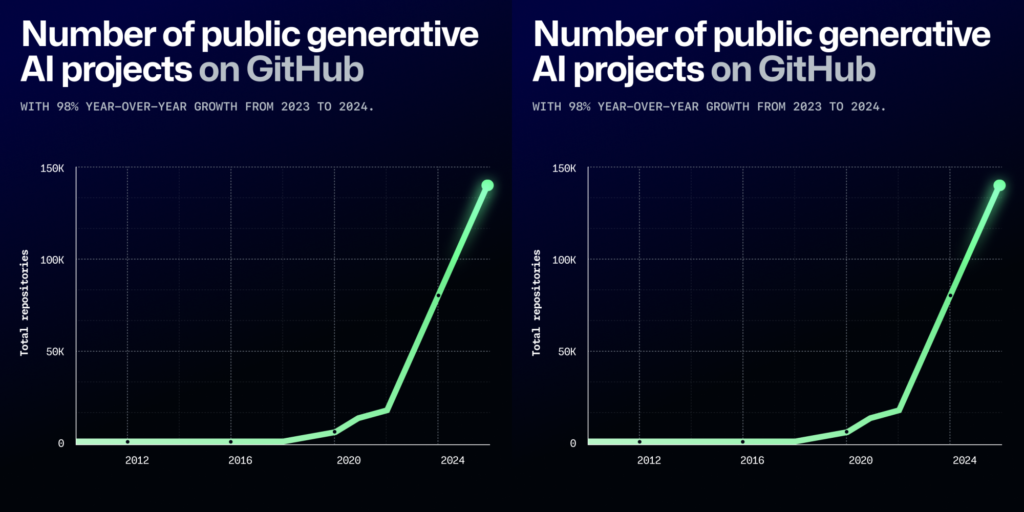

- Python has become the most popular programming language on GitHub, dethroning JavaScript after its 10-year reign as the top language.

- According to GitHub, in 2024 there was a 59% surge in the number of contributions to generative AI projects and a 98% increase in the number of projects overall.

- Jupyter Notebooks usage increased by 92% year-over-year, showing the growth of data science and machine learning projects.

What you might have missed: GitHub posted three important takeaways from this news:

- Generative AI models are becoming core building blocks in all software development.

- The global community of developers on GitHub is expanding rapidly—and the next generation of developers is getting started on GitHub.

- The notion of who a developer is and the scope of what a developer does is changing. The rise in Python, HCL, and Jupyter Notebooks, among other things, indicates that the notion of a developer extends beyond software developers to roles like operations or IT developers, machine learning researchers, and data scientists.

My take: I believe that every organization and software development team can benefit from Generative AI, from tools like v0, Replit, and Cursor to APIs such as the OpenAI Realtime API. There are very few processes that cannot benefit from AI. I meet companies every week that are just starting their AI journey, and once they see the benefits they just want to accelerate. If you and your organization are not using Generative AI today, feel free to reach out to me and let’s set up a meeting where I can give you pointers on where to start.

Social Media is About to Change Forever: ByteDance Unveils X-Portrait 2

https://byteaigc.github.io/X-Portrait2

The News:

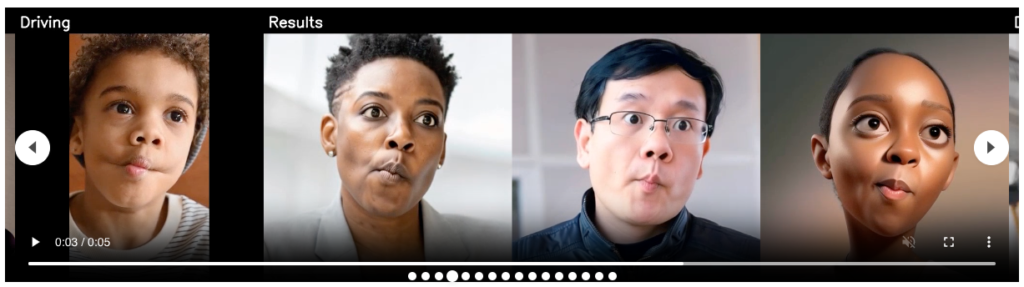

- ByteDance (owner of TikTok) has just unveiled X-Portrait 2, which enables highly expressive character animations from just a static image and a driving video.

- The system combines a state-of-the-art expression encoder with diffusion models to capture and transfer subtle facial expressions, including movements like pouting, tongue-out, and cheek-puffing.

- According to ByteDance, X-Portrait 2 is much better than competitors like Runway Act-One (launched just two weeks ago) at transferring fast head movements and minor expression changes while maintaining high fidelity.

My take: There are two sides to this. On the plus side, soon anyone with a smartphone will be able to create good-looking cartoons or movies at home, without having to worry about lighting, expensive cameras, or post-production tools. On the flip side, it won’t be long before social media as we know it is messed up in ways we could never have imagined. It has now been about 1.5 years since the Bold Glamour filter was launched on TikTok, one of the first filters that reconstructs the face entirely using a generative adversarial network (GAN). X-Portrait 2 continues in the same direction, but uses a model that basically allows you to impersonate anyone or anything. With AI models such as Flux rapidly improving in quality (the new RAW mode is incredible), it’s getting increasingly difficult to detect AI-generated images and videos.

Black Forest Labs Launches Flux Ultra and RAW Modes

https://blackforestlabs.ai/flux-1-1-ultra/

“Middle-aged man sitting at a cafe in an ice cream eating challenge. Shot with iPhone 11.”

The News:

- Black Forest Labs just launched two major improvements to Flux 1.1 Pro:

- Ultra mode: generates images at up to 4 MP while keeping generation time under 10 seconds.

- RAW mode: generates images “with a less synthetic, more natural aesthetic. Compared to other text-to-image models, raw mode significantly increases diversity in human subjects and enhances the realism of nature photography”.

My take: Flux is my current favorite among all AI image generators, and I have been wishing for higher resolutions for quite some time, so the new Ultra mode is very welcome. And while RAW mode does give the images a better overall “look”, the model is still just as bad at generating fine details as previous versions: if you zoom in, you can quickly tell the image is AI-generated. But it’s getting there!

Read more:

- You can try the new Flux 1.1 Ultra here (just remember to toggle “RAW” on): FLUX1.1 [pro] ultra | Text to Image | AI Playground | fal.ai

ElevenLabs Launches “Voice Design” for Custom and Unique Voices

https://elevenlabs.io/docs/product/voices/voice-lab/voice-design

The News:

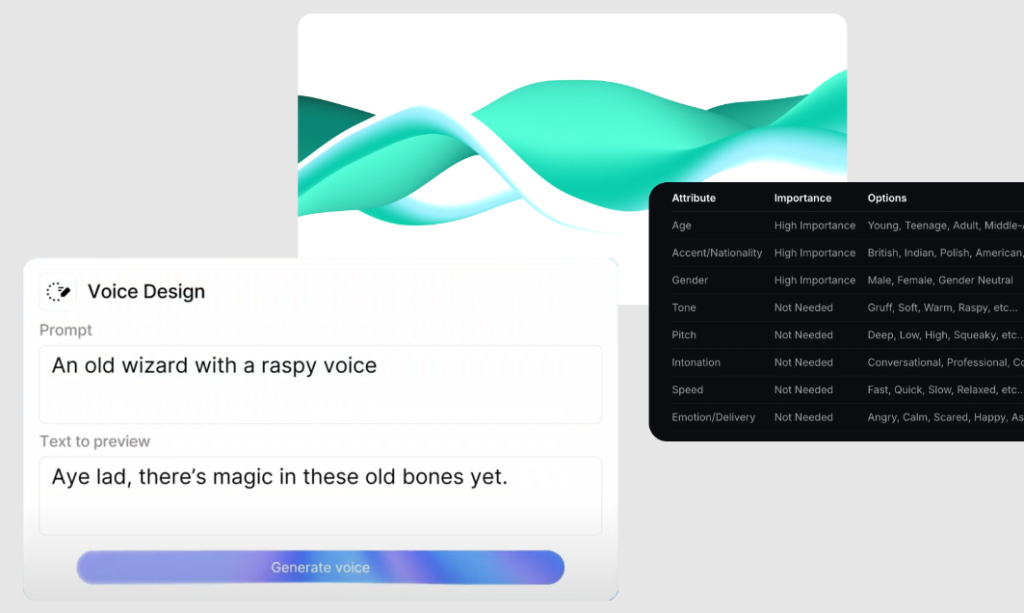

- ElevenLabs has launched Voice Design – a feature that lets users create unique voices using text prompts to specify characteristics like age, gender, accent, and emotion.

- According to ElevenLabs, “Voice Design helps creators fill the gaps when the exact voice they are looking for isn’t available in the Voice Library. Now if you can’t find a suitable voice for your project, you can create one”.

- The system can also adjust pitch, speed, intonation, and tone.

My take: ElevenLabs is my current go-to system for text-to-speech, and the new Voice Design feature just made it even better. ElevenLabs is the only model I have found that can read long passages of Swedish text that I can actually listen to without going crazy over all the mispronunciations. If you are using ElevenLabs or any other text-to-speech engine, I think it’s worth trying out the new Voice Design feature; it looks very promising!

Waymo Robotaxis Cost More and Have Longer Trip Times

https://futurism.com/the-byte/waymo-expensive-slower-taxis

The News:

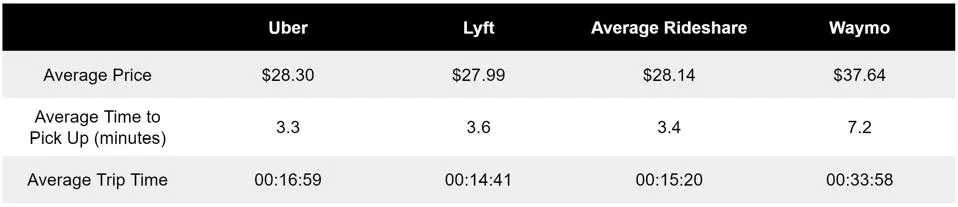

- A new Forbes study comparing 50 rides reveals that Waymo robotaxis are both more expensive and significantly slower than traditional ride-hailing services like Uber and Lyft.

- The average Waymo ride costs $9.50 more than human-driven alternatives, which averaged $28.14. Even after adding a 20% tip for human drivers, Waymo remains $3.87 more expensive.

- When it comes to time: Waymo’s pickup time averages 7.2 minutes versus 3.4 minutes for ride-sharing services, while trip duration averages 33 minutes 58 seconds compared to just 15 minutes 20 seconds for Uber/Lyft.
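The cost and time figures above can be cross-checked with a few lines of arithmetic. This is a minimal sketch that only re-derives the numbers quoted from the study; the variable names and the `to_seconds` helper are my own. It shows that a Waymo ride averages $28.14 + $9.50 = $37.64, while a human-driven ride with a 20% tip comes to about $33.77, which leaves the roughly $3.87 gap, and that Waymo trips took a bit more than twice as long.

```python
# Averages quoted from the Forbes comparison (USD and minutes)
HUMAN_FARE = 28.14     # average Uber/Lyft fare
WAYMO_PREMIUM = 9.50   # how much more the average Waymo ride cost
TIP_RATE = 0.20        # tip added on top of the human-driven fare

waymo_fare = HUMAN_FARE + WAYMO_PREMIUM
human_with_tip = HUMAN_FARE * (1 + TIP_RATE)
gap_after_tip = waymo_fare - human_with_tip


def to_seconds(mm_ss: str) -> int:
    """Convert an 'mm:ss' duration string to a number of seconds."""
    minutes, seconds = mm_ss.split(":")
    return int(minutes) * 60 + int(seconds)


trip_ratio = to_seconds("33:58") / to_seconds("15:20")
pickup_ratio = 7.2 / 3.4

print(f"Waymo fare: ${waymo_fare:.2f}")
print(f"Human-driven fare incl. 20% tip: ${human_with_tip:.2f}")
print(f"Waymo still costs ${gap_after_tip:.2f} more")
print(f"Trips take {trip_ratio:.1f}x as long; pickups take {pickup_ratio:.1f}x as long")
```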

My take: This study presents an interesting contrast to Waymo’s recent announcements about providing 150,000 rides per week. As with all technology, Waymo’s robotaxis will get much faster and cheaper over time, so even if they are much slower and more expensive today, this should change rapidly in the coming years.

NVIDIA Launches Major Updates for Robot Development

https://blogs.nvidia.com/blog/robot-learning-humanoid-development

The News:

- NVIDIA announced several new tools for AI-enabled robot development at the Conference on Robot Learning (CoRL) in Munich, including:

- NVIDIA Isaac Lab: A new open-source framework that allows developers to train robots in a virtual environment before deploying them in the real world.

- Project GR00T Workflows: A set of specialized tools that help developers create more capable humanoid robots. These tools cover everything from teaching robots to walk to helping them understand their environment.

- Cosmos Tokenizer: A tool that processes visual data (images and videos) 12x faster than existing solutions, making it more efficient for robots to learn from visual information.

- NeMo Curator: A system that helps organize and process video data 7x faster than before, making it easier for robots to learn from video demonstrations.

My take: Wow, what a massive update for the robotics industry! NVIDIA is basically providing an entire ecosystem for humanoid robot development, from simulation to perception to control. Among all the new features, I think the Cosmos Tokenizer is the most impressive: it processes visual data 12x faster while maintaining the same or better quality. I have included links to Isaac Lab, the Cosmos Tokenizer, and NeMo Curator below if you are interested in learning more!

Read more:

- Cosmos Tokenizer: A suite of image and video neural tokenizers | NVIDIA

- GitHub – isaac-sim/IsaacLab: Unified framework for robot learning built on NVIDIA Isaac Sim

- GitHub – NVIDIA/Cosmos-Tokenizer: A suite of image and video neural tokenizers

- GitHub – NVIDIA/NeMo-Curator: Scalable data pre processing and curation toolkit for LLMs

Microsoft Unveils Magentic-One: Multi-Agent AI System

The News:

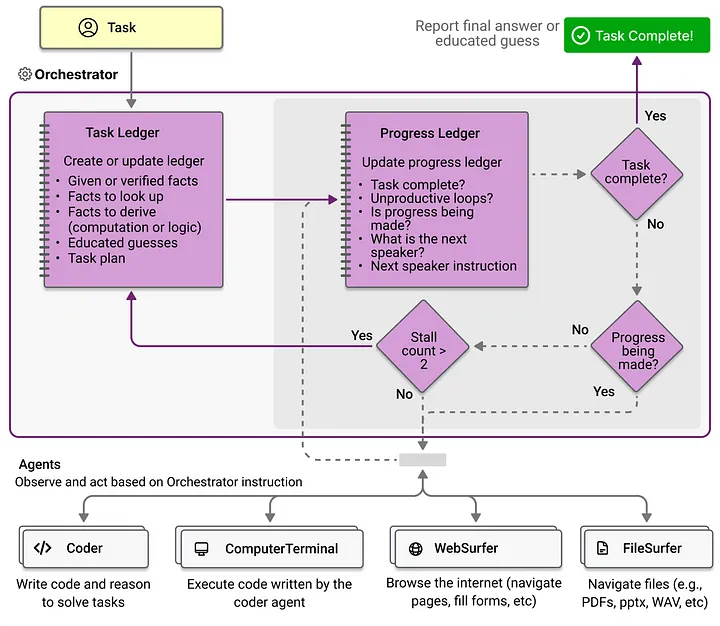

- Microsoft Research has introduced Magentic-One, a “generalist multi-agent system for solving complex tasks”. In practice, Magentic-One is built on top of Microsoft’s own AutoGen framework.

- Magentic-One uses a lead “Orchestrator” agent that directs four other specialized agents: WebSurfer for browser operations, FileSurfer for file navigation, Coder for programming tasks, and ComputerTerminal for executing code.

- Microsoft is making Magentic-One open-source under the Creative Commons CC BY 4.0 license, and is also releasing AutoGenBench, an evaluation tool for running agentic benchmarks. The CC BY 4.0 license lets you use, share, and adapt the work, including for commercial purposes, as long as you give credit to Microsoft.

What you might have missed: All agents in Magentic-One are based on GPT-4o; however, the system is model-agnostic and can use different models to support different capabilities.
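To make the orchestrator pattern more concrete, here is a minimal, hypothetical sketch in Python. It is not the AutoGen or Magentic-One API: the `Agent` and `Orchestrator` classes, the skill keys, and the backend names are illustrative assumptions, and the agents return placeholder strings instead of calling a model. It shows the two ideas described above: a lead agent that hands each step of a plan to a specialist, and specialists that can each be backed by a different model. For simplicity the plan is fixed up front; the real Orchestrator also tracks progress and can revise its plan along the way, which this sketch leaves out.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """A specialist agent (hypothetical stand-in, not an AutoGen class)."""
    name: str
    backend: str  # model (or tool) backing this agent; swappable per agent

    def run(self, subtask: str) -> str:
        # Placeholder: a real agent would prompt its model and/or use tools here.
        return f"{self.name} ({self.backend}) did: {subtask}"


@dataclass
class Orchestrator:
    """Lead agent that dispatches each step of a plan to the matching specialist."""
    agents: dict[str, Agent]
    log: list[str] = field(default_factory=list)

    def solve(self, plan: list[tuple[str, str]]) -> list[str]:
        results = []
        for skill, subtask in plan:
            agent = self.agents[skill]  # pick the specialist for this step
            results.append(agent.run(subtask))
            self.log.append(f"{skill} -> {agent.name}")
        return results


# Hypothetical team mirroring Magentic-One's four specialists
team = Orchestrator(agents={
    "web": Agent("WebSurfer", "gpt-4o"),
    "files": Agent("FileSurfer", "gpt-4o"),
    "code": Agent("Coder", "gpt-4o"),
    "shell": Agent("ComputerTerminal", "local-shell"),  # executes code, no LLM needed
})

plan = [
    ("web", "find the dataset URL"),
    ("code", "write a script that parses the dataset"),
    ("shell", "run the script"),
]

for line in team.solve(plan):
    print(line)
```

Swapping the model behind a single specialist only changes its `backend` value, which is the kind of flexibility a model-agnostic design is meant to give.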

My take: Magentic-One sounds very good in theory; however, I could not find a single web page or YouTube video that actually tests the framework to see how well it works for specific use cases, which is unusual. When OpenAI Swarm launched, dozens of demonstrations were posted within hours. The main benefit of Magentic-One seems to be ease of use, and if you are curious about exactly what makes Magentic-One better than AutoGen, Mehul Gupta made an excellent summary here.

Oasis Launches First Playable Open-World AI Model

The News:

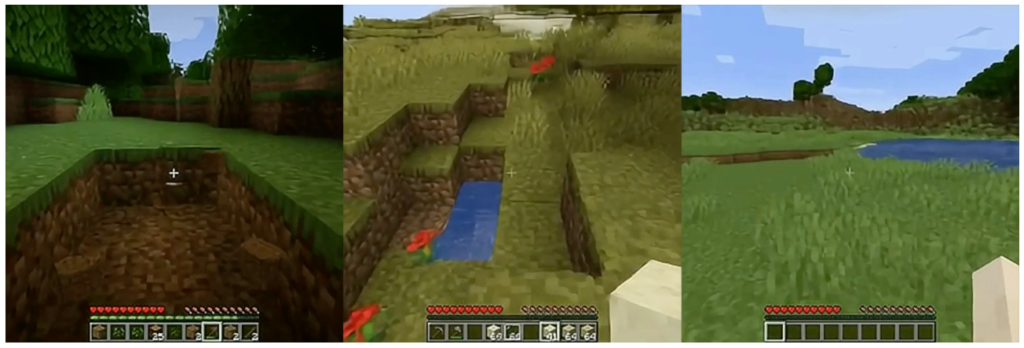

- Developers from Decart and Etched have unveiled Oasis, which they describe as the first playable, real-time, open-world AI model.

- Instead of running a traditional game engine, Oasis generates the game frame by frame with a transformer model, responding to the player’s keyboard and mouse input in real time.

- The model was trained on Minecraft gameplay and produces the graphics, physics, and game rules on the fly, so the world is generated as you move through it.

My take: Oasis definitely looks like a first step towards AI-powered game worlds that can be generated and modified in real time. However, much like the real-time Doom demo from a few weeks ago, this is a very early research prototype. For example, distant objects appear warped and fuzzy, and the model struggles with long contexts: objects seen on the horizon fall outside its context window and change when they move out of view and back in again. Still, for a first release I believe Oasis is amazing, and definitely worth a read if you are interested in game development and AI.
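The context problem described above can be illustrated with a deliberately simplified toy. The sketch below is not how Oasis is implemented; the `toy_next_frame` function, the four-frame `CONTEXT_FRAMES` window, and the object names are all hypothetical. A “frame” is just the set of objects in view, and the model can only reproduce objects that still appear somewhere in its sliding context window. Look away from the tower for longer than the window, and when you look back the model has to invent something new.

```python
from collections import deque

CONTEXT_FRAMES = 4  # hypothetical window size, not Oasis's real context length


def toy_next_frame(context: list[set[str]], action: str) -> set[str]:
    """Toy stand-in for a learned world model.

    A frame is the set of objects in view. The model only "remembers" objects
    that appear somewhere in its context window; anything older is gone.
    """
    remembered = set().union(*context)
    if action == "look_away":
        return set()  # nothing in view while looking at the empty sky
    if action == "look_back":
        # Objects still in the window come back unchanged; otherwise the
        # model has to invent something plausible in their place.
        return remembered or {"new_random_object"}
    return remembered


def simulate(frames_away: int) -> set[str]:
    """Start facing a tower, look away for `frames_away` frames, then look back."""
    context = deque([{"tower"}], maxlen=CONTEXT_FRAMES)
    frame: set[str] = set()
    for action in ["look_away"] * frames_away + ["look_back"]:
        frame = toy_next_frame(list(context), action)
        context.append(frame)  # oldest frame drops out once the window is full
    return frame


print(simulate(2))  # {'tower'}: the tower is still inside the window
print(simulate(5))  # {'new_random_object'}: the tower fell out of context
```

A larger window pushes the problem further out but does not remove it, which is why long play sessions are where this kind of drift shows up.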

Read more: You can actually download and run Oasis yourself from their GitHub page.

Tencent Releases Hunyuan3D-1.0, First Open-Source Text and Image-to-3D Model

https://huggingface.co/tencent/Hunyuan3D-1

The News:

- Tencent has launched Hunyuan3D-1.0, the first open source AI model supporting both text-to-3D and image-to-3D generation.

- Hunyuan3D uses a two-stage approach: first generating multi-view RGB images in 4 seconds, then reconstructing the 3D asset in 7 seconds using a feed-forward reconstruction model.

- The lite version can generate high-quality 3D assets within 10 seconds on an NVIDIA A100 GPU, while the standard version takes about 25 seconds.

- According to Tencent, the model has been released with complete model weights, inference code, and algorithms, and is available for free for commercial use.

My take: While Hunyuan3D is technically “open source”, its license agreement is very restrictive, making it unusable for most business applications. It is currently positioned more as a research and academic tool than a truly open-source commercial solution. So if you need text-to-3D or image-to-3D generation, my recommendation is still Meshy3D, which many consider state of the art for both.

Tencent Releases Hunyuan-Large, Industry’s Largest Open-Source MoE Model

https://github.com/Tencent/Tencent-Hunyuan-Large

The News:

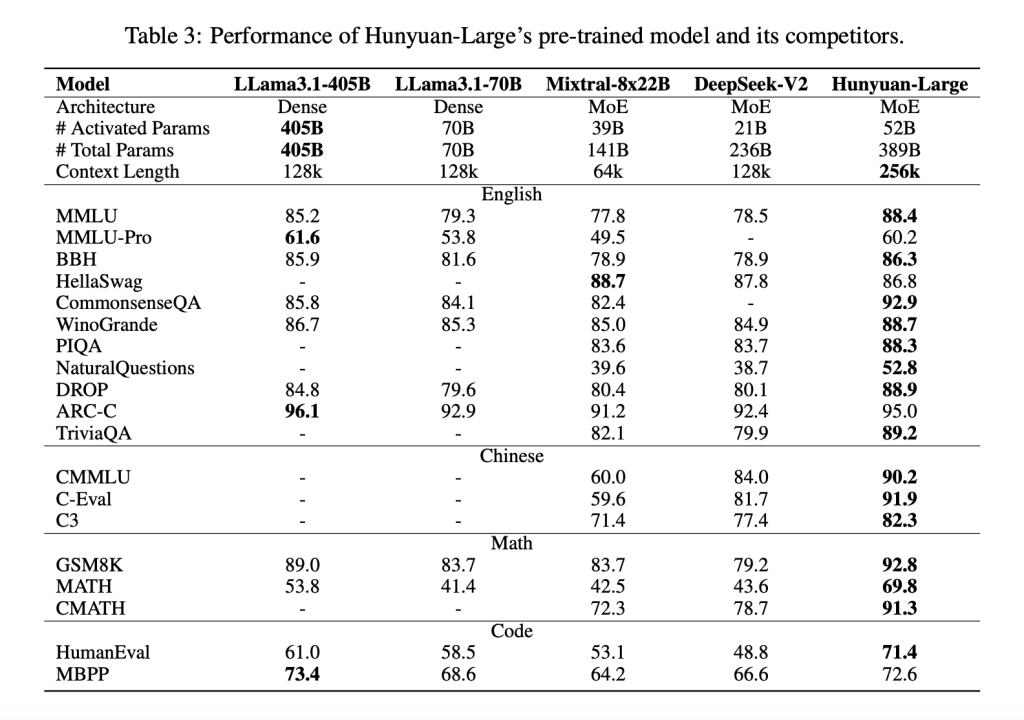

- Tencent has unveiled Hunyuan-Large, a Mixture of Experts (MoE) language model with 389 billion total parameters and 52 billion active parameters.

- Hunyuan-Large outperforms models like Llama 3.1 and Mixtral in several evaluations, and supports a context length of up to 256K tokens.

- In contrast to Hunyuan3D (above), the license agreement for Hunyuan-Large allows commercial use, but with several limitations. For example, you can have at most 100 million monthly active users before you need special permission from Tencent, you cannot use the model to improve other language models, and you cannot use it within the EU. You must also attribute your work with “Powered by Tencent Hunyuan”.
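For readers new to Mixture of Experts, the gap between 389 billion total and 52 billion active parameters comes from routing: a small router scores the experts for each token, and only the top-scoring few are actually run. Below is a toy top-k routing layer in plain Python; the sizes (8 experts, top-2, 4-dimensional vectors) are arbitrary illustrative values, not Hunyuan-Large’s configuration. Note that in a real model the active count also includes layers every token passes through (such as attention), so the active fraction is not simply k divided by the number of experts.

```python
import math
import random

NUM_EXPERTS = 8  # toy sizes, not Hunyuan-Large's configuration
TOP_K = 2
DIM = 4

random.seed(0)


def rand_matrix(rows: int, cols: int) -> list[list[float]]:
    return [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(xs: list[float]) -> list[float]:
    top = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


router = rand_matrix(NUM_EXPERTS, DIM)                         # one score per expert
experts = [rand_matrix(DIM, DIM) for _ in range(NUM_EXPERTS)]  # one weight matrix per expert


def moe_layer(token: list[float]) -> tuple[list[float], list[int]]:
    scores = matvec(router, token)
    chosen = sorted(range(NUM_EXPERTS), key=lambda i: scores[i], reverse=True)[:TOP_K]
    gates = softmax([scores[i] for i in chosen])  # renormalize over the chosen experts only
    out = [0.0] * DIM
    for gate, i in zip(gates, chosen):
        y = matvec(experts[i], token)  # only TOP_K experts do any work for this token
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


output, used = moe_layer([0.5, -1.0, 0.25, 2.0])
print("experts used:", used)
print("fraction of expert weights touched:", TOP_K / NUM_EXPERTS)
print("Hunyuan-Large active/total parameters:", round(52 / 389, 3))
```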

My take: These “almost open source” models are getting annoying. I think it would be more accurate to call them “source available, but with significant restrictions”. While the model weights and inference code for Hunyuan-Large are publicly available, the core training code and data remain proprietary. And the license terms are much more restrictive than traditional open-source licenses like Apache or MIT, making it closer to a restricted commercial license than “true” open source.
