It finally happened: ChatGPT can now search the web and summarize the results for you. Suddenly, competing models such as Gemini and Claude will appear “dumb”, and I expect both Anthropic and Google to add search functionality to their LLMs shortly. The bigger question is how this will affect Google. If they add web search to Gemini, they will have two competing ways to search the web: (1) Gemini with web search, and (2) Google Search with Gemini summaries. And how do ads fit into all this?

If you are a company that depends on ads and SEO to reach clients, this will have a significant effect on your business. Users don’t see ads when ChatGPT summarizes the web, and they don’t see the web page you spent thousands of dollars designing. How will companies reach end users if everyone switches to LLM-based summaries for most of their searches?

Other news this week: GitHub announced multi-file editing for Copilot, Copilot for Xcode, and multi-model support! Waymo announced that they now provide over 150,000 fully autonomous rides per week, up from 100,000 rides just two months ago. And Boston Dynamics showed off some amazing moves from their latest fully autonomous robot!

THIS WEEK’S NEWS:

- ChatGPT Launches Search and Chrome Extension

- Copilot Adds Multi-Model and Xcode Support

- Waymo Closes $5.6 Billion in Funding, Provides 150,000 Rides per Week

- Anthropic Launches Desktop App and Image Analysis

- Pushing the Frontiers of Audio Generation – Details of Audio Overviews

- New Image Generation Model: Recraft V3

- Boston Dynamics Shows Off Amazing New Robot Moves

ChatGPT Launches Search and Chrome Extension

https://openai.com/index/introducing-chatgpt-search

The News:

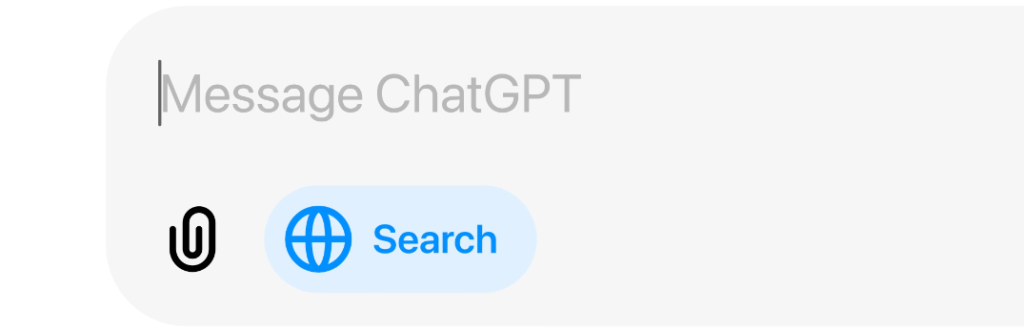

- OpenAI just announced that they have integrated web search into ChatGPT for Plus and Team users, and will expand it to Enterprise and Education users within weeks and to free users in the coming months.

- The new search feature provides instant answers about topics such as news, sports, stocks, and weather, with direct source attribution and links.

- ChatGPT automatically triggers a web search when needed, but you can also force one by clicking the globe icon.

- The system runs on a specialized version of GPT-4o, fine-tuned for web pages.

- Major publishers such as AP, Reuters, Axel Springer, and others have signed licensing deals with OpenAI to provide content directly to the platform.

What you might have missed: OpenAI also released a Google Chrome extension that sets ChatGPT search as your default search engine. There are some caveats, however. For example, every search you make in Chrome is now added as an individual chat in ChatGPT. It would also be great if you could send specific queries directly to Google instead of going all-in on ChatGPT; for most things, I still prefer a short list of links to web pages.

My take: From my tests, OpenAI web search works better than Perplexity for non-US content. I have cancelled my Perplexity account and will go all-in on ChatGPT Web Search going forward. On October 20, Perplexity started their latest funding round to raise $500 million. It will be interesting to see whether they manage to raise that amount now that OpenAI has entered as a direct competitor, or whether they will have to settle for less. The current implementation of web search in ChatGPT is not a direct replacement for Google Search: if you just want the top results, you will probably be most effective continuing to use Google Search. However, for those cases where you spend a few minutes searching for the right information, clicking through dozens of pages, the new ChatGPT Web Search is probably just what you want.

Copilot Adds Multi-Model and Xcode Support

https://github.blog/news-insights/product-news/bringing-developer-choice-to-copilot/

The News:

- At its “GitHub Universe 2024” conference, GitHub announced a large number of upgrades, including:

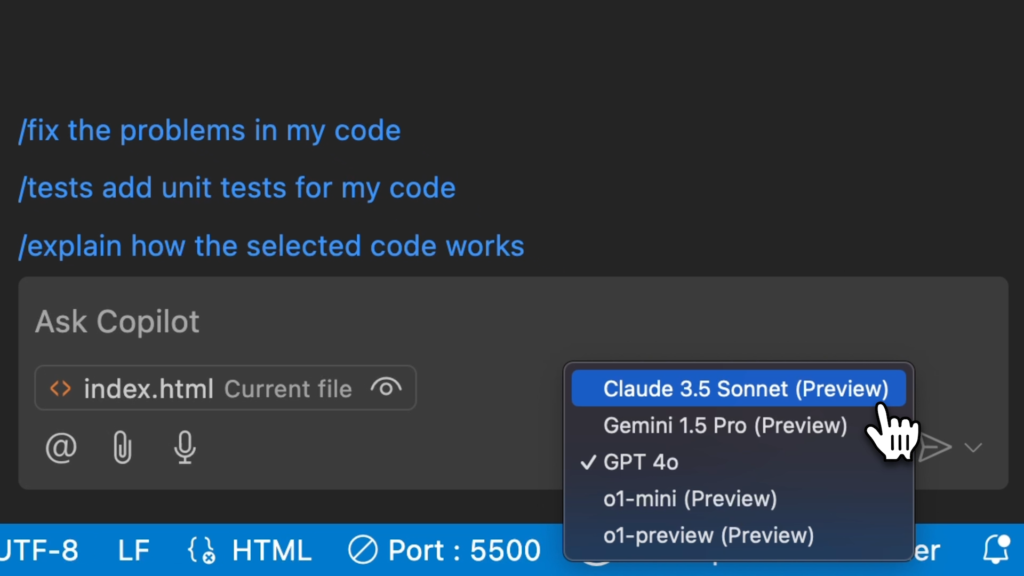

- Multi-Model Support for GitHub Copilot: Developers can now choose from AI models by Anthropic, Google, and OpenAI. You could, for example, use OpenAI o1 for complex problem solving and Claude 3.5 Sonnet for high-quality code generation!

- Introduction of GitHub Spark: A new AI tool, GitHub Spark, makes it possible to build complete web applications using just natural language. “With Spark, we will enable over one billion personal computer and mobile phone users to build and share their own micro apps directly on GitHub.”

- Code Review Capabilities: GitHub Copilot now offers AI-assisted code reviews, providing immediate feedback on pull requests.

- Multi-File Editing: Available in Visual Studio Code, this feature allows for simultaneous edits across multiple files.

- Copilot extension for Xcode: The first version only supports code completion, with features such as Copilot Chat planned for future releases.

My take: A lot of people are raving about GitHub Spark, and some even believe that it kills the Cursor IDE. What many people seem to miss is that Cursor has a set of proprietary, fine-tuned models that both provide code completion in the editor and navigate to the next recommended action, all triggered by the Tab key. These models, in combination with a large cloud-based model such as Claude 3.5, are what make Cursor great. Multi-file editing in Copilot is a step in the right direction and matches the “Composer” feature in Cursor, but the small fine-tuned model is what makes editing in Cursor so fast and enjoyable, and Cursor is still unique in that offering. Still, it’s great progress by GitHub, and everyone using Copilot should be happy about these improvements!

Read more:

- GitHub Copilot will support models from Anthropic, Google, and OpenAI – The Verge

- GitHub’s Copilot comes to Apple’s Xcode | TechCrunch

- GitHub Universe 2024 opening keynote: delivering phase two of AI code generation – YouTube

Waymo Closes $5.6 Billion in Funding, Provides 150,000 Rides per Week

https://twitter.com/Waymo/status/1851365483972538407

The News:

- Waymo just announced that they are providing over 150,000 paid trips every week, a 50% increase from just two months ago!

- Waymo is also driving over 1 million fully autonomous miles per week, and that figure is growing quickly!

- Finally, Waymo closed a $5.6 billion funding round with existing investors including Andreessen Horowitz, Fidelity, Perry Creek, Silver Lake, Tiger Global, and T. Rowe Price.

My take: Once AI is “good enough” in a specific domain, it tends to overtake everything within that domain. We have seen it with text and programming, and we are starting to see it with music, images, video, and now autonomous vehicles. According to Waymo’s own safety data, it is not only safer than traditional taxis but much safer than human-driven cars in general. I believe the future for cars is autonomous robotaxis, and we are seeing it grow right in front of our eyes. If you are still skeptical about robotaxis, just check the data from Waymo and their growth rate.

Read more:

- Alphabet’s Waymo closes $5.6 bln funding to expand autonomous ride-hailing service | Reuters

- Safety – Waymo

Anthropic Launches Desktop App and Image Analysis

The News:

- Anthropic has released their Claude desktop app for macOS and Windows. Much like the ChatGPT app for Windows, both of Anthropic’s apps are Electron-based wrappers around the web app (the exception here is ChatGPT for macOS, which is truly native).

- Anthropic also announced that Claude can now view images within a PDF, in addition to text. This should help Claude 3.5 “more accurately understand complex documents, such as those laden with charts or graphics”.

My take: If you use Claude, go ahead and download the apps; being able to start a new chat with a keyboard shortcut is great. The image parsing functionality is also very welcome. I haven’t tried it yet but will do so next week. Claude 3.5 now also supports PDF inputs in the API, in beta.
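For readers who want to try the PDF beta, here is a minimal Python sketch that only builds the request headers and JSON body for Anthropic’s Messages API without sending anything. The beta header value `pdfs-2024-09-25`, the `document` content-block shape, and the model name reflect Anthropic’s documentation at the time of writing and may have changed. The helper `build_pdf_request` is hypothetical, so check the current API docs before relying on it.

```python
import base64
import json

# Assumptions: header values and block shape follow Anthropic's PDF beta docs
# at the time of writing; verify against the current API reference.
API_URL = "https://api.anthropic.com/v1/messages"
PDF_BETA_HEADER = "pdfs-2024-09-25"


def build_pdf_request(pdf_bytes: bytes, question: str, api_key: str,
                      model: str = "claude-3-5-sonnet-20241022") -> tuple[dict, dict]:
    """Hypothetical helper: return (headers, body) for a Messages API call with a PDF attached."""
    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "anthropic-beta": PDF_BETA_HEADER,  # opts in to the PDF beta
        "content-type": "application/json",
    }
    body = {
        "model": model,
        "max_tokens": 1024,
        "messages": [{
            "role": "user",
            "content": [
                # The PDF travels as a base64-encoded "document" content block...
                {
                    "type": "document",
                    "source": {
                        "type": "base64",
                        "media_type": "application/pdf",
                        "data": base64.standard_b64encode(pdf_bytes).decode("ascii"),
                    },
                },
                # ...followed by the user's question as a plain text block.
                {"type": "text", "text": question},
            ],
        }],
    }
    return headers, body


if __name__ == "__main__":
    # Placeholder bytes and key; a real call would read an actual PDF file.
    hdrs, payload = build_pdf_request(b"%PDF-1.4", "Summarize the charts in this report.", "YOUR_API_KEY")
    print(hdrs["anthropic-beta"])
    print(json.dumps(payload)[:80])
```

To actually send the request, you would POST `json.dumps(body)` with these headers to `API_URL` using any HTTP client, or use Anthropic’s official SDK instead of hand-building the payload.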

Read more:

- Claude can now view images within a PDF, in addition to text / X

- The Anthropic API now also supports PDF inputs in beta / X

Pushing the Frontiers of Audio Generation – Details of Audio Overviews

https://deepmind.google/discover/blog/pushing-the-frontiers-of-audio-generation

The News:

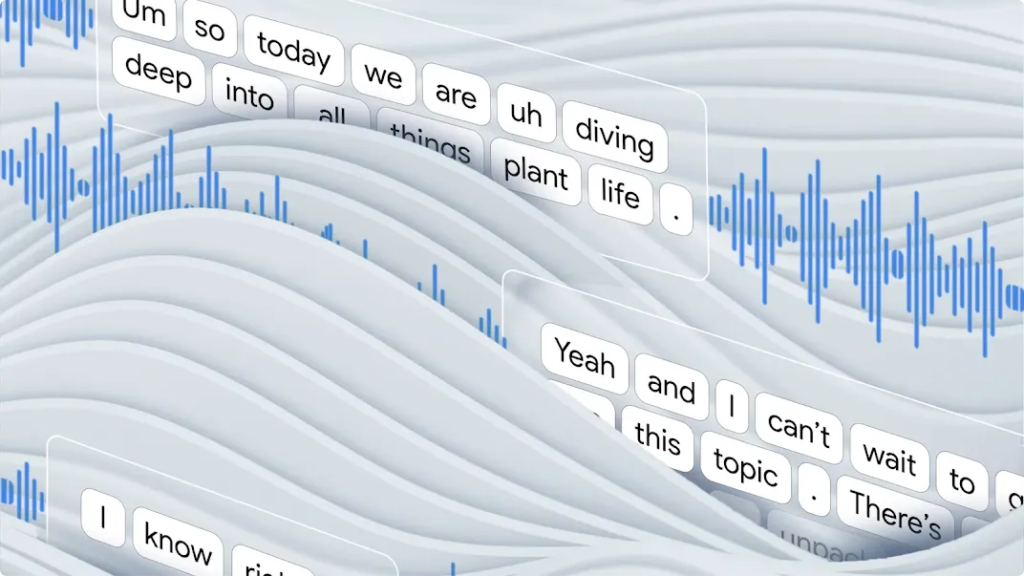

- If you, like me, were greatly impressed by the digital talk show hosts in NotebookLM (the feature is called “Audio Overviews”), Google DeepMind has now published details about the specialized Transformer architecture they developed for this purpose.

- Google also outlines how they trained the model and how it learned when to say “umm” and “aah”.

My take: I love NotebookLM Audio Overviews, and once they add voice and personality customization, I think this is it. This is what most of us will use to condense all the content released every day into a manageable format to consume during a walk or run.

New Image Generation Model: Recraft V3

https://twitter.com/recraftai/status/1851757270599664013

The News:

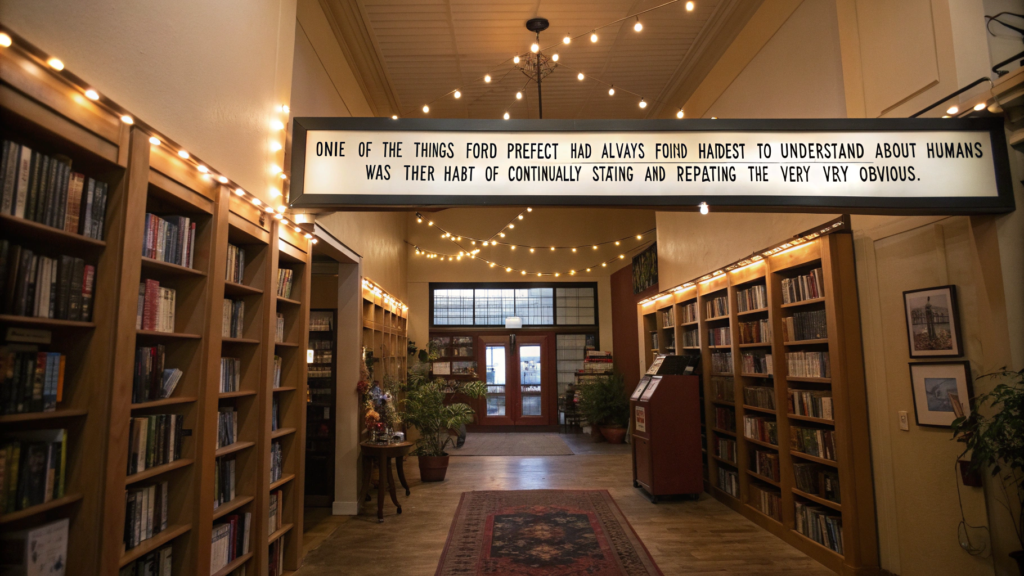

- The startup Recraft just announced its new model, V3, which according to Recraft is “the only model in the world that can generate images with long texts, as opposed to just one or a couple of words.”

- The model achieved a 72% win rate and an Elo score of 1172 on the Artificial Analysis leaderboard, outperforming models from Midjourney and FLUX.

- “Built for graphic design” – using Recraft V3 you can control text size and placement in the image.

- “Precise style control”: If you have a brand style, you can send example images to Recraft, and it will learn the style and apply it to new images.

My take: There are a few features in Recraft that I really would have liked (if they worked). First, I like being able to output long texts; on the occasions where I need it, it would make my life much easier. I also like that I can control how the text is displayed, and that it can learn my company’s brand style. From the few examples I tried, however (see image above), it failed completely. Maybe it’s OK with “slightly longer texts than you typically send to Flux”, but hey, Flux will probably handle those longer texts soon enough.


Boston Dynamics Shows Off Amazing New Robot Moves

The News:

- Boston Dynamics just showed a new demo where their robot Atlas moves engine covers between supplier containers and a mobile sequencing dolly, fully autonomously. As input, the robot receives only a list of bin locations to move parts between.

- Atlas uses an ML vision model to detect and localize the environment fixtures and individual bins. It uses a specialized grasping policy and continuously estimates the state of manipulated objects to achieve the task.

- There are no prescribed or teleoperated movements; all motions are generated autonomously.

My take: There are a few things that really impressed me in the video. The first is how Atlas uses its extended neck and waist to turn its head and torso before moving. Atlas can also rotate its waist and start walking backwards while simultaneously rotating its upper torso. This combination of movements makes Atlas move almost like a human. Compared to robots from Figure, Sanctuary AI, and Tesla, Atlas has only three fingers, but that seems to work very well in this example.

Read more: